Mirror of https://github.com/prowler-cloud/prowler.git, synced 2025-12-19 05:17:47 +00:00.

Compare commits: 0d0dabe166...2.12.0 (99 commits)

Commits (SHA1): 8818f47333, 3cffe72273, 135aaca851, cf8df051de, bef42f3f2d, 3d86bf1705, 5a43ec951a, b0e6ab6e31, b7fb38cc9e, f29f7fc239, 2997ff0f1c, 11dc0aa5b2, 8bddb9b265, 689e292585, bff2aabda6, 4b29293362, 4e24103dc6, 3b90347849, 6a7a037cec, 927c13b9c6, 11cc8e998b, 4a71739c56, aedc5cd0ad, 3d81307e56, 918661bd7a, 99f9abe3f6, f2950764f0, d9777a68c7, 2a4cc9a5f8, 1f0c210926, dd64c7d226, 865f79f5b3, 1f8a4c1022, 1e422f20aa, 29eda28bf3, f67f0cc66d, 721cafa0cd, c1d60054e9, b95b3f68d3, 81b6e27eb8, d69678424b, a43c1aceec, f70cf8d81e, 83b6c79203, 1192c038b2, 4ebbf6553e, c501d63382, 72d6d3f535, ddd34dc9cc, 03b1c10d13, 4cd5b8fd04, f0ce17182b, 2a8a7d844b, ff33f426e5, f691046c1f, 9fad8735b8, c632055517, fd850790d5, 912d5d7f8c, d88a136ac3, 172484cf08, 821083639a, e4f0f3ec87, cc6302f7b8, c89fd82856, 0e29a92d42, 835d8ffe5d, 21ee2068a6, 0ad149942b, 66305768c0, 05f98fe993, 89416f37af, 7285ddcb4e, 8993a4f707, 633d7bd8a8, 3944ea2055, d85d0f5877, d32a7986a5, 71813425bd, da000b54ca, 74a9b42d9f, f9322ab3aa, 5becaca2c4, 50a670fbc4, 48f405a696, bc56c4242e, 1b63256b9c, 7930b449b3, e5cd42da55, 2a54bbf901, 2e134ed947, ba727391db, d4346149fa, 2637fc5132, ac5135470b, 613966aecf, 83ddcb9c39, 957c2433cf, c10b367070

```
@@ -1,6 +1,17 @@
# Ignore git files
.git/
.github/

# Ignore Dodckerfile
Dockerfile

# Ignore hidden files
.pre-commit-config.yaml
.dockerignore
.gitignore
.pytest*
.DS_Store

# Ignore output directories
output/
junit-reports/
```

.github/ISSUE_TEMPLATE/config.yml (5 changes, vendored, normal file)

```
@@ -0,0 +1,5 @@
blank_issues_enabled: false
contact_links:
- name: Questions & Help
url: https://github.com/prowler-cloud/prowler/discussions/categories/q-a
about: Please ask and answer questions here.
```

.github/workflows/build-lint-push-containers.yml (39 changes, vendored)

```
@@ -7,17 +7,19 @@ on:
paths-ignore:
- '.github/**'
- 'README.md'


release:
types: [published]
types: [published, edited]

env:
AWS_REGION_STG: eu-west-1
AWS_REGION_PLATFORM: eu-west-1
AWS_REGION_PRO: us-east-1
IMAGE_NAME: prowler
LATEST_TAG: latest
STABLE_TAG: stable
TEMPORARY_TAG: temporary
DOCKERFILE_PATH: util/Dockerfile
DOCKERFILE_PATH: ./Dockerfile

jobs:
# Lint Dockerfile using Hadolint
```

```
@@ -145,25 +147,25 @@ jobs:
with:
registry: ${{ secrets.STG_ECR }}
-
name: Configure AWS Credentials -- PRO
name: Configure AWS Credentials -- PLATFORM
if: github.event_name == 'release'
uses: aws-actions/configure-aws-credentials@v1
with:
aws-region: ${{ env.AWS_REGION_PRO }}
role-to-assume: ${{ secrets.PRO_IAM_ROLE_ARN }}
aws-region: ${{ env.AWS_REGION_PLATFORM }}
role-to-assume: ${{ secrets.STG_IAM_ROLE_ARN }}
role-session-name: build-lint-containers-pro
-
name: Login to ECR -- PRO
name: Login to ECR -- PLATFORM
if: github.event_name == 'release'
uses: docker/login-action@v2
with:
registry: ${{ secrets.PRO_ECR }}
registry: ${{ secrets.PLATFORM_ECR }}
-
# Push to master branch - push "latest" tag
name: Tag (latest)
if: github.event_name == 'push'
run: |
docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.STG_ECR }}/${{ secrets.STG_ECR_REPOSITORY }}:${{ env.LATEST_TAG }}
docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.PLATFORM_ECR }}/${{ secrets.PLATFORM_ECR_REPOSITORY }}:${{ env.LATEST_TAG }}
docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.LATEST_TAG }}
docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.LATEST_TAG }}
-
```

```
@@ -171,25 +173,34 @@ jobs:
name: Push (latest)
if: github.event_name == 'push'
run: |
docker push ${{ secrets.STG_ECR }}/${{ secrets.STG_ECR_REPOSITORY }}:${{ env.LATEST_TAG }}
docker push ${{ secrets.PLATFORM_ECR }}/${{ secrets.PLATFORM_ECR_REPOSITORY }}:${{ env.LATEST_TAG }}
docker push ${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.LATEST_TAG }}
docker push ${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.LATEST_TAG }}
-
# Push the new release
# Tag the new release (stable and release tag)
name: Tag (release)
if: github.event_name == 'release'
run: |
docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.PRO_ECR }}/${{ secrets.PRO_ECR }}:${{ github.event.release.tag_name }}
docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.PLATFORM_ECR }}/${{ secrets.PLATFORM_ECR_REPOSITORY }}:${{ github.event.release.tag_name }}
docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ github.event.release.tag_name }}
docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ github.event.release.tag_name }}

docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.PLATFORM_ECR }}/${{ secrets.PLATFORM_ECR_REPOSITORY }}:${{ env.STABLE_TAG }}
docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.STABLE_TAG }}
docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.STABLE_TAG }}

-
# Push the new release
# Push the new release (stable and release tag)
name: Push (release)
if: github.event_name == 'release'
run: |
docker push ${{ secrets.PRO_ECR }}/${{ secrets.PRO_ECR }}:${{ github.event.release.tag_name }}
docker push ${{ secrets.PLATFORM_ECR }}/${{ secrets.PLATFORM_ECR_REPOSITORY }}:${{ github.event.release.tag_name }}
docker push ${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ github.event.release.tag_name }}
docker push ${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ github.event.release.tag_name }}

docker push ${{ secrets.PLATFORM_ECR }}/${{ secrets.PLATFORM_ECR_REPOSITORY }}:${{ env.STABLE_TAG }}
docker push ${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.STABLE_TAG }}
docker push ${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.STABLE_TAG }}
-
name: Delete artifacts
if: always()
```
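
The `Tag (latest)`, `Tag (release)` and `Push` steps in the workflow hunks above follow one rule: a `push` event publishes `LATEST_TAG`, while a `release` event publishes the release's own tag plus `STABLE_TAG` (for the PLATFORM, Docker Hub and public ECR repositories in the new version). The sketch below restates that rule in plain shell so it can be checked in isolation; the functions `image_tags` and `image_ref` and the registry `registry.example.com` are hypothetical and are not part of the workflow.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of the tagging rule in build-lint-push-containers.yml:
# a "push" event gets LATEST_TAG; a "release" event gets the release tag plus STABLE_TAG.
set -euo pipefail

LATEST_TAG="latest"
STABLE_TAG="stable"

# image_tags EVENT_NAME [RELEASE_TAG]: print one tag per line.
image_tags() {
  local event="$1"
  local release_tag="${2:-}"
  case "$event" in
    push)
      echo "$LATEST_TAG"
      ;;
    release)
      if [ -z "$release_tag" ]; then
        echo "image_tags: a release event needs a tag name" >&2
        return 1
      fi
      echo "$release_tag"
      echo "$STABLE_TAG"
      ;;
    *)
      # Any other event publishes nothing in this sketch.
      ;;
  esac
}

# image_ref REGISTRY REPOSITORY TAG: print REGISTRY/REPOSITORY:TAG, the shape passed to "docker tag".
image_ref() {
  printf '%s/%s:%s\n' "$1" "$2" "$3"
}

# Example with a placeholder registry: the references a 2.12.0 release would receive.
for tag in $(image_tags release 2.12.0); do
  image_ref "registry.example.com" "prowler" "$tag"
done
```

Written this way it is easy to see that `stable` only moves on release events, which matches the README's description of the `latest` and `stable` container tags.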

.github/workflows/find-secrets.yml (2 changes, vendored)

```
@@ -11,7 +11,7 @@ jobs:
with:
fetch-depth: 0
- name: TruffleHog OSS
uses: trufflesecurity/trufflehog@v3.4.4
uses: trufflesecurity/trufflehog@v3.13.0
with:
path: ./
base: ${{ github.event.repository.default_branch }}
```

.github/workflows/pull-request.yml (41 changes, vendored, normal file)

```
@@ -0,0 +1,41 @@
name: Lint & Test

on:
push:
branches:
- 'prowler-3.0-dev'
pull_request:
branches:
- 'prowler-3.0-dev'

jobs:
build:

runs-on: ubuntu-latest
strategy:
matrix:
python-version: ["3.9"]

steps:
- uses: actions/checkout@v3
- name: Set up Python ${{ matrix.python-version }}
uses: actions/setup-python@v4
with:
python-version: ${{ matrix.python-version }}
- name: Install dependencies
run: |
python -m pip install --upgrade pip
pip install pipenv
pipenv install
- name: Bandit
run: |
pipenv run bandit -q -lll -x '*_test.py,./contrib/' -r .
- name: Safety
run: |
pipenv run safety check
- name: Vulture
run: |
pipenv run vulture --exclude "contrib" --min-confidence 100 .
- name: Test with pytest
run: |
pipenv run pytest -n auto
```

.github/workflows/refresh_aws_services_regions.yml (50 changes, vendored, normal file)

```
@@ -0,0 +1,50 @@
# This is a basic workflow to help you get started with Actions

name: Refresh regions of AWS services

on:
schedule:
- cron: "0 9 * * *" #runs at 09:00 UTC everyday

env:
GITHUB_BRANCH: "prowler-3.0-dev"

# A workflow run is made up of one or more jobs that can run sequentially or in parallel
jobs:
# This workflow contains a single job called "build"
build:
# The type of runner that the job will run on
runs-on: ubuntu-latest
# Steps represent a sequence of tasks that will be executed as part of the job
steps:
# Checks-out your repository under $GITHUB_WORKSPACE, so your job can access it
- uses: actions/checkout@v3
with:
ref: ${{ env.GITHUB_BRANCH }}

- name: setup python
uses: actions/setup-python@v2
with:
python-version: 3.9 #install the python needed

# Runs a single command using the runners shell
- name: Run a one-line script
run: python3 util/update_aws_services_regions.py

# Create pull request
- name: Create Pull Request
uses: peter-evans/create-pull-request@v4
with:
token: ${{ secrets.GITHUB_TOKEN }}
commit-message: "feat(regions_update): Update regions for AWS services."
branch: "aws-services-regions-updated"
labels: "status/waiting-for-revision, severity/low"
title: "feat(regions_update): Changes in regions for AWS services."
body: |
### Description

This PR updates the regions for AWS services.

### License

By submitting this pull request, I confirm that my contribution is made under the terms of the Apache 2.0 license.
```

```
@@ -8,6 +8,22 @@ repos:
- id: check-json
- id: end-of-file-fixer
- id: trailing-whitespace
exclude: 'README.md'
- id: no-commit-to-branch
- id: pretty-format-json
args: ['--autofix']

- repo: https://github.com/koalaman/shellcheck-precommit
rev: v0.8.0
hooks:
- id: shellcheck

- repo: https://github.com/hadolint/hadolint
rev: v2.10.0
hooks:
- id: hadolint
name: Lint Dockerfiles
description: Runs hadolint to lint Dockerfiles
language: system
types: ["dockerfile"]
entry: hadolint
```

Dockerfile (64 changes, normal file)

```
@@ -0,0 +1,64 @@
# Build command
# docker build --platform=linux/amd64 --no-cache -t prowler:latest -f ./Dockerfile .

# hadolint ignore=DL3007
FROM public.ecr.aws/amazonlinux/amazonlinux:latest

LABEL maintainer="https://github.com/prowler-cloud/prowler"

ARG USERNAME=prowler
ARG USERID=34000

# Prepare image as root
USER 0
# System dependencies
# hadolint ignore=DL3006,DL3013,DL3033
RUN yum upgrade -y && \
yum install -y python3 bash curl jq coreutils py3-pip which unzip shadow-utils && \
yum clean all && \
rm -rf /var/cache/yum

RUN amazon-linux-extras install -y epel postgresql14 && \
yum clean all && \
rm -rf /var/cache/yum

# Create non-root user
RUN useradd -l -s /bin/bash -U -u ${USERID} ${USERNAME}

USER ${USERNAME}

# Python dependencies
# hadolint ignore=DL3006,DL3013,DL3042
RUN pip3 install --upgrade pip && \
pip3 install --no-cache-dir boto3 detect-secrets==1.0.3 && \
pip3 cache purge
# Set Python PATH
ENV PATH="/home/${USERNAME}/.local/bin:${PATH}"

USER 0

# Install AWS CLI
RUN curl https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip -o awscliv2.zip && \
unzip -q awscliv2.zip && \
aws/install && \
rm -rf aws awscliv2.zip

# Keep Python2 for yum
RUN sed -i '1 s/python/python2.7/' /usr/bin/yum

# Set Python3
RUN rm /usr/bin/python && \
ln -s /usr/bin/python3 /usr/bin/python

# Set working directory
WORKDIR /prowler

# Copy all files
COPY . ./

# Set files ownership
RUN chown -R prowler .

USER ${USERNAME}

ENTRYPOINT ["./prowler"]
```
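
The `# Keep Python2 for yum` step edits only line 1 of `/usr/bin/yum` (its shebang), replacing the first occurrence of `python` with `python2.7`, so yum keeps running under Python 2 once the next step repoints `/usr/bin/python` to Python 3. The sketch below applies the same `sed` expression to a temporary file instead of the real binary; the sample shebang `#!/usr/bin/python` is an assumption about how `/usr/bin/yum` starts on Amazon Linux, the helper name `pin_python2` is hypothetical, and `sed -i` without a suffix argument is GNU sed syntax (BSD/macOS sed needs `-i ''`).

```shell
#!/usr/bin/env bash
set -euo pipefail

# pin_python2 FILE: on line 1 only, replace the first "python" with "python2.7",
# exactly like the Dockerfile's sed step.
pin_python2() {
  sed -i '1 s/python/python2.7/' "$1"
}

demo="$(mktemp)"
# Assumed shebang; the second line shows that later lines are left alone.
printf '#!/usr/bin/python\nimport yum  # "python" here is untouched\n' > "$demo"
pin_python2 "$demo"
head -n 1 "$demo"   # prints: #!/usr/bin/python2.7
rm -f "$demo"
```

Note that the expression is not idempotent: running it a second time on the same file would turn `python2.7` into `python2.72.7`, so it is only safe as a one-shot step during the image build.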

README.md (564 changes)

````
@@ -10,7 +10,7 @@
<img src="https://user-images.githubusercontent.com/3985464/113734260-7ba06900-96fb-11eb-82bc-d4f68a1e2710.png" />
</p>
<p align="center">
<a href="https://discord.gg/UjSMCVnxSB"><img alt="Discord Shield" src="https://img.shields.io/discord/807208614288818196"></a>
<a href="https://join.slack.com/t/prowler-workspace/shared_invite/zt-1hix76xsl-2uq222JIXrC7Q8It~9ZNog"><img alt="Slack Shield" src="https://img.shields.io/badge/slack-prowler-brightgreen.svg?logo=slack"></a>
<a href="https://hub.docker.com/r/toniblyx/prowler"><img alt="Docker Pulls" src="https://img.shields.io/docker/pulls/toniblyx/prowler"></a>
<a href="https://hub.docker.com/r/toniblyx/prowler"><img alt="Docker" src="https://img.shields.io/docker/cloud/build/toniblyx/prowler"></a>
<a href="https://hub.docker.com/r/toniblyx/prowler"><img alt="Docker" src="https://img.shields.io/docker/image-size/toniblyx/prowler"></a>
````

````
@@ -26,12 +26,13 @@
</p>

<p align="center">
<i>Prowler</i> is an Open Source security tool to perform AWS security best practices assessments, audits, incident response, continuous monitoring, hardening and forensics readiness. It contains more than 200 controls covering CIS, PCI-DSS, ISO27001, GDPR, HIPAA, FFIEC, SOC2, AWS FTR, ENS and custome security frameworks.
<i>Prowler</i> is an Open Source security tool to perform AWS security best practices assessments, audits, incident response, continuous monitoring, hardening and forensics readiness. It contains more than 240 controls covering CIS, PCI-DSS, ISO27001, GDPR, HIPAA, FFIEC, SOC2, AWS FTR, ENS and custom security frameworks.
</p>

## Table of Contents

- [Description](#description)
- [Prowler Container Versions](#prowler-container-versions)
- [Features](#features)
- [High level architecture](#high-level-architecture)
- [Requirements and Installation](#requirements-and-installation)
````

````
@@ -41,6 +42,7 @@
- [Security Hub integration](#security-hub-integration)
- [CodeBuild deployment](#codebuild-deployment)
- [Allowlist](#allowlist-or-remove-a-fail-from-resources)
- [Inventory](#inventory)
- [Fix](#how-to-fix-every-fail)
- [Troubleshooting](#troubleshooting)
- [Extras](#extras)
````

````
@@ -58,21 +60,32 @@

Prowler is a command line tool that helps you with AWS security assessment, auditing, hardening and incident response.

It follows guidelines of the CIS Amazon Web Services Foundations Benchmark (49 checks) and has more than 100 additional checks including related to GDPR, HIPAA, PCI-DSS, ISO-27001, FFIEC, SOC2 and others.
It follows guidelines of the CIS Amazon Web Services Foundations Benchmark (49 checks) and has more than 190 additional checks including related to GDPR, HIPAA, PCI-DSS, ISO-27001, FFIEC, SOC2 and others.

Read more about [CIS Amazon Web Services Foundations Benchmark v1.2.0 - 05-23-2018](https://d0.awsstatic.com/whitepapers/compliance/AWS_CIS_Foundations_Benchmark.pdf)

## Prowler container versions

The available versions of Prowler are the following:
- latest: in sync with master branch (bear in mind that it is not a stable version)
- <x.y.z> (release): you can find the releases [here](https://github.com/prowler-cloud/prowler/releases), those are stable releases.
- stable: this tag always point to the latest release.

The container images are available here:
- [DockerHub](https://hub.docker.com/r/toniblyx/prowler/tags)
- [AWS Public ECR](https://gallery.ecr.aws/o4g1s5r6/prowler)

## Features

+200 checks covering security best practices across all AWS regions and most of AWS services and related to the next groups:
+240 checks covering security best practices across all AWS regions and most of AWS services and related to the next groups:

- Identity and Access Management [group1]
- Logging [group2]
- Monitoring [group3]
- Networking [group4]
- CIS Level 1 [cislevel1]
- CIS Level 2 [cislevel2]
- Extras *see Extras section* [extras]
- Extras _see Extras section_ [extras]
- Forensics related group of checks [forensics-ready]
- GDPR [gdpr] Read more [here](#gdpr-checks)
- HIPAA [hipaa] Read more [here](#hipaa-checks)
````

@@ -87,9 +100,10 @@ With Prowler you can:

|

||||

|

||||

- Get a direct colorful or monochrome report

|

||||

- A HTML, CSV, JUNIT, JSON or JSON ASFF (Security Hub) format report

|

||||

- Send findings directly to Security Hub

|

||||

- Send findings directly to the Security Hub

|

||||

- Run specific checks and groups or create your own

|

||||

- Check multiple AWS accounts in parallel or sequentially

|

||||

- Get an inventory of your AWS resources

|

||||

- And more! Read examples below

|

||||

|

||||

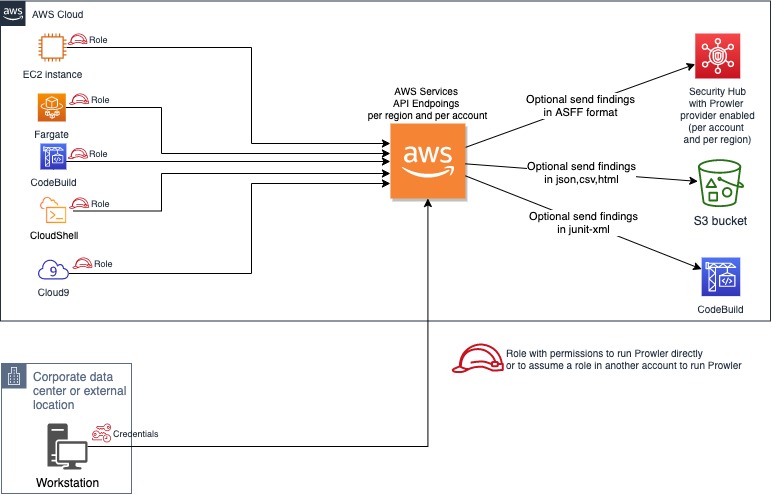

## High level architecture

|

||||

````
@@ -97,6 +111,7 @@ With Prowler you can:
You can run Prowler from your workstation, an EC2 instance, Fargate or any other container, Codebuild, CloudShell and Cloud9.



## Requirements and Installation

Prowler has been written in bash using AWS-CLI underneath and it works in Linux, Mac OS or Windows with cygwin or virtualization. Also requires `jq` and `detect-secrets` to work properly.
````

````
@@ -104,140 +119,143 @@ Prowler has been written in bash using AWS-CLI underneath and it works in Linux,
- Make sure the latest version of AWS-CLI is installed. It works with either v1 or v2, however _latest v2 is recommended if using new regions since they require STS v2 token_, and other components needed, with Python pip already installed.

- For Amazon Linux (`yum` based Linux distributions and AWS CLI v2):
```
sudo yum update -y
sudo yum remove -y awscli
curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
unzip awscliv2.zip
sudo ./aws/install
sudo yum install -y python3 jq git
sudo pip3 install detect-secrets==1.0.3
git clone https://github.com/prowler-cloud/prowler
```
- For Ubuntu Linux (`apt` based Linux distributions and AWS CLI v2):
```
sudo apt update
sudo apt install python3 python3-pip jq git zip
pip install detect-secrets==1.0.3
curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
unzip awscliv2.zip
sudo ./aws/install
git clone https://github.com/prowler-cloud/prowler
```

> NOTE: detect-secrets Yelp version is no longer supported, the one from IBM is mantained now. Use the one mentioned below or the specific Yelp version 1.0.3 to make sure it works as expected (`pip install detect-secrets==1.0.3`):

```sh
pip install "git+https://github.com/ibm/detect-secrets.git@master#egg=detect-secrets"
```

AWS-CLI can be also installed it using other methods, refer to official documentation for more details: <https://aws.amazon.com/cli/>, but `detect-secrets` has to be installed using `pip` or `pip3`.

- Once Prowler repository is cloned, get into the folder and you can run it:

```sh
cd prowler
./prowler
```
````
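
The installation steps above come down to a few executables that must be on the `PATH` before `./prowler` will work: `aws`, `jq` and `detect-secrets`. A minimal pre-flight check is sketched below; the helper name `missing_tools` is hypothetical, and it only tests that each command exists, not its version (for example it cannot tell AWS CLI v1 from v2).

```shell
#!/usr/bin/env bash
set -euo pipefail

# missing_tools CMD...: print every command that is not found on PATH.
# Returns 1 if anything is missing, 0 otherwise.
missing_tools() {
  local tool status=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
      status=1
    fi
  done
  return "$status"
}

if missing="$(missing_tools aws jq detect-secrets)"; then
  echo "all prerequisites found"
else
  echo "install before running ./prowler: $(echo "$missing" | tr '\n' ' ')"
fi
```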

````
- Since Prowler users AWS CLI under the hood, you can follow any authentication method as described [here](https://docs.aws.amazon.com/cli/latest/userguide/cli-configure-quickstart.html#cli-configure-quickstart-precedence). Make sure you have properly configured your AWS-CLI with a valid Access Key and Region or declare AWS variables properly (or instance profile/role):

```sh
aws configure
```

or

```sh
export AWS_ACCESS_KEY_ID="ASXXXXXXX"
export AWS_SECRET_ACCESS_KEY="XXXXXXXXX"
export AWS_SESSION_TOKEN="XXXXXXXXX"
```
````
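
The README's `docker run` examples forward `AWS_ACCESS_KEY_ID`, `AWS_SECRET_ACCESS_KEY` and `AWS_SESSION_TOKEN` with `--env NAME` and no value, which passes each variable only if it is set on the host; an unset variable is silently left out of the container. A small pre-flight check can catch that first. This is a sketch: the function name `require_aws_env` is hypothetical, and treating `AWS_SESSION_TOKEN` as optional is an assumption (it is needed only for temporary credentials).

```shell
#!/usr/bin/env bash
set -euo pipefail

# require_aws_env: succeed only if the static credential variables are set and non-empty.
# AWS_SESSION_TOKEN is treated as optional (needed only for temporary credentials).
require_aws_env() {
  local missing=0 var
  for var in AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY; do
    if [ -z "${!var:-}" ]; then
      echo "missing: $var" >&2
      missing=1
    fi
  done
  if [ -z "${AWS_SESSION_TOKEN:-}" ]; then
    echo "note: AWS_SESSION_TOKEN is not set (fine for long-term keys)" >&2
  fi
  return "$missing"
}

# Usage: only start the container when the check passes.
if require_aws_env; then
  echo "credentials found in environment"
else
  echo "set the variables listed above before running the container" >&2
fi
```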

````
- Those credentials must be associated to a user or role with proper permissions to do all checks. To make sure, add the AWS managed policies, SecurityAudit and ViewOnlyAccess, to the user or role being used. Policy ARNs are:

```sh
arn:aws:iam::aws:policy/SecurityAudit
arn:aws:iam::aws:policy/job-function/ViewOnlyAccess
```

> Additional permissions needed: to make sure Prowler can scan all services included in the group *Extras*, make sure you attach also the custom policy [prowler-additions-policy.json](https://github.com/prowler-cloud/prowler/blob/master/iam/prowler-additions-policy.json) to the role you are using. If you want Prowler to send findings to [AWS Security Hub](https://aws.amazon.com/security-hub), make sure you also attach the custom policy [prowler-security-hub.json](https://github.com/prowler-cloud/prowler/blob/master/iam/prowler-security-hub.json).
> Additional permissions needed: to make sure Prowler can scan all services included in the group _Extras_, make sure you attach also the custom policy [prowler-additions-policy.json](https://github.com/prowler-cloud/prowler/blob/master/iam/prowler-additions-policy.json) to the role you are using. If you want Prowler to send findings to [AWS Security Hub](https://aws.amazon.com/security-hub), make sure you also attach the custom policy [prowler-security-hub.json](https://github.com/prowler-cloud/prowler/blob/master/iam/prowler-security-hub.json).
````
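
The two managed policies listed above can be attached to an existing IAM role with the AWS CLI command `aws iam attach-role-policy`. The sketch below only prints the commands (a dry run) so they can be reviewed before anything changes; the role name `prowler-audit` and the helper `print_attach_commands` are placeholders, and the custom `prowler-additions-policy.json` and `prowler-security-hub.json` policies would still have to be created and attached separately.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Managed policies the README asks for.
PROWLER_POLICY_ARNS=(
  "arn:aws:iam::aws:policy/SecurityAudit"
  "arn:aws:iam::aws:policy/job-function/ViewOnlyAccess"
)

# print_attach_commands ROLE_NAME: print (do not run) one attach command per policy.
print_attach_commands() {
  local role="$1" arn
  for arn in "${PROWLER_POLICY_ARNS[@]}"; do
    printf 'aws iam attach-role-policy --role-name %s --policy-arn %s\n' "$role" "$arn"
  done
}

# Hypothetical role name; review the output, then pipe it to "sh" to apply it.
print_attach_commands "prowler-audit"
```

For an IAM user instead of a role, the equivalent command is `aws iam attach-user-policy --user-name <user> --policy-arn <arn>`.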

````
## Usage

1. Run the `prowler` command without options (it will use your environment variable credentials if they exist or will default to using the `~/.aws/credentials` file and run checks over all regions when needed. The default region is us-east-1):

```sh
./prowler
```

Use `-l` to list all available checks and the groups (sections) that reference them. To list all groups use `-L` and to list content of a group use `-l -g <groupname>`.

If you want to avoid installing dependencies run it using Docker:

```sh
docker run -ti --rm --name prowler --env AWS_ACCESS_KEY_ID --env AWS_SECRET_ACCESS_KEY --env AWS_SESSION_TOKEN toniblyx/prowler:latest
```

In case you want to get reports created by Prowler use docker volume option like in the example below:

```sh
docker run -ti --rm -v /your/local/output:/prowler/output --name prowler --env AWS_ACCESS_KEY_ID --env AWS_SECRET_ACCESS_KEY --env AWS_SESSION_TOKEN toniblyx/prowler:latest -g hipaa -M csv,json,html
```

1. For custom AWS-CLI profile and region, use the following: (it will use your custom profile and run checks over all regions when needed):

```sh
./prowler -p custom-profile -r us-east-1
```

1. For a single check use option `-c`:

```sh
./prowler -c check310
```

With Docker:

```sh
docker run -ti --rm --name prowler --env AWS_ACCESS_KEY_ID --env AWS_SECRET_ACCESS_KEY --env AWS_SESSION_TOKEN toniblyx/prowler:latest "-c check310"
```

or multiple checks separated by comma:

```sh
./prowler -c check310,check722
```

or all checks but some of them:

```sh
./prowler -E check42,check43
```

or for custom profile and region:

```sh
./prowler -p custom-profile -r us-east-1 -c check11
```

or for a group of checks use group name:

```sh
./prowler -g group1 # for iam related checks
```

or exclude some checks in the group:

```sh
./prowler -g group4 -E check42,check43
```

Valid check numbers are based on the AWS CIS Benchmark guide, so 1.1 is check11 and 3.10 is check310
````
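
The check-naming rule stated at the end of the usage section (CIS control 1.1 is `check11`, 3.10 is `check310`) is mechanical: drop the dot and prefix `check`. The sketch below applies it, which can help when building a `-c` list from CIS control numbers; both function names are hypothetical helpers, not Prowler options, and the rule only covers CIS-numbered checks (extras such as `check722` do not map to a CIS control).

```shell
#!/usr/bin/env bash
set -euo pipefail

# cis_to_check CONTROL: "3.10" -> "check310" (section and control joined, dot removed).
cis_to_check() {
  local control="$1"
  if [[ ! "$control" =~ ^[0-9]+\.[0-9]+$ ]]; then
    echo "cis_to_check: expected N.M, got '$control'" >&2
    return 1
  fi
  echo "check${control/./}"
}

# cis_list_to_checks CONTROL...: comma-joined check IDs, suitable for "./prowler -c".
cis_list_to_checks() {
  local out=() c
  for c in "$@"; do
    out+=("$(cis_to_check "$c")")
  done
  local IFS=,
  echo "${out[*]}"
}

# Example: ./prowler -c "$(cis_list_to_checks 1.1 3.10)"
cis_list_to_checks 1.1 3.10   # prints: check11,check310
```

The mapping is not reversible in general (1.11 and 11.1 would both become `check111`), which does not matter for the four-section CIS v1.2.0 numbering referenced in this README.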

````
### Regions

By default, Prowler scans all opt-in regions available, that might take a long execution time depending on the number of resources and regions used. Same applies for GovCloud or China regions. See below Advance usage for examples.

Prowler has two parameters related to regions: `-r` that is used query AWS services API endpoints (it uses `us-east-1` by default and required for GovCloud or China) and the option `-f` that is to filter those regions you only want to scan. For example if you want to scan Dublin only use `-f eu-west-1` and if you want to scan Dublin and Ohio `-f eu-west-1,us-east-1`, note the regions are separated by a comma deliminator (it can be used as before with `-f 'eu-west-1,us-east-1'`).
Prowler has two parameters related to regions: `-r` that is used query AWS services API endpoints (it uses `us-east-1` by default and required for GovCloud or China) and the option `-f` that is to filter those regions you only want to scan. For example if you want to scan Dublin only use `-f eu-west-1` and if you want to scan Dublin and Ohio `-f eu-west-1,us-east-1`, note the regions are separated by a comma delimiter (it can be used as before with `-f 'eu-west-1,us-east-1'`).
````

|

||||
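
If the list of regions lives in a script, it can be joined into the comma-separated value that `-f` expects. A sketch in POSIX `sh`; `join_regions` is a hypothetical helper and the resulting command is only printed, not run:

```sh
#!/bin/sh
# Hypothetical helper: join its arguments with commas, the separator -f expects.
join_regions() {
  old_ifs=$IFS
  IFS=,
  printf '%s\n' "$*"   # "$*" joins the arguments with the first character of IFS
  IFS=$old_ifs
}

REGIONS=$(join_regions eu-west-1 us-east-2)
# -r selects the endpoint used for API queries; -f limits the scan to the listed regions
echo "./prowler -r us-east-1 -f $REGIONS"
```

Joining through `"$*"` avoids the trailing comma a naive loop tends to leave behind.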

## Screenshots

@@ -261,81 +279,256 @@ Prowler has two parameters related to regions: `-r` that is used query AWS servi

1. If you want to save your report for later analysis, there are different ways. Natively (supported formats are text, mono, csv, json, json-asff, junit-xml and html; see the note below for more info):

```sh
./prowler -M csv
```

or with multiple formats at the same time:

```sh
./prowler -M csv,json,json-asff,html
```

or just a group of checks in multiple formats:

```sh
./prowler -g gdpr -M csv,json,json-asff
```

or, if you want a sorted and dynamic HTML report:

```sh
./prowler -M html
```

Now `-M` creates a file inside the Prowler `output` directory named `prowler-output-AWSACCOUNTID-YYYYMMDDHHMMSS.format`. You don't have to specify anything else: no pipes, no redirects.

or just save the output to a file like below:

```sh
./prowler -M mono > prowler-report.txt
```

To generate JUnit report files, include the junit-xml format. This can be combined with any other format. Files are written to a directory named `junit-reports` in the Prowler root directory:

```sh
./prowler -M text,junit-xml
```

> Note about output formats to use with `-M`: "text" is the default one, with colors; "mono" is like the default one but monochrome; "csv" is comma-separated values; "json" is plain basic JSON (without commas between lines); and "json-asff" is also JSON, in the Amazon Security Finding Format, which you can ship to Security Hub using `-S`.
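
Because `-M` names its files `prowler-output-AWSACCOUNTID-YYYYMMDDHHMMSS.format`, the latest report for an account can be picked by name alone. A sketch assuming that naming pattern; `latest_report` is a hypothetical helper and the demo uses empty placeholder files instead of real reports:

```sh
#!/bin/sh
# Hypothetical helper: newest report of one format for one account in a directory.
# The timestamp in the name is fixed-width, so for a single account a lexical sort
# is also a chronological sort.
latest_report() {
  dir=$1 account=$2 ext=$3
  ls "$dir"/prowler-output-"$account"-*."$ext" 2>/dev/null | sort | tail -n 1
}

# Demo with empty placeholder files
demo_dir=$(mktemp -d)
touch "$demo_dir/prowler-output-111111111111-20220518103348.csv" \
      "$demo_dir/prowler-output-111111111111-20220601090000.csv" \
      "$demo_dir/prowler-output-111111111111-20220601090000.json"

latest_report "$demo_dir" 111111111111 csv
```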

To save your report in an S3 bucket, use `-B` to define a custom output bucket along with `-M` to define the output format that is going to be uploaded to S3:

```sh
./prowler -M csv -B my-bucket/folder/
```

> If you do not want to use the assumed role credentials but the initial credentials to put the reports into the S3 bucket, use `-D` instead of `-B`. Make sure that the credentials used have `s3:PutObject` permission on the S3 path where the reports are going to be uploaded.

When generating multiple formats and running with Docker, bind a local directory to the container to retrieve the reports, e.g.:

```sh
docker run -ti --rm --name prowler --volume "$(pwd)":/prowler/output --env AWS_ACCESS_KEY_ID --env AWS_SECRET_ACCESS_KEY --env AWS_SESSION_TOKEN toniblyx/prowler:latest -M csv,json
```

1. To perform an assessment based on CIS Profile Definitions, use `cislevel1` or `cislevel2` with the `-g` flag (more information [here, page 8](https://d0.awsstatic.com/whitepapers/compliance/AWS_CIS_Foundations_Benchmark.pdf)):

```sh
./prowler -g cislevel1
```

1. If you want to run Prowler against multiple AWS accounts in parallel (up to 4 at a time with `-P 4`), you can use the command below; to do it by assuming a role instead, see the Advanced Usage section:

```sh
grep -E '^\[([0-9A-Za-z_-]+)\]' ~/.aws/credentials | tr -d '][' | shuf | \
xargs -I @ -r -P 4 ./prowler -p @ -M csv 2> /dev/null >> all-accounts.csv
```
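
To check which profile names the pipeline above hands to `xargs`, the extraction step can be run on its own. A sketch against a throwaway file with placeholder profiles, so `~/.aws/credentials` is not read; `list_profiles` is a hypothetical wrapper around the same `grep`/`tr` pair:

```sh
#!/bin/sh
# Sketch: the profile-extraction part of the pipeline, run against a sample file.
creds=$(mktemp)
trap 'rm -f "$creds"' EXIT

cat > "$creds" <<'EOF'
[default]
aws_access_key_id = EXAMPLEKEY
[audit-prod]
aws_access_key_id = EXAMPLEKEY
[audit_dev2]
aws_access_key_id = EXAMPLEKEY
EOF

# Keep the [section] header lines, then strip the brackets
list_profiles() {
  grep -E '^\[([0-9A-Za-z_-]+)\]' "$1" | tr -d ']['
}

list_profiles "$creds"
```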

1. For help about usage run:

```
./prowler -h
```

## Database providers connector

You can send Prowler's output to different databases (right now only PostgreSQL is supported).

Jump into the section for the database provider you want to use and follow the required steps to configure it.

### PostgreSQL

Install psql:

- Mac -> `brew install libpq`
- Ubuntu -> `sudo apt-get install postgresql-client`
- RHEL/CentOS -> `sudo yum install postgresql10`

#### Audit ID Field

Prowler can add an optional `audit_id` field to identify each audit stored in the database. To do so, add the `-u audit_id` flag to the Prowler command.
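
Since the `audit_id` column in the table definition of this section is declared as `uuid`, a fresh UUID per run looks like the natural value for `-u`; that requirement is an inference from the column type, not something stated here. A sketch that generates one with `uuidgen`, falling back to the Linux kernel's random UUID file; `new_audit_id` is hypothetical and the command is only printed:

```sh
#!/bin/sh
# Sketch: generate a lowercase UUID to pass as the audit ID with -u.
# Assumption: the value must be a valid UUID because audit_id is declared as uuid.
new_audit_id() {
  if command -v uuidgen >/dev/null 2>&1; then
    uuidgen | tr 'A-F' 'a-f'            # macOS prints uppercase; normalize it
  else
    cat /proc/sys/kernel/random/uuid    # Linux-only fallback
  fi
}

AUDIT_ID=$(new_audit_id)
# Printed instead of executed
echo "./prowler -d postgresql -u $AUDIT_ID"
```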

#### Credentials

There are two options to pass the PostgreSQL credentials to Prowler:

##### Using a .pgpass file

Configure a `~/.pgpass` file in the home directory of the user that is going to launch Prowler ([pgpass file doc](https://www.postgresql.org/docs/current/libpq-pgpass.html)), adding an extra field at the end of the line, separated by `:`, to name the table, using the following format:

`hostname:port:database:username:password:table`
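
A sketch of creating such an entry, with placeholder values throughout and a temporary path standing in for `~/.pgpass`. The permission step follows libpq's documented rule that a password file accessible by group or others is ignored:

```sh
#!/bin/sh
# Sketch: write a .pgpass line with Prowler's extra sixth field (the table name).
PGPASS_FILE="$(mktemp -d)/.pgpass"   # stands in for ~/.pgpass

# Format: hostname:port:database:username:password:table
# (in the standard libpq fields, a literal ':' or '\' must be escaped with '\')
printf '%s:%s:%s:%s:%s:%s\n' \
  db.example.internal 5432 prowler prowler_user 'S3cretPass' prowler_findings \
  > "$PGPASS_FILE"

# libpq ignores a password file that group or others can access
chmod 0600 "$PGPASS_FILE"
cat "$PGPASS_FILE"
```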

##### Using environment variables

- Configure the following environment variables:
  - `POSTGRES_HOST`
  - `POSTGRES_PORT`
  - `POSTGRES_USER`
  - `POSTGRES_PASSWORD`
  - `POSTGRES_DB`
  - `POSTGRES_TABLE`

> _Note_: If you are using a schema other than the default one, include it at the beginning of the `POSTGRES_TABLE` variable, like: `export POSTGRES_TABLE=prowler.findings`
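
A sketch of that configuration with placeholder values, including the `schema.table` form from the note, plus a small check that nothing was left empty. The check loop is an illustrative addition, not something Prowler requires:

```sh
#!/bin/sh
# Sketch: environment-variable configuration for the PostgreSQL connector (placeholders).
export POSTGRES_HOST=db.example.internal
export POSTGRES_PORT=5432
export POSTGRES_USER=prowler_user
export POSTGRES_PASSWORD='S3cretPass'
export POSTGRES_DB=prowler
export POSTGRES_TABLE=prowler.findings   # schema.table form

# Stop early if any variable is missing or empty, naming the offending one
for var in POSTGRES_HOST POSTGRES_PORT POSTGRES_USER POSTGRES_PASSWORD POSTGRES_DB POSTGRES_TABLE; do
  eval "val=\${$var:-}"
  if [ -z "$val" ]; then
    echo "missing $var" >&2
    exit 1
  fi
done
echo "PostgreSQL settings look complete"
```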

You also need to enable the `uuid-ossp` PostgreSQL extension:

`CREATE EXTENSION IF NOT EXISTS "uuid-ossp";`

Create a table in your PostgreSQL database to store Prowler's data. You can use the following SQL statement to create the table:

```sql
CREATE TABLE IF NOT EXISTS prowler_findings (
  id uuid,
  audit_id uuid,
  profile text,
  account_number text,
  region text,
  check_id text,
  result text,
  item_scored text,
  item_level text,
  check_title text,
  result_extended text,
  check_asff_compliance_type text,
  severity text,
  service_name text,
  check_asff_resource_type text,
  check_asff_type text,
  risk text,
  remediation text,
  documentation text,
  check_caf_epic text,
  resource_id text,
  account_details_email text,
  account_details_name text,
  account_details_arn text,
  account_details_org text,
  account_details_tags text,
  prowler_start_time text
);
```

- Execute Prowler with the `-d` flag, for example:

`./prowler -M csv -d postgresql`

> _Note_: This command creates a `csv` output file and stores the Prowler output in the configured PostgreSQL DB. It's just an example: the `-d` flag **does not** require `-M` to run.

## Output Formats

Prowler natively supports the following output formats:

- CSV
- JSON
- JSON-ASFF
- HTML
- JUNIT-XML

The structure of the CSV, JSON and JSON-ASFF outputs is shown below:

### CSV

| PROFILE | ACCOUNT_NUM | REGION | TITLE_ID | CHECK_RESULT | ITEM_SCORED | ITEM_LEVEL | TITLE_TEXT | CHECK_RESULT_EXTENDED | CHECK_ASFF_COMPLIANCE_TYPE | CHECK_SEVERITY | CHECK_SERVICENAME | CHECK_ASFF_RESOURCE_TYPE | CHECK_ASFF_TYPE | CHECK_RISK | CHECK_REMEDIATION | CHECK_DOC | CHECK_CAF_EPIC | CHECK_RESOURCE_ID | PROWLER_START_TIME | ACCOUNT_DETAILS_EMAIL | ACCOUNT_DETAILS_NAME | ACCOUNT_DETAILS_ARN | ACCOUNT_DETAILS_ORG | ACCOUNT_DETAILS_TAGS |
| ------- | ----------- | ------ | -------- | ------------ | ----------- | ---------- | ---------- | --------------------- | -------------------------- | -------------- | ----------------- | ------------------------ | --------------- | ---------- | ----------------- | --------- | -------------- | ----------------- | ------------------ | --------------------- | -------------------- | ------------------- | ------------------- | -------------------- |
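
Given the column names above, one column of a report can be pulled out by its header name. A sketch assuming no field value contains a comma (it is a plain split, not a full CSV parser); `csv_column` is hypothetical and the sample report uses only a few of the real columns:

```sh
#!/bin/sh
# Hypothetical helper: print one column of a CSV file, selected by header name.
csv_column() {
  awk -F, -v name="$2" '
    NR == 1 { for (i = 1; i <= NF; i++) if ($i == name) col = i; next }
    col     { print $col }
  ' "$1"
}

# Hypothetical report containing only a few of the real columns
report=$(mktemp)
cat > "$report" <<'EOF'
PROFILE,ACCOUNT_NUM,REGION,CHECK_RESULT
ENV,111111111111,us-west-2,FAIL
ENV,111111111111,eu-west-1,PASS
ENV,111111111111,eu-west-1,FAIL
EOF

# Count findings per result value
csv_column "$report" CHECK_RESULT | sort | uniq -c
```

An unknown column name prints nothing, because `col` is never set.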

### JSON

```json
{
  "Profile": "ENV",
  "Account Number": "1111111111111",
  "Control": "[check14] Ensure access keys are rotated every 90 days or less",
  "Message": "us-west-2: user has not rotated access key 2 in over 90 days",
  "Severity": "Medium",
  "Status": "FAIL",
  "Scored": "",
  "Level": "CIS Level 1",
  "Control ID": "1.4",
  "Region": "us-west-2",
  "Timestamp": "2022-05-18T10:33:48Z",
  "Compliance": "ens-op.acc.1.aws.iam.4 ens-op.acc.5.aws.iam.3",
  "Service": "iam",
  "CAF Epic": "IAM",
  "Risk": "Access keys consist of an access key ID and secret access key which are used to sign programmatic requests that you make to AWS. AWS users need their own access keys to make programmatic calls to AWS from the AWS Command Line Interface (AWS CLI)- Tools for Windows PowerShell- the AWS SDKs- or direct HTTP calls using the APIs for individual AWS services. It is recommended that all access keys be regularly rotated.",
  "Remediation": "Use the credential report to ensure access_key_X_last_rotated is less than 90 days ago.",
  "Doc link": "https://docs.aws.amazon.com/IAM/latest/UserGuide/id_credentials_getting-report.html",
  "Resource ID": "terraform-user",
  "Account Email": "",
  "Account Name": "",
  "Account ARN": "",
  "Account Organization": "",
  "Account tags": ""
}
```

> NOTE: Each finding is a `json` object.

### JSON-ASFF

```json
{
  "SchemaVersion": "2018-10-08",
  "Id": "prowler-1.4-1111111111111-us-west-2-us-west-2_user_has_not_rotated_access_key_2_in_over_90_days",
  "ProductArn": "arn:aws:securityhub:us-west-2::product/prowler/prowler",
  "RecordState": "ACTIVE",
  "ProductFields": {
    "ProviderName": "Prowler",
    "ProviderVersion": "2.9.0-13April2022",
    "ProwlerResourceName": "user"
  },
  "GeneratorId": "prowler-check14",
  "AwsAccountId": "1111111111111",
  "Types": [
    "ens-op.acc.1.aws.iam.4 ens-op.acc.5.aws.iam.3"
  ],
  "FirstObservedAt": "2022-05-18T10:33:48Z",
  "UpdatedAt": "2022-05-18T10:33:48Z",
  "CreatedAt": "2022-05-18T10:33:48Z",
  "Severity": {
    "Label": "MEDIUM"
  },
  "Title": "iam.[check14] Ensure access keys are rotated every 90 days or less",
  "Description": "us-west-2: user has not rotated access key 2 in over 90 days",
  "Resources": [
    {
      "Type": "AwsIamUser",
      "Id": "user",
      "Partition": "aws",
      "Region": "us-west-2"
    }
  ],
  "Compliance": {
    "Status": "FAILED",
    "RelatedRequirements": [
      "ens-op.acc.1.aws.iam.4 ens-op.acc.5.aws.iam.3"
    ]
  }
}
```

> NOTE: Each finding is a `json` object.

## Advanced Usage

### Assume Role:

Prowler uses the AWS CLI underneath, so it supports the same authentication methods. However, there are a few ways to run Prowler against multiple accounts using the IAM Assume Role feature, depending on each use case. You can just set up your custom profile inside `~/.aws/config` with all the needed information about the role to assume, then call it with `./prowler -p your-custom-profile`. Additionally, you can use `-A 123456789012` and `-R RemoteRoleToAssume` and Prowler will get those temporary credentials using `aws sts assume-role`, set them up as environment variables and run against that given account. To create a role to assume in multiple accounts more easily, either as a CFN Stack or StackSet, look at [this CloudFormation template](iam/create_role_to_assume_cfn.yaml) and adapt it.

```sh
./prowler -A 123456789012 -R ProwlerRole

@@ -345,16 +538,18 @@ Prowler uses the AWS CLI underneath so it uses the same authentication methods.

./prowler -A 123456789012 -R ProwlerRole -I 123456
```
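
The first option described above, a custom profile in `~/.aws/config`, could look like the sketch below. Account ID, role name and `source_profile` are placeholders; `role_arn`, `source_profile` and `duration_seconds` are standard AWS CLI config settings, and `AWS_CONFIG_FILE` points the AWS CLI at a temporary file so the real config is untouched. It assumes Prowler resolves the profile through the AWS CLI, as stated above:

```sh
#!/bin/sh
# Sketch: a named profile that assumes a role, written to a temporary config file.
AWS_CONFIG_FILE=$(mktemp)
export AWS_CONFIG_FILE

cat > "$AWS_CONFIG_FILE" <<'EOF'
[profile your-custom-profile]
role_arn = arn:aws:iam::123456789012:role/RemoteRoleToAssume
source_profile = default
duration_seconds = 3600
EOF

# Printed rather than run, since the values above are placeholders
echo "./prowler -p your-custom-profile"
```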

> _NOTE 1 about Session Duration_: By default it gets credentials valid for 1 hour (3600 seconds). Depending on the number of checks you run and the size of your infrastructure, Prowler may require more than 1 hour to finish. Use option `-T <seconds>` to allow up to 12h (43200 seconds). To allow more than 1h you need to modify _"Maximum CLI/API session duration"_ for that particular role, read more [here](https://docs.aws.amazon.com/IAM/latest/UserGuide/id_roles_use.html#id_roles_use_view-role-max-session).

> _NOTE 2 about Session Duration_: Bear in mind that if you are using roles assumed by role chaining there is a hard limit of 1 hour, so consider not using role chaining if possible; read more about that in footnote 1 below the table [here](https://docs.aws.amazon.com/IAM/latest/UserGuide/id_roles_use.html).
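
The limits in the notes above can be checked before starting a long run. A sketch in POSIX `sh`; `session_seconds` is a hypothetical helper that converts whole hours to seconds, rejects values outside the 900 to 43200 second range that STS AssumeRole accepts, and prints the resulting command instead of running it:

```sh
#!/bin/sh
# Hypothetical helper: whole hours -> seconds for -T, with a range check.
# The role's "Maximum CLI/API session duration" must also be at least this long,
# and role chaining caps the session at 1 hour regardless.
session_seconds() {
  secs=$(( $1 * 3600 ))
  if [ "$secs" -lt 900 ] || [ "$secs" -gt 43200 ]; then
    echo "a ${1}h session is outside the 900-43200 second range" >&2
    return 1
  fi
  echo "$secs"
}

T=$(session_seconds 4) && echo "./prowler -A 123456789012 -R RemoteRoleToAssume -T $T"
```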

For example, to get only the FAIL findings in CSV format from all RDS-related checks, without the banner, from AWS account 123456789012, assuming the role RemoteRoleToAssume with a fixed session duration of 1h:

```sh
./prowler -A 123456789012 -R RemoteRoleToAssume -T 3600 -b -M csv -q -g rds
```

or with a given External ID:

```sh
./prowler -A 123456789012 -R RemoteRoleToAssume -T 3600 -I 123456 -b -M csv -q -g rds
```

@@ -364,22 +559,28 @@ or with a given External ID:

If you want to run Prowler, or just a check or a group, across all accounts of an AWS Organization, you can do this:

First get a list of accounts that are not suspended:

```
ACCOUNTS_IN_ORGS=$(aws organizations list-accounts --query 'Accounts[?Status==`ACTIVE`].Id' --output text)
```

Then run Prowler assuming a role (the same one in all member accounts) in each account; this example runs just one particular check:

```
for accountId in $ACCOUNTS_IN_ORGS; do ./prowler -A "$accountId" -R RemoteRoleToAssume -c extra79; done
```

The same for loop can be used to scan a list of accounts stored in a variable like `ACCOUNTS_LIST='11111111111 2222222222 333333333'`.
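
A dry-run version of that loop lets you review the exact commands before scanning. A sketch using the placeholder account IDs above; `DRY_RUN` and `scan_accounts` are hypothetical names, and with `DRY_RUN=1` nothing is executed:

```sh
#!/bin/sh
# Sketch: the same loop over an explicit ACCOUNTS_LIST, with a dry-run switch.
ACCOUNTS_LIST='11111111111 2222222222 333333333'
DRY_RUN=1

scan_accounts() {
  for accountId in $ACCOUNTS_LIST; do   # left unquoted on purpose: split on spaces
    if [ "$DRY_RUN" = 1 ]; then
      echo "./prowler -A $accountId -R RemoteRoleToAssume -c extra79"
    else
      ./prowler -A "$accountId" -R RemoteRoleToAssume -c extra79
    fi
  done
}

scan_accounts
```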

### Get AWS Account details from your AWS Organization:

From Prowler v2.8, you can get additional information about the scanned account in CSV and JSON outputs. When scanning a single account you get the Account ID as part of the output. Now, if you have AWS Organizations and are scanning multiple accounts using the assume role functionality, Prowler can get your account details like Account Name, Email, ARN, Organization ID and Tags, and you will have them next to every finding in the CSV and JSON outputs.

To do that, use the new option `-O <management account id>`, which requires `-R <role to assume>` and also needs the `organizations:ListAccounts*` and `organizations:ListTagsForResource` permissions. See the following sample command:

```
./prowler -R ProwlerScanRole -A 111111111111 -O 222222222222 -M json,csv
```

In that command, Prowler scans the account `111111111111` assuming the role `ProwlerScanRole`, gets the account details from the AWS Organizations management account `222222222222` assuming the same role `ProwlerScanRole`, and creates two reports with those details, in JSON and CSV.

In the JSON output below (redacted) you can see the tags encoded in base64, which keeps them from breaking the CSV or JSON format:

@@ -391,6 +592,7 @@ In the JSON output below (redacted) you can see tags coded in base64 to prevent

"Account Organization": "o-abcde1234",
"Account tags": "\"eyJUYWdzIjpasf0=\""
```
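
The tags value is plain base64 (the sample wraps it in escaped quotes), so standard tools can decode it; the redacted sample's first characters, `eyJUYWdzIjp`, decode to `{"Tags":`. A round-trip sketch with GNU coreutils `base64`; the tags JSON is hypothetical and its exact shape beyond the `Tags` key is an assumption:

```sh
#!/bin/sh
# Sketch: encode and decode a tags document the way the "Account tags" field carries it.
tags_json='{"Tags":[{"Key":"env","Value":"prod"}]}'   # hypothetical tags

encoded=$(printf '%s' "$tags_json" | base64 | tr -d '\n')
decoded=$(printf '%s' "$encoded" | base64 -d)

echo "encoded: $encoded"
echo "decoded: $decoded"
```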

The additional fields in the CSV header output are as follows:

```csv

@@ -400,9 +602,11 @@ ACCOUNT_DETAILS_EMAIL,ACCOUNT_DETAILS_NAME,ACCOUNT_DETAILS_ARN,ACCOUNT_DETAILS_O

### GovCloud

Prowler runs in GovCloud regions as well. To make sure it points to the right API endpoint, use `-r` with either `us-gov-west-1` or `us-gov-east-1`. If no region filter is used, it will look for resources in both GovCloud regions by default:

```sh
./prowler -r us-gov-west-1
```

> For Security Hub integration, see the Security Hub section below.

### Custom folder for custom checks

@@ -410,11 +614,12 @@ Prowler runs in GovCloud regions as well. To make sure it points to the right AP

Flag `-x /my/own/checks` will include any check in that particular directory (file names must start with `check`). To see how to write checks, see the [Add Custom Checks](#add-custom-checks) section.

S3 URIs are also supported as custom folders for custom checks, e.g. `s3://bucket/prefix/checks`. Prowler will download the folder locally and run the checks as they are called with default execution, `-c` or `-g`.

> Make sure that the credentials used have `s3:GetObject` permission on the S3 path where the custom checks are located.
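
Before pointing `-x` at a folder, it can help to see which files match the `check*` naming rule. A sketch with a hypothetical folder and file names; `list_custom_checks` only lists files and runs nothing:

```sh
#!/bin/sh
# Hypothetical helper: list files directly inside a folder whose names start with "check".
list_custom_checks() {
  find "$1" -maxdepth 1 -type f -name 'check*' | sort
}

checks_dir=$(mktemp -d)
touch "$checks_dir/check_custom_s3_tags" "$checks_dir/check_custom_iam" "$checks_dir/README.md"

list_custom_checks "$checks_dir"    # README.md is not listed
echo "./prowler -x $checks_dir"
```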

### Show or log only FAILs

To remove noise and get only FAIL findings, use the `-q` flag, which makes Prowler show and log only FAILs. It can be combined with any other option. It will still show WARNINGs when a resource is excluded, so take that into consideration.

@@ -432,34 +637,39 @@ Sets the entropy limit for high entropy hex strings from environment variable `H

export BASE64_LIMIT=4.5
export HEX_LIMIT=3.0
```

### Run Prowler using AWS CloudShell

An easy way to run Prowler to scan your account is using AWS CloudShell. Read more and learn how to do it [here](util/cloudshell/README.md).

## Security Hub integration

Since October 30th 2020 (version v2.3RC5), Prowler natively supports, as an **official integration**, sending findings to [AWS Security Hub](https://aws.amazon.com/security-hub). This integration allows Prowler to import its findings into AWS Security Hub. With Security Hub, you now have a single place that aggregates, organizes, and prioritizes your security alerts, or findings, from multiple AWS services, such as Amazon GuardDuty, Amazon Inspector, Amazon Macie, AWS Identity and Access Management (IAM) Access Analyzer, and AWS Firewall Manager, as well as from AWS Partner solutions and from Prowler for free.

Before sending findings from Prowler to Security Hub, you need to perform the following steps:

1. Since Security Hub is a region-based service, enable it in the region or regions you require. Use the AWS Management Console or, if you have enough permissions, the AWS CLI with this command:
   - `aws securityhub enable-security-hub --region <region>`.
2. Enable Prowler as a partner integration. Use the AWS Management Console or, if you have enough permissions, the AWS CLI with this command:
   - `aws securityhub enable-import-findings-for-product --region <region> --product-arn arn:aws:securityhub:<region>::product/prowler/prowler` (change the region inside the ARN too).
   - Using the AWS Management Console:
3. As mentioned in section "Custom IAM Policy", to allow Prowler to import its findings into AWS Security Hub you need to add the policy below to the role or user running Prowler:
   - [iam/prowler-security-hub.json](iam/prowler-security-hub.json)
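
The CLI commands from steps 1 and 2 can be repeated for several regions with a small loop. A sketch with example regions; `DRY_RUN`, `run` and `enable_prowler_in_hub` are hypothetical names, and with `DRY_RUN=1` the commands are only printed. `enable-security-hub` may return an error for a region where Security Hub is already enabled, and the IAM policy from step 3 is still needed:

```sh
#!/bin/sh
# Sketch: enable Security Hub and the Prowler integration in several regions.
DRY_RUN=1

run() {
  if [ "$DRY_RUN" = 1 ]; then echo "$*"; else "$@"; fi
}

enable_prowler_in_hub() {
  for region in "$@"; do
    run aws securityhub enable-security-hub --region "$region"
    # The product ARN embeds the region, as noted in step 2
    run aws securityhub enable-import-findings-for-product --region "$region" \
      --product-arn "arn:aws:securityhub:${region}::product/prowler/prowler"
  done
}

enable_prowler_in_hub eu-west-1 us-east-2
```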

Once it is enabled, it is as simple as running the command below (for all regions):

```sh
./prowler -M json-asff -S
```

or for only one filtered region like eu-west-1:

```sh
./prowler -M json-asff -q -S -f eu-west-1
```

> Note 1: It is recommended to send only fails to Security Hub, which is possible by adding `-q` to the command.

> Note 2: Since Prowler performs checks in all regions by default, you may need to filter by region when running the Security Hub integration, as shown in the example above. Remember to enable Security Hub in the region or regions you need by calling `aws securityhub enable-security-hub --region <region>` and run Prowler with the option `-f <region>` (if no region is used, it will try to push findings to the hubs in all regions).

@@ -472,6 +682,7 @@ Once you run findings for first time you will be able to see Prowler findings in

### Security Hub in GovCloud regions

To use Prowler and the Security Hub integration in GovCloud there is an additional requirement: `-r` is needed to point the API queries to the right API endpoint. Here is a sample command that sends only failed findings to Security Hub in region `us-gov-west-1`:

```
./prowler -r us-gov-west-1 -f us-gov-west-1 -S -M csv,json-asff -q
```