Compare commits

1 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | 32f81c7672 | | |

2

.github/workflows/pypi-release.yml

vendored

@@ -35,8 +35,6 @@ jobs:

|

||||

git commit -m "chore(release): ${{ env.RELEASE_TAG }}" --no-verify

|

||||

git tag -fa ${{ env.RELEASE_TAG }} -m "chore(release): ${{ env.RELEASE_TAG }}"

|

||||

git push -f origin ${{ env.RELEASE_TAG }}

|

||||

git checkout -B release-${{ env.RELEASE_TAG }}

|

||||

git push origin release-${{ env.RELEASE_TAG }}

|

||||

poetry build

|

||||

- name: Publish prowler package to PyPI

|

||||

run: |

|

||||

|

||||

@@ -61,7 +61,6 @@ repos:

|

||||

hooks:

|

||||

- id: poetry-check

|

||||

- id: poetry-lock

|

||||

args: ["--no-update"]

|

||||

|

||||

- repo: https://github.com/hadolint/hadolint

|

||||

rev: v2.12.1-beta

|

||||

@@ -76,15 +75,6 @@ repos:

|

||||

entry: bash -c 'pylint --disable=W,C,R,E -j 0 -rn -sn prowler/'

|

||||

language: system

|

||||

|

||||

- id: trufflehog

|

||||

name: TruffleHog

|

||||

description: Detect secrets in your data.

|

||||

# entry: bash -c 'trufflehog git file://. --only-verified --fail'

|

||||

# For running trufflehog in docker, use the following entry instead:

|

||||

entry: bash -c 'docker run -v "$(pwd):/workdir" -i --rm trufflesecurity/trufflehog:latest git file:///workdir --only-verified --fail'

|

||||

language: system

|

||||

stages: ["commit", "push"]

|

||||

|

||||

- id: pytest-check

|

||||

name: pytest-check

|

||||

entry: bash -c 'pytest tests -n auto'

|

||||

|

||||

@@ -1,13 +0,0 @@

|

||||

# Do you want to learn how to...

- Contribute with your code or fixes to Prowler
- Create a new check for a provider
- Create a new security compliance framework
- Add a custom output format
- Add a new integration
- Contribute with documentation

Want some swag as appreciation for your contribution?

# Prowler Developer Guide

https://docs.prowler.cloud/en/latest/tutorials/developer-guide/

|

||||

72

README.md

@@ -11,10 +11,11 @@

|

||||

</p>

|

||||

<p align="center">

|

||||

<a href="https://join.slack.com/t/prowler-workspace/shared_invite/zt-1hix76xsl-2uq222JIXrC7Q8It~9ZNog"><img alt="Slack Shield" src="https://img.shields.io/badge/slack-prowler-brightgreen.svg?logo=slack"></a>

|

||||

<a href="https://pypi.org/project/prowler/"><img alt="Python Version" src="https://img.shields.io/pypi/v/prowler.svg"></a>

|

||||

<a href="https://pypi.python.org/pypi/prowler/"><img alt="Python Version" src="https://img.shields.io/pypi/pyversions/prowler.svg"></a>

|

||||

<a href="https://pypi.org/project/prowler-cloud/"><img alt="Python Version" src="https://img.shields.io/pypi/v/prowler.svg"></a>

|

||||

<a href="https://pypi.python.org/pypi/prowler-cloud/"><img alt="Python Version" src="https://img.shields.io/pypi/pyversions/prowler.svg"></a>

|

||||

<a href="https://pypistats.org/packages/prowler"><img alt="PyPI Prowler Downloads" src="https://img.shields.io/pypi/dw/prowler.svg?label=prowler%20downloads"></a>

|

||||

<a href="https://pypistats.org/packages/prowler-cloud"><img alt="PyPI Prowler-Cloud Downloads" src="https://img.shields.io/pypi/dw/prowler-cloud.svg?label=prowler-cloud%20downloads"></a>

|

||||

<a href="https://formulae.brew.sh/formula/prowler#default"><img alt="Brew Prowler Downloads" src="https://img.shields.io/homebrew/installs/dm/prowler?label=brew%20downloads"></a>

|

||||

<a href="https://hub.docker.com/r/toniblyx/prowler"><img alt="Docker Pulls" src="https://img.shields.io/docker/pulls/toniblyx/prowler"></a>

|

||||

<a href="https://hub.docker.com/r/toniblyx/prowler"><img alt="Docker" src="https://img.shields.io/docker/cloud/build/toniblyx/prowler"></a>

|

||||

<a href="https://hub.docker.com/r/toniblyx/prowler"><img alt="Docker" src="https://img.shields.io/docker/image-size/toniblyx/prowler"></a>

|

||||

@@ -35,14 +36,7 @@

|

||||

|

||||

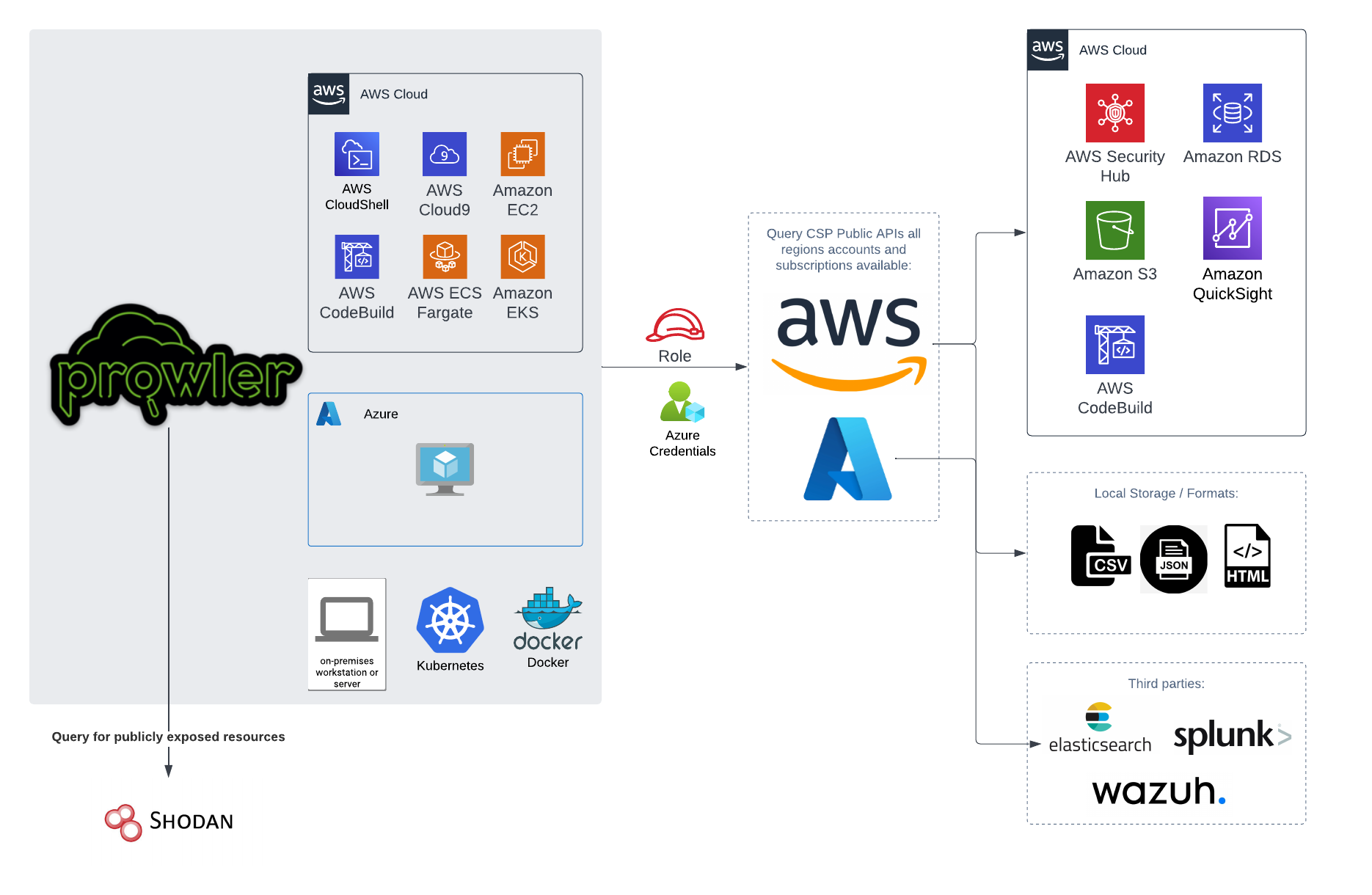

`Prowler` is an Open Source security tool to perform AWS, GCP and Azure security best practices assessments, audits, incident response, continuous monitoring, hardening and forensics readiness.

|

||||

|

||||

It contains hundreds of controls covering CIS, NIST 800, NIST CSF, CISA, RBI, FedRAMP, PCI-DSS, GDPR, HIPAA, FFIEC, SOC2, GXP, AWS Well-Architected Framework Security Pillar, AWS Foundational Technical Review (FTR), ENS (Spanish National Security Schema) and your custom security frameworks.

|

||||

|

||||

| Provider | Checks | Services | [Compliance Frameworks](https://docs.prowler.cloud/en/latest/tutorials/compliance/) | [Categories](https://docs.prowler.cloud/en/latest/tutorials/misc/#categories) |
|---|---|---|---|---|
| AWS | 283 | 55 -> `prowler aws --list-services` | 21 -> `prowler aws --list-compliance` | 5 -> `prowler aws --list-categories` |
| GCP | 73 | 11 -> `prowler gcp --list-services` | 1 -> `prowler gcp --list-compliance` | 0 -> `prowler gcp --list-categories` |
| Azure | 20 | 3 -> `prowler azure --list-services` | CIS soon | 1 -> `prowler azure --list-categories` |
| Kubernetes | Planned | - | - | - |

|

||||

It contains hundreds of controls covering CIS, PCI-DSS, ISO27001, GDPR, HIPAA, FFIEC, SOC2, AWS FTR, ENS and custom security frameworks.

|

||||

|

||||

# 📖 Documentation

|

||||

|

||||

@@ -91,11 +85,11 @@ python prowler.py -v

|

||||

|

||||

You can run Prowler from your workstation, an EC2 instance, Fargate or any other container, Codebuild, CloudShell and Cloud9.

|

||||

|

||||

|

||||

|

||||

|

||||

# 📝 Requirements

|

||||

|

||||

Prowler has been written in Python using the [AWS SDK (Boto3)](https://boto3.amazonaws.com/v1/documentation/api/latest/index.html#), [Azure SDK](https://azure.github.io/azure-sdk-for-python/) and [GCP API Python Client](https://github.com/googleapis/google-api-python-client/).

|

||||

Prowler has been written in Python using the [AWS SDK (Boto3)](https://boto3.amazonaws.com/v1/documentation/api/latest/index.html#) and [Azure SDK](https://azure.github.io/azure-sdk-for-python/).

|

||||

## AWS

|

||||

|

||||

Since Prowler uses AWS Credentials under the hood, you can follow any authentication method as described [here](https://docs.aws.amazon.com/cli/latest/userguide/cli-configure-quickstart.html#cli-configure-quickstart-precedence).

|

||||

@@ -122,6 +116,22 @@ Those credentials must be associated to a user or role with proper permissions t

|

||||

|

||||

> If you want Prowler to send findings to [AWS Security Hub](https://aws.amazon.com/security-hub), make sure you also attach the custom policy [prowler-security-hub.json](https://github.com/prowler-cloud/prowler/blob/master/permissions/prowler-security-hub.json).

|

||||

|

||||

## Google Cloud Platform

|

||||

|

||||

Prowler will follow the same credentials search as [Google authentication libraries](https://cloud.google.com/docs/authentication/application-default-credentials#search_order):

|

||||

|

||||

1. [GOOGLE_APPLICATION_CREDENTIALS environment variable](https://cloud.google.com/docs/authentication/application-default-credentials#GAC)

|

||||

2. [User credentials set up by using the Google Cloud CLI](https://cloud.google.com/docs/authentication/application-default-credentials#personal)

|

||||

3. [The attached service account, returned by the metadata server](https://cloud.google.com/docs/authentication/application-default-credentials#attached-sa)

|

||||

|

||||

Those credentials must be associated to a user or service account with proper permissions to do all checks. To make sure, add the following roles to the member associated with the credentials:

|

||||

|

||||

- Viewer

|

||||

- Security Reviewer

|

||||

- Stackdriver Account Viewer

|

||||

|

||||

> `prowler` will scan the project associated with the credentials.

|

||||

|

||||

## Azure

|

||||

|

||||

Prowler for Azure supports the following authentication types:

|

||||

@@ -144,7 +154,7 @@ export AZURE_CLIENT_SECRET="XXXXXXX"

|

||||

If you try to execute Prowler with the `--sp-env-auth` flag and those variables are empty or not exported, the execution is going to fail.
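The failure mode described above can be checked before starting a scan. The following is a minimal sketch, not Prowler's own code: the helper name `missing_sp_env_vars` is hypothetical, and the three variable names are assumed to be the standard Azure service principal variables (only `AZURE_CLIENT_SECRET` is visible in this diff).

```python
from typing import List, Mapping

# Assumption: the service principal variables expected for --sp-env-auth.
REQUIRED_SP_VARS = ("AZURE_CLIENT_ID", "AZURE_TENANT_ID", "AZURE_CLIENT_SECRET")


def missing_sp_env_vars(environ: Mapping[str, str]) -> List[str]:
    """Return the required variables that are unset or empty, in a fixed order."""
    return [name for name in REQUIRED_SP_VARS if not environ.get(name)]
```

Calling `missing_sp_env_vars(os.environ)` and aborting when the returned list is non-empty gives a clearer error than letting the authentication step fail.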

|

||||

### AZ CLI / Browser / Managed Identity authentication

|

||||

|

||||

The other three cases do not need additional configuration: `--az-cli-auth` and `--managed-identity-auth` are automated options, while `--browser-auth` needs the user to authenticate using the default browser to start the scan. `--browser-auth` also needs the tenant ID to be specified with `--tenant-id`.

|

||||

The other three cases do not need additional configuration: `--az-cli-auth` and `--managed-identity-auth` are automated options, while `--browser-auth` needs the user to authenticate using the default browser to start the scan.

|

||||

|

||||

### Permissions

|

||||

|

||||

@@ -170,22 +180,6 @@ Regarding the subscription scope, Prowler by default scans all the subscriptions

|

||||

- `Reader`

|

||||

|

||||

|

||||

## Google Cloud Platform

|

||||

|

||||

Prowler will follow the same credentials search as [Google authentication libraries](https://cloud.google.com/docs/authentication/application-default-credentials#search_order):

|

||||

|

||||

1. [GOOGLE_APPLICATION_CREDENTIALS environment variable](https://cloud.google.com/docs/authentication/application-default-credentials#GAC)

|

||||

2. [User credentials set up by using the Google Cloud CLI](https://cloud.google.com/docs/authentication/application-default-credentials#personal)

|

||||

3. [The attached service account, returned by the metadata server](https://cloud.google.com/docs/authentication/application-default-credentials#attached-sa)

|

||||

|

||||

Those credentials must be associated to a user or service account with proper permissions to do all checks. To make sure, add the following roles to the member associated with the credentials:

|

||||

|

||||

- Viewer

|

||||

- Security Reviewer

|

||||

- Stackdriver Account Viewer

|

||||

|

||||

> By default, `prowler` will scan all accessible GCP Projects, use flag `--project-ids` to specify the projects to be scanned.

|

||||

|

||||

# 💻 Basic Usage

|

||||

|

||||

To run prowler, you will need to specify the provider (e.g. aws or azure):

|

||||

@@ -251,6 +245,14 @@ prowler aws --profile custom-profile -f us-east-1 eu-south-2

|

||||

```

|

||||

> By default, `prowler` will scan all AWS regions.

|

||||

|

||||

## Google Cloud Platform

|

||||

|

||||

Optionally, you can provide the location of an application credential JSON file with the following argument:

|

||||

|

||||

```console

|

||||

prowler gcp --credentials-file path

|

||||

```

|

||||

|

||||

## Azure

|

||||

|

||||

With Azure you need to specify which auth method is going to be used:

|

||||

@@ -260,14 +262,12 @@ prowler azure [--sp-env-auth, --az-cli-auth, --browser-auth, --managed-identity-

|

||||

```

|

||||

> By default, `prowler` will scan all Azure subscriptions.

|

||||

|

||||

## Google Cloud Platform

|

||||

# 🎉 New Features

|

||||

|

||||

Optionally, you can provide the location of an application credential JSON file with the following argument:

|

||||

|

||||

```console

|

||||

prowler gcp --credentials-file path

|

||||

```

|

||||

> By default, `prowler` will scan all accessible GCP Projects, use flag `--project-ids` to specify the projects to be scanned.

|

||||

- Python: we got rid of all bash and it is now all in Python.
- Faster: huge performance improvements (same account from 2.5 hours to 4 minutes).
- Developers and community: we have made it easier to contribute with new checks and new compliance frameworks. We also included unit tests.
- Multi-cloud: in addition to AWS, we have added Azure, and we plan to include GCP and OCI soon. Let us know if you want to contribute!

|

||||

|

||||

# 📃 License

|

||||

|

||||

|

||||

@@ -1,24 +1,45 @@

|

||||

# Build command

|

||||

# docker build --platform=linux/amd64 --no-cache -t prowler:latest .

|

||||

|

||||

ARG PROWLER_VERSION=latest

|

||||

FROM public.ecr.aws/amazonlinux/amazonlinux:2022

|

||||

|

||||

FROM toniblyx/prowler:${PROWLER_VERSION}

|

||||

ARG PROWLERVER=2.9.0

|

||||

ARG USERNAME=prowler

|

||||

ARG USERID=34000

|

||||

|

||||

USER 0

|

||||

# hadolint ignore=DL3018

|

||||

RUN apk --no-cache add bash aws-cli jq

|

||||

# Install Dependencies

|

||||

RUN \

|

||||

dnf update -y && \

|

||||

dnf install -y bash file findutils git jq python3 python3-pip \

|

||||

python3-setuptools python3-wheel shadow-utils tar unzip which && \

|

||||

dnf remove -y awscli && \

|

||||

dnf clean all && \

|

||||

useradd -l -s /bin/sh -U -u ${USERID} ${USERNAME} && \

|

||||

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip" && \

|

||||

unzip awscliv2.zip && \

|

||||

./aws/install && \

|

||||

pip3 install --no-cache-dir --upgrade pip && \

|

||||

pip3 install --no-cache-dir "git+https://github.com/ibm/detect-secrets.git@master#egg=detect-secrets" && \

|

||||

rm -rf aws awscliv2.zip /var/cache/dnf

|

||||

|

||||

ARG MULTI_ACCOUNT_SECURITY_HUB_PATH=/home/prowler/multi-account-securityhub

|

||||

# Place script and env vars

|

||||

COPY .awsvariables run-prowler-securityhub.sh /

|

||||

|

||||

USER prowler

|

||||

# Installs prowler and change permissions

|

||||

RUN \

|

||||

curl -L "https://github.com/prowler-cloud/prowler/archive/refs/tags/${PROWLERVER}.tar.gz" -o "prowler.tar.gz" && \

|

||||

tar xvzf prowler.tar.gz && \

|

||||

rm -f prowler.tar.gz && \

|

||||

mv prowler-${PROWLERVER} prowler && \

|

||||

chown ${USERNAME}:${USERNAME} /run-prowler-securityhub.sh && \

|

||||

chmod 500 /run-prowler-securityhub.sh && \

|

||||

chown ${USERNAME}:${USERNAME} /.awsvariables && \

|

||||

chmod 400 /.awsvariables && \

|

||||

chown ${USERNAME}:${USERNAME} -R /prowler && \

|

||||

chmod +x /prowler/prowler

|

||||

|

||||

# Move script and environment variables

|

||||

RUN mkdir "${MULTI_ACCOUNT_SECURITY_HUB_PATH}"

|

||||

COPY --chown=prowler:prowler .awsvariables run-prowler-securityhub.sh "${MULTI_ACCOUNT_SECURITY_HUB_PATH}"/

|

||||

RUN chmod 500 "${MULTI_ACCOUNT_SECURITY_HUB_PATH}"/run-prowler-securityhub.sh && \

|

||||

chmod 400 "${MULTI_ACCOUNT_SECURITY_HUB_PATH}"/.awsvariables

|

||||

# Drop to user

|

||||

USER ${USERNAME}

|

||||

|

||||

WORKDIR ${MULTI_ACCOUNT_SECURITY_HUB_PATH}

|

||||

|

||||

ENTRYPOINT ["./run-prowler-securityhub.sh"]

|

||||

# Run script

|

||||

ENTRYPOINT ["/run-prowler-securityhub.sh"]

|

||||

|

||||

51

contrib/multi-account-securityhub/run-prowler-securityhub.sh

Executable file → Normal file

@@ -1,17 +1,20 @@

|

||||

#!/bin/bash

|

||||

# Run Prowler against All AWS Accounts in an AWS Organization

|

||||

|

||||

# Change Directory (rest of the script, assumes you're in the root directory)

|

||||

cd / || exit

|

||||

|

||||

# Show Prowler Version

|

||||

prowler -v

|

||||

./prowler/prowler -V

|

||||

|

||||

# Source .awsvariables

|

||||

# shellcheck disable=SC1091

|

||||

source .awsvariables

|

||||

|

||||

# Get Values from Environment Variables

|

||||

echo "ROLE: ${ROLE}"

|

||||

echo "PARALLEL_ACCOUNTS: ${PARALLEL_ACCOUNTS}"

|

||||

echo "REGION: ${REGION}"

|

||||

echo "ROLE: $ROLE"

|

||||

echo "PARALLEL_ACCOUNTS: $PARALLEL_ACCOUNTS"

|

||||

echo "REGION: $REGION"

|

||||

|

||||

# Function to unset AWS Profile Variables

|

||||

unset_aws() {

|

||||

@@ -21,33 +24,33 @@ unset_aws

|

||||

|

||||

# Find THIS Account AWS Number

|

||||

CALLER_ARN=$(aws sts get-caller-identity --output text --query "Arn")

|

||||

PARTITION=$(echo "${CALLER_ARN}" | cut -d: -f2)

|

||||

THISACCOUNT=$(echo "${CALLER_ARN}" | cut -d: -f5)

|

||||

echo "THISACCOUNT: ${THISACCOUNT}"

|

||||

echo "PARTITION: ${PARTITION}"

|

||||

PARTITION=$(echo "$CALLER_ARN" | cut -d: -f2)

|

||||

THISACCOUNT=$(echo "$CALLER_ARN" | cut -d: -f5)

|

||||

echo "THISACCOUNT: $THISACCOUNT"

|

||||

echo "PARTITION: $PARTITION"

|

||||

|

||||

# Function to Assume Role to THIS Account & Create Session

|

||||

this_account_session() {

|

||||

unset_aws

|

||||

role_credentials=$(aws sts assume-role --role-arn arn:"${PARTITION}":iam::"${THISACCOUNT}":role/"${ROLE}" --role-session-name ProwlerRun --output json)

|

||||

AWS_ACCESS_KEY_ID=$(echo "${role_credentials}" | jq -r .Credentials.AccessKeyId)

|

||||

AWS_SECRET_ACCESS_KEY=$(echo "${role_credentials}" | jq -r .Credentials.SecretAccessKey)

|

||||

AWS_SESSION_TOKEN=$(echo "${role_credentials}" | jq -r .Credentials.SessionToken)

|

||||

role_credentials=$(aws sts assume-role --role-arn arn:"$PARTITION":iam::"$THISACCOUNT":role/"$ROLE" --role-session-name ProwlerRun --output json)

|

||||

AWS_ACCESS_KEY_ID=$(echo "$role_credentials" | jq -r .Credentials.AccessKeyId)

|

||||

AWS_SECRET_ACCESS_KEY=$(echo "$role_credentials" | jq -r .Credentials.SecretAccessKey)

|

||||

AWS_SESSION_TOKEN=$(echo "$role_credentials" | jq -r .Credentials.SessionToken)

|

||||

export AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_SESSION_TOKEN

|

||||

}

|

||||

|

||||

# Find AWS Master Account

|

||||

this_account_session

|

||||

AWSMASTER=$(aws organizations describe-organization --query Organization.MasterAccountId --output text)

|

||||

echo "AWSMASTER: ${AWSMASTER}"

|

||||

echo "AWSMASTER: $AWSMASTER"

|

||||

|

||||

# Function to Assume Role to Master Account & Create Session

|

||||

master_account_session() {

|

||||

unset_aws

|

||||

role_credentials=$(aws sts assume-role --role-arn arn:"${PARTITION}":iam::"${AWSMASTER}":role/"${ROLE}" --role-session-name ProwlerRun --output json)

|

||||

AWS_ACCESS_KEY_ID=$(echo "${role_credentials}" | jq -r .Credentials.AccessKeyId)

|

||||

AWS_SECRET_ACCESS_KEY=$(echo "${role_credentials}" | jq -r .Credentials.SecretAccessKey)

|

||||

AWS_SESSION_TOKEN=$(echo "${role_credentials}" | jq -r .Credentials.SessionToken)

|

||||

role_credentials=$(aws sts assume-role --role-arn arn:"$PARTITION":iam::"$AWSMASTER":role/"$ROLE" --role-session-name ProwlerRun --output json)

|

||||

AWS_ACCESS_KEY_ID=$(echo "$role_credentials" | jq -r .Credentials.AccessKeyId)

|

||||

AWS_SECRET_ACCESS_KEY=$(echo "$role_credentials" | jq -r .Credentials.SecretAccessKey)

|

||||

AWS_SESSION_TOKEN=$(echo "$role_credentials" | jq -r .Credentials.SessionToken)

|

||||

export AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_SESSION_TOKEN

|

||||

}
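The script above derives the partition and account ID by splitting the caller ARN on colons (fields 2 and 5 for `cut`) and then rebuilds a role ARN from them. A minimal Python sketch of the same split follows; the helper names `parse_caller_arn` and `role_arn` are hypothetical and not part of the repository.

```python
from typing import Tuple


def parse_caller_arn(arn: str) -> Tuple[str, str]:
    """Return (partition, account_id) from an ARN.

    Mirrors `cut -d: -f2` and `cut -d: -f5` in the shell script.
    """
    fields = arn.split(":")
    if len(fields) < 6 or fields[0] != "arn":
        raise ValueError(f"not an ARN: {arn!r}")
    return fields[1], fields[4]


def role_arn(partition: str, account_id: str, role: str) -> str:
    """Build the IAM role ARN in the form passed to `aws sts assume-role`."""
    return f"arn:{partition}:iam::{account_id}:role/{role}"
```

Keeping the partition explicit is what lets the same script work outside the default `aws` partition, for example in `aws-cn` or `aws-us-gov`.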

|

||||

|

||||

@@ -57,20 +60,20 @@ ACCOUNTS_IN_ORGS=$(aws organizations list-accounts --query Accounts[*].Id --outp

|

||||

|

||||

# Run Prowler against Accounts in AWS Organization

|

||||

echo "AWS Accounts in Organization"

|

||||

echo "${ACCOUNTS_IN_ORGS}"

|

||||

for accountId in ${ACCOUNTS_IN_ORGS}; do

|

||||

echo "$ACCOUNTS_IN_ORGS"

|

||||

for accountId in $ACCOUNTS_IN_ORGS; do

|

||||

# shellcheck disable=SC2015

|

||||

test "$(jobs | wc -l)" -ge "${PARALLEL_ACCOUNTS}" && wait -n || true

|

||||

test "$(jobs | wc -l)" -ge $PARALLEL_ACCOUNTS && wait -n || true

|

||||

{

|

||||

START_TIME=${SECONDS}

|

||||

START_TIME=$SECONDS

|

||||

# Unset AWS Profile Variables

|

||||

unset_aws

|

||||

# Run Prowler

|

||||

echo -e "Assessing AWS Account: ${accountId}, using Role: ${ROLE} on $(date)"

|

||||

echo -e "Assessing AWS Account: $accountId, using Role: $ROLE on $(date)"

|

||||

# Pipe stdout to /dev/null to reduce unnecessary Cloudwatch logs

|

||||

prowler aws -R arn:"${PARTITION}":iam::"${accountId}":role/"${ROLE}" -q -S -f "${REGION}" > /dev/null

|

||||

./prowler/prowler -R "$ROLE" -A "$accountId" -M json-asff -q -S -f "$REGION" > /dev/null

|

||||

TOTAL_SEC=$((SECONDS - START_TIME))

|

||||

printf "Completed AWS Account: ${accountId} in %02dh:%02dm:%02ds" $((TOTAL_SEC / 3600)) $((TOTAL_SEC % 3600 / 60)) $((TOTAL_SEC % 60))

|

||||

printf "Completed AWS Account: $accountId in %02dh:%02dm:%02ds" $((TOTAL_SEC / 3600)) $((TOTAL_SEC % 3600 / 60)) $((TOTAL_SEC % 60))

|

||||

echo ""

|

||||

} &

|

||||

done
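The loop above starts one background job per account and waits whenever the number of running jobs reaches `PARALLEL_ACCOUNTS`, then prints the elapsed time per account. A rough Python equivalent of that throttling pattern is sketched below. It assumes a caller-supplied `assess` function standing in for the Prowler invocation; `run_bounded` and `format_elapsed` are hypothetical names.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable


def format_elapsed(total_sec: int) -> str:
    """Same layout as the script's printf: hours, minutes, seconds."""
    return "%02dh:%02dm:%02ds" % (
        total_sec // 3600,
        total_sec % 3600 // 60,
        total_sec % 60,
    )


def run_bounded(
    account_ids: Iterable[str],
    assess: Callable[[str], None],
    parallel_accounts: int,
) -> Dict[str, str]:
    """Run `assess` for every account, at most `parallel_accounts` at a time.

    Returns the formatted elapsed time per account ID.
    """

    def timed(account_id: str) -> str:
        start = time.monotonic()
        assess(account_id)  # stand-in for the real scan of one account
        return format_elapsed(int(time.monotonic() - start))

    ids = list(account_ids)
    with ThreadPoolExecutor(max_workers=parallel_accounts) as pool:
        return dict(zip(ids, pool.map(timed, ids)))
```

The thread pool plays the role of `jobs | wc -l` plus `wait -n`: a new account is picked up only when a worker becomes free.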

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

# Requirements

|

||||

|

||||

Prowler has been written in Python using the [AWS SDK (Boto3)](https://boto3.amazonaws.com/v1/documentation/api/latest/index.html#), [Azure SDK](https://azure.github.io/azure-sdk-for-python/) and [GCP API Python Client](https://github.com/googleapis/google-api-python-client/).

|

||||

Prowler has been written in Python using the [AWS SDK (Boto3)](https://boto3.amazonaws.com/v1/documentation/api/latest/index.html#) and [Azure SDK](https://learn.microsoft.com/en-us/python/api/overview/azure/?view=azure-python).

|

||||

## AWS

|

||||

|

||||

Since Prowler uses AWS Credentials under the hood, you can follow any authentication method as described [here](https://docs.aws.amazon.com/cli/latest/userguide/cli-configure-quickstart.html#cli-configure-quickstart-precedence).

|

||||

@@ -30,12 +30,23 @@ Those credentials must be associated to a user or role with proper permissions t

|

||||

|

||||

> If you want Prowler to send findings to [AWS Security Hub](https://aws.amazon.com/security-hub), make sure you also attach the custom policy [prowler-security-hub.json](https://github.com/prowler-cloud/prowler/blob/master/permissions/prowler-security-hub.json).

|

||||

|

||||

### Multi-Factor Authentication

|

||||

## Google Cloud

|

||||

|

||||

If your IAM entity enforces MFA you can use `--mfa` and Prowler will ask you to input the following values to get a new session:

|

||||

### GCP Authentication

|

||||

|

||||

- ARN of your MFA device

|

||||

- TOTP (Time-Based One-Time Password)

|

||||

Prowler will follow the same credentials search as [Google authentication libraries](https://cloud.google.com/docs/authentication/application-default-credentials#search_order):

|

||||

|

||||

1. [GOOGLE_APPLICATION_CREDENTIALS environment variable](https://cloud.google.com/docs/authentication/application-default-credentials#GAC)

|

||||

2. [User credentials set up by using the Google Cloud CLI](https://cloud.google.com/docs/authentication/application-default-credentials#personal)

|

||||

3. [The attached service account, returned by the metadata server](https://cloud.google.com/docs/authentication/application-default-credentials#attached-sa)

|

||||

|

||||

Those credentials must be associated to a user or service account with proper permissions to do all checks. To make sure, add the following roles to the member associated with the credentials:

|

||||

|

||||

- Viewer

|

||||

- Security Reviewer

|

||||

- Stackdriver Account Viewer

|

||||

|

||||

> `prowler` will scan the project associated with the credentials.

|

||||

|

||||

## Azure

|

||||

|

||||

@@ -59,7 +70,7 @@ export AZURE_CLIENT_SECRET="XXXXXXX"

|

||||

If you try to execute Prowler with the `--sp-env-auth` flag and those variables are empty or not exported, the execution is going to fail.

|

||||

### AZ CLI / Browser / Managed Identity authentication

|

||||

|

||||

The other three cases do not need additional configuration: `--az-cli-auth` and `--managed-identity-auth` are automated options. To use `--browser-auth`, the user needs to authenticate against Azure using the default browser to start the scan; `--tenant-id` is also required.

|

||||

The other three cases do not need additional configuration: `--az-cli-auth` and `--managed-identity-auth` are automated options, while `--browser-auth` needs the user to authenticate using the default browser to start the scan.

|

||||

|

||||

### Permissions

|

||||

|

||||

@@ -86,21 +97,3 @@ Regarding the subscription scope, Prowler by default scans all the subscriptions

|

||||

|

||||

- `Security Reader`

|

||||

- `Reader`

|

||||

|

||||

## Google Cloud

|

||||

|

||||

### GCP Authentication

|

||||

|

||||

Prowler will follow the same credentials search as [Google authentication libraries](https://cloud.google.com/docs/authentication/application-default-credentials#search_order):

|

||||

|

||||

1. [GOOGLE_APPLICATION_CREDENTIALS environment variable](https://cloud.google.com/docs/authentication/application-default-credentials#GAC)

|

||||

2. [User credentials set up by using the Google Cloud CLI](https://cloud.google.com/docs/authentication/application-default-credentials#personal)

|

||||

3. [The attached service account, returned by the metadata server](https://cloud.google.com/docs/authentication/application-default-credentials#attached-sa)

|

||||

|

||||

Those credentials must be associated to a user or service account with proper permissions to do all checks. To make sure, add the following roles to the member associated with the credentials:

|

||||

|

||||

- Viewer

|

||||

- Security Reviewer

|

||||

- Stackdriver Account Viewer

|

||||

|

||||

> By default, `prowler` will scan all accessible GCP Projects, use flag `--project-ids` to specify the projects to be scanned.

|

||||

|

||||

|

Image: 283 KiB before, 258 KiB after.

Image: 11 KiB before (no after version shown).

Image: 22 KiB before (no after version shown).

@@ -109,7 +109,7 @@ Prowler is available as a project in [PyPI](https://pypi.org/project/prowler-clo

|

||||

_Requirements_:

|

||||

|

||||

* AWS, GCP and/or Azure credentials

|

||||

* Latest Amazon Linux 2 should come with Python 3.9 already installed however it may need pip. Install Python pip 3.9 with: `sudo yum install -y python3-pip`.

|

||||

* Latest Amazon Linux 2 should come with Python 3.9 already installed however it may need pip. Install Python pip 3.9 with: `sudo dnf install -y python3-pip`.

|

||||

* Make sure setuptools for python is already installed with: `pip3 install setuptools`

|

||||

|

||||

_Commands_:

|

||||

@@ -254,7 +254,13 @@ prowler aws --profile custom-profile -f us-east-1 eu-south-2

|

||||

```

|

||||

> By default, `prowler` will scan all AWS regions.

|

||||

|

||||

See more details about AWS Authentication in [Requirements](getting-started/requirements.md)

|

||||

### Google Cloud

|

||||

|

||||

Optionally, you can provide the location of an application credential JSON file with the following argument:

|

||||

|

||||

```console

|

||||

prowler gcp --credentials-file path

|

||||

```

|

||||

|

||||

### Azure

|

||||

|

||||

@@ -274,31 +280,9 @@ prowler azure --browser-auth

|

||||

prowler azure --managed-identity-auth

|

||||

```

|

||||

|

||||

See more details about Azure Authentication in [Requirements](getting-started/requirements.md)

|

||||

More details in [Requirements](getting-started/requirements.md)

|

||||

|

||||

By default, Prowler scans all the subscriptions it is allowed to scan. To scan a single subscription or several specific subscriptions, use the following flag (using az cli auth as an example):

|

||||

By default, Prowler scans all the subscriptions it is allowed to scan. To scan a single subscription or several concrete subscriptions, use the following flag (using az cli auth as an example):

|

||||

```console

|

||||

prowler azure --az-cli-auth --subscription-ids <subscription ID 1> <subscription ID 2> ... <subscription ID N>

|

||||

```

|

||||

|

||||

### Google Cloud

|

||||

|

||||

By default, Prowler uses your User Account credentials. You can configure them using:

|

||||

|

||||

- `gcloud init` to use a new account

|

||||

- `gcloud config set account <account>` to use an existing account

|

||||

|

||||

Then, obtain your access credentials using: `gcloud auth application-default login`

|

||||

|

||||

Otherwise, you can generate and download Service Account keys in JSON format (refer to https://cloud.google.com/iam/docs/creating-managing-service-account-keys) and provide the location of the file with the following argument:

|

||||

|

||||

```console

|

||||

prowler gcp --credentials-file path

|

||||

```

|

||||

|

||||

By default, Prowler scans all the GCP Projects it is allowed to scan. To scan a single project or several specific projects, use the following flag:

|

||||

```console

|

||||

prowler gcp --project-ids <Project ID 1> <Project ID 2> ... <Project ID N>

|

||||

```

|

||||

|

||||

See more details about GCP Authentication in [Requirements](getting-started/requirements.md)
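The credential lookup described above can be pictured as a fixed order of checks. The sketch below only reports which source would be picked; it does not load any credentials or call Google libraries. The function name `credentials_source` is hypothetical, the precedence of `--credentials-file` over the environment variable is an assumption, and the user credentials path is the usual Linux/macOS location written by `gcloud auth application-default login`.

```python
from pathlib import Path
from typing import Mapping, Optional


def credentials_source(
    credentials_file: Optional[str],
    environ: Mapping[str, str],
    home: Path,
) -> str:
    """Describe which credential source would be used, in search order."""
    # Assumption: an explicit --credentials-file argument wins over everything.
    if credentials_file:
        return f"file passed with --credentials-file: {credentials_file}"
    # 1. GOOGLE_APPLICATION_CREDENTIALS environment variable
    env_path = environ.get("GOOGLE_APPLICATION_CREDENTIALS")
    if env_path:
        return f"GOOGLE_APPLICATION_CREDENTIALS: {env_path}"
    # 2. User credentials set up with the Google Cloud CLI
    adc = home / ".config" / "gcloud" / "application_default_credentials.json"
    if adc.is_file():
        return f"gcloud user credentials: {adc}"
    # 3. The attached service account, returned by the metadata server
    return "attached service account from the metadata server"
```

Passing `os.environ` and `Path.home()` reproduces the lookup for the current shell; passing explicit values keeps the function easy to test.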

|

||||

|

||||

@@ -7,11 +7,9 @@ You can use `-w`/`--allowlist-file` with the path of your allowlist yaml file, b

|

||||

|

||||

## Allowlist Yaml File Syntax

|

||||

|

||||

### Account, Check and/or Region can be * to apply for all the cases.

|

||||

### Resources and tags are lists that can have either Regex or Keywords.

|

||||

### Tags is an optional list that matches on tuples of 'key=value' and are "ANDed" together.

|

||||

### Use an alternation Regex to match one of multiple tags with "ORed" logic.

|

||||

### For each check you can except Accounts, Regions, Resources and/or Tags.

|

||||

### Account, Check and/or Region can be * to apply for all the cases

|

||||

### Resources is a list that can have either Regex or Keywords

|

||||

### Tags is an optional list containing tuples of 'key=value'

|

||||

########################### ALLOWLIST EXAMPLE ###########################

|

||||

Allowlist:

|

||||

Accounts:

|

||||

@@ -23,19 +21,14 @@ You can use `-w`/`--allowlist-file` with the path of your allowlist yaml file, b

|

||||

Resources:

|

||||

- "user-1" # Will ignore user-1 in check iam_user_hardware_mfa_enabled

|

||||

- "user-2" # Will ignore user-2 in check iam_user_hardware_mfa_enabled

|

||||

"ec2_*":

|

||||

Regions:

|

||||

- "*"

|

||||

Resources:

|

||||

- "*" # Will ignore every EC2 check in every account and region

|

||||

"*":

|

||||

Regions:

|

||||

- "*"

|

||||

Resources:

|

||||

- "test"

|

||||

- "test" # Will ignore every resource containing the string "test" and the tags 'test=test' and 'project=test' in account 123456789012 and every region

|

||||

Tags:

|

||||

- "test=test" # Will ignore every resource containing the string "test" and the tags 'test=test' and

|

||||

- "project=test|project=stage" # either of ('project=test' OR project=stage) in account 123456789012 and every region

|

||||

- "test=test" # Will ignore every resource containing the string "test" and the tags 'test=test' and 'project=test' in account 123456789012 and every region

|

||||

- "project=test"

|

||||

|

||||

"*":

|

||||

Checks:

|

||||

@@ -46,7 +39,7 @@ You can use `-w`/`--allowlist-file` with the path of your allowlist yaml file, b

|

||||

Resources:

|

||||

- "ci-logs" # Will ignore bucket "ci-logs" AND ALSO bucket "ci-logs-replica" in specified check and regions

|

||||

- "logs" # Will ignore EVERY BUCKET containing the string "logs" in specified check and regions

|

||||

- ".+-logs" # Will ignore all buckets containing the terms ci-logs, qa-logs, etc. in specified check and regions

|

||||

- "[[:alnum:]]+-logs" # Will ignore all buckets containing the terms ci-logs, qa-logs, etc. in specified check and regions

|

||||

"*":

|

||||

Regions:

|

||||

- "*"

|

||||

@@ -55,33 +48,6 @@ You can use `-w`/`--allowlist-file` with the path of your allowlist yaml file, b

|

||||

Tags:

|

||||

- "environment=dev" # Will ignore every resource containing the tag 'environment=dev' in every account and region

|

||||

|

||||

"*":

|

||||

Checks:

|

||||

"ecs_task_definitions_no_environment_secrets":

|

||||

Regions:

|

||||

- "*"

|

||||

Resources:

|

||||

- "*"

|

||||

Exceptions:

|

||||

Accounts:

|

||||

- "0123456789012"

|

||||

Regions:

|

||||

- "eu-west-1"

|

||||

- "eu-south-2" # Will ignore every resource in check ecs_task_definitions_no_environment_secrets except the ones in account 0123456789012 located in eu-south-2 or eu-west-1

|

||||

|

||||

"123456789012":

|

||||

Checks:

|

||||

"*":

|

||||

Regions:

|

||||

- "*"

|

||||

Resources:

|

||||

- "*"

|

||||

Exceptions:

|

||||

Resources:

|

||||

- "test"

|

||||

Tags:

|

||||

- "environment=prod" # Will ignore every resource in account 123456789012 except the ones containing the string "test" and tag environment=prod

|

||||

|

||||

|

||||

## Supported Allowlist Locations

|

||||

|

||||

@@ -116,9 +82,6 @@ prowler aws -w arn:aws:dynamodb:<region_name>:<account_id>:table/<table_name>

|

||||

- Regions (List): This field contains a list of regions where this allowlist rule is applied (it can also contain `*` to apply to all scanned regions).

|

||||

- Resources (List): This field contains a list of regular expressions matching the resources to be allowlisted.

|

||||

- Tags (List): -Optional- This field contains a list of 'key=value' pairs matching the resource tags to be allowlisted.

|

||||

- Exceptions (Map): -Optional- This field contains a map of lists of accounts/regions/resources/tags to be excluded from the allowlist.
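
The `Resources` entries are regular expressions, and the comments in the YAML example say that `"ci-logs"` also matches `ci-logs-replica`. That suggests a "found anywhere in the name" match. The sketch below illustrates that behaviour with Python's `re.search`. It is only an assumption about the matching semantics, not Prowler's actual implementation; the function name `is_allowlisted` and the special handling of `"*"` are hypothetical.

```python
import re


def is_allowlisted(resource_name, patterns):
    """Return True if any pattern is found anywhere in the resource name.

    Assumption: "*" means "match everything" and is handled separately,
    because a bare "*" is not a valid Python regular expression.
    """
    for pattern in patterns:
        if pattern == "*":
            return True
        if re.search(pattern, resource_name):
            return True
    return False


if __name__ == "__main__":
    for bucket in ("ci-logs", "ci-logs-replica", "qa-logs", "my-bucket"):
        print(bucket, is_allowlisted(bucket, ["ci-logs", ".+-logs"]))
```

Under this assumption, anchoring a pattern (for example `^ci-logs$`) would be the way to match a single bucket only.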

|

||||

|

||||

The following example will allowlist all resources in all accounts for the EC2 checks in the regions `eu-west-1` and `us-east-1` with the tags `environment=dev` and `environment=prod`, except the resources containing the string `test` in the account `012345678912` and region `eu-west-1` with the tag `environment=prod`:

|

||||

|

||||

<img src="../img/allowlist-row.png"/>

|

||||

|

||||

|

||||

@@ -1,31 +0,0 @@

|

||||

# AWS Authentication

|

||||

|

||||

Make sure you have properly configured your AWS-CLI with a valid Access Key and Region or declare AWS variables properly (or instance profile/role):

|

||||

|

||||

```console

|

||||

aws configure

|

||||

```

|

||||

|

||||

or

|

||||

|

||||

```console

|

||||

export AWS_ACCESS_KEY_ID="ASXXXXXXX"

|

||||

export AWS_SECRET_ACCESS_KEY="XXXXXXXXX"

|

||||

export AWS_SESSION_TOKEN="XXXXXXXXX"

|

||||

```

|

||||

|

||||

Those credentials must be associated to a user or role with proper permissions to do all checks. To make sure, add the following AWS managed policies to the user or role being used:

|

||||

|

||||

- arn:aws:iam::aws:policy/SecurityAudit

|

||||

- arn:aws:iam::aws:policy/job-function/ViewOnlyAccess

|

||||

|

||||

> Moreover, several checks need additional read-only permissions, so make sure you also attach the custom policy [prowler-additions-policy.json](https://github.com/prowler-cloud/prowler/blob/master/permissions/prowler-additions-policy.json) to the role you are using.

|

||||

|

||||

> If you want Prowler to send findings to [AWS Security Hub](https://aws.amazon.com/security-hub), make sure you also attach the custom policy [prowler-security-hub.json](https://github.com/prowler-cloud/prowler/blob/master/permissions/prowler-security-hub.json).

|

||||

|

||||

## Multi-Factor Authentication

|

||||

|

||||

If your IAM entity enforces MFA you can use `--mfa` and Prowler will ask you to input the following values to get a new session:

|

||||

|

||||

- ARN of your MFA device

|

||||

- TOTP (Time-Based One-Time Password)

|

||||

@@ -1,81 +0,0 @@

|

||||

# AWS Regions and Partitions

|

||||

|

||||

By default Prowler is able to scan the following AWS partitions:

|

||||

|

||||

- Commercial: `aws`

|

||||

- China: `aws-cn`

|

||||

- GovCloud (US): `aws-us-gov`

|

||||

|

||||

> To check the available regions for each partition and service please refer to the following document [aws_regions_by_service.json](https://github.com/prowler-cloud/prowler/blob/master/prowler/providers/aws/aws_regions_by_service.json)

|

||||

|

||||

It is important to take into consideration that to scan the China (`aws-cn`) or GovCloud (`aws-us-gov`) partitions it is either required to have a valid region for that partition in your AWS credentials or to specify the regions you want to audit for that partition using the `-f/--region` flag.

|

||||

> Please, refer to https://boto3.amazonaws.com/v1/documentation/api/latest/guide/credentials.html#configuring-credentials for more information about the AWS credentials configuration.

|

||||

|

||||

You can get more information about the available partitions and regions in the following [Botocore](https://github.com/boto/botocore) [file](https://github.com/boto/botocore/blob/22a19ea7c4c2c4dd7df4ab8c32733cba0c7597a4/botocore/data/partitions.json).
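
As a rough illustration of how a region name relates to the three partitions listed above, the following sketch guesses the partition from the region prefix. It is a heuristic for illustration only: the function name `partition_for_region` is hypothetical, and the authoritative mapping is the Botocore partitions file linked above.

```python
# Prefix -> partition, based only on the region names used in this document.
PARTITION_PREFIXES = (
    ("cn-", "aws-cn"),
    ("us-gov-", "aws-us-gov"),
)


def partition_for_region(region):
    """Guess the AWS partition from a region name; default to commercial."""
    for prefix, partition in PARTITION_PREFIXES:
        if region.startswith(prefix):
            return partition
    return "aws"


if __name__ == "__main__":
    for region in ("eu-west-1", "cn-north-1", "us-gov-east-1"):
        print(region, "->", partition_for_region(region))
```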

|

||||

## AWS China

|

||||

|

||||

To scan your AWS account in the China partition (`aws-cn`):

|

||||

|

||||

- Using the `-f/--region` flag:

|

||||

```

|

||||

prowler aws --region cn-north-1 cn-northwest-1

|

||||

```

|

||||

- Using the region configured in your AWS profile at `~/.aws/credentials` or `~/.aws/config`:

|

||||

```

|

||||

[default]

|

||||

aws_access_key_id = XXXXXXXXXXXXXXXXXXX

|

||||

aws_secret_access_key = XXXXXXXXXXXXXXXXXXX

|

||||

region = cn-north-1

|

||||

```

|

||||

> With this option, all the partition regions will be scanned without needing to use the `-f/--region` flag

|

||||

|

||||

|

||||

## AWS GovCloud (US)

|

||||

|

||||

To scan your AWS account in the GovCloud (US) partition (`aws-us-gov`):

|

||||

|

||||

- Using the `-f/--region` flag:

|

||||

```

|

||||

prowler aws --region us-gov-east-1 us-gov-west-1

|

||||

```

|

||||

- Using the region configured in your AWS profile at `~/.aws/credentials` or `~/.aws/config`:

|

||||

```

|

||||

[default]

|

||||

aws_access_key_id = XXXXXXXXXXXXXXXXXXX

|

||||

aws_secret_access_key = XXXXXXXXXXXXXXXXXXX

|

||||

region = us-gov-east-1

|

||||

```

|

||||

> With this option, all the partition regions will be scanned without needing to use the `-f/--region` flag

|

||||

|

||||

|

||||

## AWS ISO (US & Europe)

|

||||

|

||||

For the AWS ISO partitions, which are known as "secret partitions" and are air-gapped from the Internet, there is no built-in way to scan them. If you want to audit an AWS account in one of the AWS ISO partitions, you should manually update [aws_regions_by_service.json](https://github.com/prowler-cloud/prowler/blob/master/prowler/providers/aws/aws_regions_by_service.json) to include the partition, region and services, e.g.:

|

||||

```json

|

||||

"iam": {

|

||||

"regions": {

|

||||

"aws": [

|

||||

"eu-west-1",

|

||||

"us-east-1",

|

||||

],

|

||||

"aws-cn": [

|

||||

"cn-north-1",

|

||||

"cn-northwest-1"

|

||||

],

|

||||

"aws-us-gov": [

|

||||

"us-gov-east-1",

|

||||

"us-gov-west-1"

|

||||

],

|

||||

"aws-iso": [

|

||||

"aws-iso-global",

|

||||

"us-iso-east-1",

|

||||

"us-iso-west-1"

|

||||

],

|

||||

"aws-iso-b": [

|

||||

"aws-iso-b-global",

|

||||

"us-isob-east-1"

|

||||

],

|

||||

"aws-iso-e": [],

|

||||

}

|

||||

},

|

||||

```
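
The manual update described above could also be scripted. The sketch below adds regions for a partition to a dictionary shaped like the fragment shown (`service -> "regions" -> partition -> [regions]`). This is an assumption-laden sketch: the helper name `add_partition_regions` is hypothetical, and the real file may nest services under an additional top-level key, so check its layout before adapting this.

```python
import json


def add_partition_regions(regions_by_service, service, partition, regions):
    """Add regions under service -> "regions" -> partition without duplicates."""
    partition_map = regions_by_service.setdefault(service, {}).setdefault("regions", {})
    existing = partition_map.setdefault(partition, [])
    for region in regions:
        if region not in existing:
            existing.append(region)
    return regions_by_service


# Example data mirroring the shape of the fragment above (not the real file).
data = {"iam": {"regions": {"aws": ["eu-west-1", "us-east-1"]}}}
add_partition_regions(
    data, "iam", "aws-iso", ["aws-iso-global", "us-iso-east-1", "us-iso-west-1"]
)

if __name__ == "__main__":
    print(json.dumps(data, indent=2))
```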

|

||||

@@ -5,7 +5,7 @@ Prowler uses the AWS SDK (Boto3) underneath so it uses the same authentication m

|

||||

However, there are few ways to run Prowler against multiple accounts using IAM Assume Role feature depending on each use case:

|

||||

|

||||

1. You can just set up your custom profile inside `~/.aws/config` with all needed information about the role to assume then call it with `prowler aws -p/--profile your-custom-profile`.

|

||||

- An example profile that performs role-chaining is given below. The `credential_source` can either be set to `Environment`, `Ec2InstanceMetadata`, or `EcsContainer`.

|

||||

- An example profile that performs role-chaining is given below. The `credential_source` can either be set to `Environment`, `Ec2InstanceMetadata`, or `EcsContainer`.

|

||||

- Alternatively, you could use the `source_profile` instead of `credential_source` to specify a separate named profile that contains IAM user credentials with permission to assume the target role. More information can be found [here](https://docs.aws.amazon.com/cli/latest/userguide/cli-configure-role.html).

|

||||

```

|

||||

[profile crossaccountrole]

|

||||

@@ -23,13 +23,6 @@ prowler aws -R arn:aws:iam::<account_id>:role/<role_name>

|

||||

prowler aws -T/--session-duration <seconds> -I/--external-id <external_id> -R arn:aws:iam::<account_id>:role/<role_name>

|

||||

```

|

||||

|

||||

## Role MFA

|

||||

|

||||

If your IAM Role has MFA configured you can use `--mfa` along with `-R`/`--role <role_arn>` and Prowler will ask you to input the following values to get a new temporary session for the IAM Role provided:

|

||||

- ARN of your MFA device

|

||||

- TOTP (Time-Based One-Time Password)

|

||||

|

||||

|

||||

## Create Role

|

||||

|

||||

To create a role to be assumed in one or multiple accounts you can use either as CloudFormation Stack or StackSet the following [template](https://github.com/prowler-cloud/prowler/blob/master/permissions/create_role_to_assume_cfn.yaml) and adapt it.

|

||||

|

||||

@@ -29,34 +29,14 @@ prowler -S -f eu-west-1

|

||||

|

||||

> **Note 1**: It is recommended to send only fails to Security Hub and that is possible adding `-q` to the command.

|

||||

|

||||

> **Note 2**: Since Prowler performs checks in all regions by default, you may need to filter by region when running the Security Hub integration, as shown in the example above. Remember to enable Security Hub in the region or regions you need by calling `aws securityhub enable-security-hub --region <region>` and run Prowler with the option `-f <region>` (if no region is specified, it will try to push findings to the Security Hub of every region). Prowler will send findings to the Security Hub in the region where the scanned resource is located.

|

||||

> **Note 2**: Since Prowler performs checks in all regions by default, you may need to filter by region when running the Security Hub integration, as shown in the example above. Remember to enable Security Hub in the region or regions you need by calling `aws securityhub enable-security-hub --region <region>` and run Prowler with the option `-f <region>` (if no region is specified, it will try to push findings to the Security Hub of every region).

|

||||

|

||||

> **Note 3**: To have updated findings in Security Hub you have to run Prowler periodically. Once a day or every certain amount of hours.

|

||||

> **Note 3** to have updated findings in Security Hub you have to run Prowler periodically. Once a day or every certain amount of hours.

|

||||

|

||||

Once you run Prowler for the first time, you will be able to see its findings in the Findings section:

|

||||

|

||||

|

||||

|

||||

## Send findings to Security Hub assuming an IAM Role

|

||||

|

||||

When you are auditing a multi-account AWS environment, you can send findings to a Security Hub of another account by assuming an IAM role from that account using the `-R` flag in the Prowler command:

|

||||

|

||||

```sh

|

||||

prowler -S -R arn:aws:iam::123456789012:role/ProwlerExecRole

|

||||

```

|

||||

|

||||

> Remember that the used role needs to have permissions to send findings to Security Hub. To get more information about the permissions required, please refer to the following IAM policy [prowler-security-hub.json](https://github.com/prowler-cloud/prowler/blob/master/permissions/prowler-security-hub.json)

|

||||

|

||||

|

||||

## Send only failed findings to Security Hub

|

||||

|

||||

When using Security Hub it is recommended to send only the failed findings generated. To follow that recommendation you could add the `-q` flag to the Prowler command:

|

||||

|

||||

```sh

|

||||

prowler -S -q

|

||||

```

|

||||

|

||||

|

||||

## Skip sending updates of findings to Security Hub

|

||||

|

||||

By default, Prowler archives all its findings in Security Hub that have not appeared in the last scan.

|

||||

|

||||

@@ -18,7 +18,7 @@ prowler azure --sp-env-auth

|

||||

prowler azure --az-cli-auth

|

||||

|

||||

# To use browser authentication

|

||||

prowler azure --browser-auth --tenant-id "XXXXXXXX"

|

||||

prowler azure --browser-auth

|

||||

|

||||

# To use managed identity auth

|

||||

prowler azure --managed-identity-auth

|

||||

|

||||

@@ -13,7 +13,6 @@ Currently, the available frameworks are:

|

||||

- `ens_rd2022_aws`

|

||||

- `aws_audit_manager_control_tower_guardrails_aws`

|

||||

- `aws_foundational_security_best_practices_aws`

|

||||

- `aws_well_architected_framework_security_pillar_aws`

|

||||

- `cisa_aws`

|

||||

- `fedramp_low_revision_4_aws`

|

||||

- `fedramp_moderate_revision_4_aws`

|

||||

|

||||

@@ -1,29 +0,0 @@

|

||||

# GCP authentication

|

||||

|

||||

By default, Prowler will use your User Account credentials, which you can configure using:

|

||||

|

||||

- `gcloud init` to use a new account

|

||||

- `gcloud config set account <account>` to use an existing account

|

||||

|

||||

Then, obtain your access credentials using: `gcloud auth application-default login`

|

||||

|

||||

Otherwise, you can generate and download Service Account keys in JSON format (refer to https://cloud.google.com/iam/docs/creating-managing-service-account-keys) and provide the location of the file with the following argument:

|

||||

|

||||

```console

|

||||

prowler gcp --credentials-file path

|

||||

```

|

||||

|

||||

> `prowler` will scan the GCP project associated with the credentials.

|

||||

|

||||

|

||||

Prowler will follow the same credentials search as [Google authentication libraries](https://cloud.google.com/docs/authentication/application-default-credentials#search_order):

|

||||

|

||||

1. [GOOGLE_APPLICATION_CREDENTIALS environment variable](https://cloud.google.com/docs/authentication/application-default-credentials#GAC)

|

||||

2. [User credentials set up by using the Google Cloud CLI](https://cloud.google.com/docs/authentication/application-default-credentials#personal)

|

||||

3. [The attached service account, returned by the metadata server](https://cloud.google.com/docs/authentication/application-default-credentials#attached-sa)
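
The search order above can be approximated locally to see which source is likely to be picked up. This sketch only inspects the environment and the file system and does not call any Google library. The function name `likely_adc_source` is hypothetical, and the well-known file path used here is the Linux/macOS location written by `gcloud auth application-default login` (an assumption; Windows uses a different directory).

```python
import os
from pathlib import Path


def likely_adc_source(environ=None, home=None):
    """Return a label for the first credential source that appears to be present."""
    env = os.environ if environ is None else environ
    home_dir = Path.home() if home is None else Path(home)

    # 1. Explicit key file via environment variable.
    if env.get("GOOGLE_APPLICATION_CREDENTIALS"):
        return "GOOGLE_APPLICATION_CREDENTIALS"

    # 2. User credentials created with the Google Cloud CLI (assumed path).
    well_known = home_dir / ".config" / "gcloud" / "application_default_credentials.json"
    if well_known.is_file():
        return "gcloud user credentials"

    # 3. Otherwise the attached service account would be tried.
    return "attached service account (metadata server)"


if __name__ == "__main__":
    print(likely_adc_source())
```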

|

||||

|

||||

Those credentials must be associated to a user or service account with proper permissions to do all checks. To make sure, add the following roles to the member associated with the credentials:

|

||||

|

||||

- Viewer

|

||||

- Security Reviewer

|

||||

- Stackdriver Account Viewer

|

||||

|


@@ -1,36 +0,0 @@

|

||||

# Integrations

|

||||

|

||||

## Slack

|

||||

|

||||

Prowler can be integrated with [Slack](https://slack.com/) to send a summary of the execution. Once a Slack app is configured in your channel, use the following command:

|

||||

|

||||

```sh

|

||||

prowler <provider> --slack

|

||||

```

|

||||

|

||||

|

||||

|

||||

> Slack integration needs SLACK_API_TOKEN and SLACK_CHANNEL_ID environment variables.

|

||||

### Configuration

|

||||

|

||||

To configure the Slack Integration, follow the next steps:

|

||||

|

||||

1. Create a Slack Application:

|

||||

- Go to [Slack API page](https://api.slack.com/tutorials/tracks/getting-a-token), scroll down to the *Create app* button and select your workspace:

|

||||

|

||||

|

||||

- Install the application in your selected workspaces:

|

||||

|

||||

|

||||

- Get the *Slack App OAuth Token* that Prowler needs to send the message:

|

||||

|

||||

|

||||

2. Optionally, create a Slack Channel (you can use an existing one)

|

||||

|

||||

3. Integrate the created Slack App to your Slack channel:

|

||||

- Click on the channel, go to the Integrations tab, and Add an App.

|

||||

|

||||

|

||||

4. Set the following environment variables that Prowler will read:

|

||||

- `SLACK_API_TOKEN`: the *Slack App OAuth Token* that was obtained previously.

|

||||

- `SLACK_CHANNEL_ID`: the name of your Slack Channel where Prowler will send the message.
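
Before running `prowler <provider> --slack`, it can be handy to confirm that both variables are set. The following is a small pre-flight sketch, not part of Prowler; the helper name `missing_slack_vars` is hypothetical, and it only checks that the variables are non-empty, not that the token is valid.

```python
import os

REQUIRED_SLACK_VARS = ("SLACK_API_TOKEN", "SLACK_CHANNEL_ID")


def missing_slack_vars(environ=None):
    """Return the required Slack variables that are unset or empty."""
    env = os.environ if environ is None else environ
    return [name for name in REQUIRED_SLACK_VARS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_slack_vars()
    if missing:
        print("Missing environment variables:", ", ".join(missing))
    else:
        print("Slack environment variables are set.")
```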

|

||||

@@ -51,26 +51,6 @@ prowler <provider> -e/--excluded-checks ec2 rds

|

||||

```console

|

||||

prowler <provider> -C/--checks-file <checks_list>.json

|

||||

```

|

||||

## Custom Checks

|

||||

Prowler allows you to include your custom checks with the flag:

|

||||

```console

|

||||

prowler <provider> -x/--checks-folder <custom_checks_folder>

|

||||

```

|

||||

> S3 URIs are also supported as folders for custom checks, e.g. s3://bucket/prefix/checks_folder/. Make sure that the used credentials have s3:GetObject permissions in the S3 path where the custom checks are located.

|

||||

|

||||

The custom checks folder must contain one subfolder per check. Each subfolder must be named after the check and must contain:

|

||||

|

||||

- An empty `__init__.py`: to make Python treat this check folder as a package.

|

||||

- A `check_name.py` containing the check's logic.

|

||||

- A `check_name.metadata.json` containing the check's metadata.

|

||||

>The check name must start with the service name followed by an underscore (e.g., ec2_instance_public_ip).
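
The layout described above can be verified locally before passing the folder to `-x/--checks-folder`. The sketch below is a standalone helper, not part of Prowler; the function name `missing_check_files` is hypothetical. It only checks that the three expected files exist in each check subfolder and does not validate their contents or the service-name prefix.

```python
from pathlib import Path


def missing_check_files(checks_folder):
    """Map each check subfolder name to the expected files it is missing."""
    problems = {}
    for check_dir in sorted(Path(checks_folder).iterdir()):
        if not check_dir.is_dir():
            continue
        name = check_dir.name
        expected = ["__init__.py", f"{name}.py", f"{name}.metadata.json"]
        missing = [f for f in expected if not (check_dir / f).is_file()]
        if missing:
            problems[name] = missing
    return problems


if __name__ == "__main__":
    import sys

    folder = sys.argv[1] if len(sys.argv) > 1 else "."
    for check, files in missing_check_files(folder).items():
        print(f"{check}: missing {', '.join(files)}")
```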

|

||||

|

||||

For more information about how to write checks, see the [Developer Guide](../developer-guide/#create-a-new-check-for-a-provider).

|

||||

|

||||

> If you want to run ONLY your custom check(s), import it with -x (--checks-folder) and then run it with -c (--checks), e.g.:

|

||||

```console

|

||||

prowler aws -x s3://bucket/prowler/providers/aws/services/s3/s3_bucket_policy/ -c s3_bucket_policy

|

||||

```

|

||||

|

||||

## Severities

|

||||

Each of Prowler's checks has a severity, which can be:

|

||||

|

||||

@@ -11,7 +11,7 @@ The actual checks that have this funcionality are:

|

||||

1. autoscaling_find_secrets_ec2_launch_configuration

|

||||

- awslambda_function_no_secrets_in_code

|

||||

- awslambda_function_no_secrets_in_variables

|

||||

- cloudformation_stack_outputs_find_secrets

|

||||

- cloudformation_outputs_find_secrets

|

||||

- ec2_instance_secrets_user_data

|

||||

- ecs_task_definitions_no_environment_secrets

|

||||

- ssm_document_secrets

|

||||

|

||||

@@ -16,5 +16,4 @@ prowler <provider> -i

|

||||

|

||||

|

||||

|

||||

## Objections

|

||||

The inventorying process is done with `resourcegroupstaggingapi` calls, which means that only resources that have or have had tags will appear (except for the IAM and S3 resources, which are retrieved with Boto3 API calls).

|

||||

> The inventorying process is done with `resourcegroupstaggingapi` calls (except for the IAM resources which are done with Boto3 API calls.)

|

||||

|

||||

@@ -1,9 +1,9 @@

|

||||

# Reporting

|

||||

|

||||

By default, Prowler will generate a CSV, JSON, JSON-OCSF and a HTML report, however you could generate a JSON-ASFF (used by AWS Security Hub) report with `-M` or `--output-modes`:

|

||||

By default, Prowler will generate a CSV, JSON and a HTML report, however you could generate a JSON-ASFF (used by AWS Security Hub) report with `-M` or `--output-modes`:

|

||||

|

||||

```console

|

||||

prowler <provider> -M csv json json-ocsf json-asff html

|

||||

prowler <provider> -M csv json json-asff html

|

||||

```

|

||||

|

||||

## Custom Output Flags

|

||||

@@ -25,19 +25,13 @@ prowler <provider> -M csv json json-asff html -F <custom_report_name> -o <custom

|

||||

```

|

||||

## Send report to AWS S3 Bucket

|

||||

|

||||

To save your report in an S3 bucket, use `-B`/`--output-bucket`.

|

||||

|

||||

```sh

|

||||

prowler <provider> -B my-bucket/folder/

|

||||

```

|

||||

|

||||

By default, Prowler sends the HTML, JSON and CSV output formats. If you want to send a custom output format or a single one of the defaults, you can specify it with the `-M` flag.

|

||||

To save your report in an S3 bucket, use `-B`/`--output-bucket` to define a custom output bucket along with `-M` to define the output format that is going to be uploaded to S3:

|

||||

|

||||

```sh

|

||||

prowler <provider> -M csv -B my-bucket/folder/

|

||||

```

|

||||

|

||||

> In the case you do not want to use the assumed role credentials but the initial credentials to put the reports into the S3 bucket, use `-D`/`--output-bucket-no-assume` instead of `-B`/`--output-bucket`.

|

||||

> In the case you do not want to use the assumed role credentials but the initial credentials to put the reports into the S3 bucket, use `-D`/`--output-bucket-no-assume` instead of `-B`/`--output-bucket.

|

||||

|

||||

> Make sure that the used credentials have s3:PutObject permissions in the S3 path where the reports are going to be uploaded.

|

||||

|

||||

@@ -47,7 +41,6 @@ Prowler supports natively the following output formats:

|

||||

|

||||

- CSV

|

||||

- JSON

|

||||

- JSON-OCSF

|

||||

- JSON-ASFF

|

||||

- HTML

|

||||

|

||||

@@ -154,265 +147,6 @@ Hereunder is the structure for each of the supported report formats by Prowler:

|

||||

|

||||

> NOTE: Each finding is a `json` object.

|

||||

|

||||

### JSON-OCSF

|

||||

|

||||

Based on [Open Cybersecurity Schema Framework Security Finding v1.0.0-rc.3](https://schema.ocsf.io/1.0.0-rc.3/classes/security_finding?extensions=)

|

||||

|

||||

```

|

||||

[{

|

||||

"finding": {

|

||||

"title": "Check if ACM Certificates are about to expire in specific days or less",

|

||||

"desc": "Check if ACM Certificates are about to expire in specific days or less",

|

||||

"supporting_data": {

|

||||

"Risk": "Expired certificates can impact service availability.",

|

||||

"Notes": ""

|

||||

},

|

||||

"remediation": {

|

||||

"kb_articles": [

|

||||

"https://docs.aws.amazon.com/config/latest/developerguide/acm-certificate-expiration-check.html"

|

||||

],

|

||||

"desc": "Monitor certificate expiration and take automated action to renew; replace or remove. Having shorter TTL for any security artifact is a general recommendation; but requires additional automation in place. If not longer required delete certificate. Use AWS config using the managed rule: acm-certificate-expiration-check."

|

||||

},

|

||||

"types": [

|

||||

"Data Protection"

|

||||

],

|

||||

"src_url": "https://docs.aws.amazon.com/config/latest/developerguide/acm-certificate-expiration-check.html",

|

||||

"uid": "prowler-aws-acm_certificates_expiration_check-012345678912-eu-west-1-*.xxxxxxxxxxxxxx",

|

||||

"related_events": []

|

||||

},

|

||||

"resources": [

|

||||

{

|

||||

"group": {

|

||||

"name": "acm"

|

||||

},

|

||||

"region": "eu-west-1",

|

||||

"name": "xxxxxxxxxxxxxx",

|

||||

"uid": "arn:aws:acm:eu-west-1:012345678912:certificate/xxxxxxxxxxxxxx",

|

||||

"labels": [

|

||||

{

|

||||

"Key": "project",

|

||||

"Value": "prowler-pro"

|

||||

},

|

||||

{

|

||||

"Key": "environment",

|

||||

"Value": "dev"

|

||||

},

|

||||

{

|

||||

"Key": "terraform",

|

||||

"Value": "true"

|

||||

},

|

||||

{

|

||||

"Key": "terraform_state",

|

||||

"Value": "aws"

|

||||

}

|

||||

],

|

||||

"type": "AwsCertificateManagerCertificate",

|

||||

"details": ""

|

||||

}

|

||||

],

|

||||

"status_detail": "ACM Certificate for xxxxxxxxxxxxxx expires in 111 days.",

|

||||

"compliance": {

|

||||

"status": "Success",

|

||||

"requirements": [

|

||||

"CISA: ['your-data-2']",

|

||||

"SOC2: ['cc_6_7']",

|

||||

"MITRE-ATTACK: ['T1040']",

|

||||

"GDPR: ['article_32']",

|

||||

"HIPAA: ['164_308_a_4_ii_a', '164_312_e_1']",

|

||||

"AWS-Well-Architected-Framework-Security-Pillar: ['SEC09-BP01']",

|

||||

"NIST-800-171-Revision-2: ['3_13_1', '3_13_2', '3_13_8', '3_13_11']",

|

||||

"NIST-800-53-Revision-4: ['ac_4', 'ac_17_2', 'sc_12']",

|

||||

"NIST-800-53-Revision-5: ['sc_7_12', 'sc_7_16']",

|

||||

"NIST-CSF-1.1: ['ac_5', 'ds_2']",

|

||||

"RBI-Cyber-Security-Framework: ['annex_i_1_3']",

|

||||

"FFIEC: ['d3-pc-im-b-1']",

|

||||

"FedRamp-Moderate-Revision-4: ['ac-4', 'ac-17-2', 'sc-12']",

|

||||

"FedRAMP-Low-Revision-4: ['ac-17', 'sc-12']"

|

||||

],

|

||||

"status_detail": "ACM Certificate for xxxxxxxxxxxxxx expires in 111 days."

|

||||

},

|

||||

"message": "ACM Certificate for xxxxxxxxxxxxxx expires in 111 days.",

|

||||

"severity_id": 4,

|

||||

"severity": "High",

|

||||

"cloud": {

|

||||

"account": {

|

||||

"name": "",

|

||||

"uid": "012345678912"

|

||||

},

|

||||

"region": "eu-west-1",

|

||||

"org": {

|

||||

"uid": "",

|

||||

"name": ""

|

||||

},

|

||||

"provider": "aws",

|

||||

"project_uid": ""

|

||||

},

|

||||

"time": "2023-06-30 10:28:55.297615",

|

||||

"metadata": {

|

||||

"original_time": "2023-06-30T10:28:55.297615",

|

||||

"profiles": [

|

||||

"dev"

|

||||

],

|

||||

"product": {

|

||||

"language": "en",

|

||||

"name": "Prowler",

|

||||

"version": "3.6.1",

|

||||

"vendor_name": "Prowler/ProwlerPro",

|

||||

"feature": {

|

||||

"name": "acm_certificates_expiration_check",

|

||||

"uid": "acm_certificates_expiration_check",

|

||||

"version": "3.6.1"

|

||||

}

|

||||

},

|

||||

"version": "1.0.0-rc.3"

|

||||

},

|

||||

"state_id": 0,

|

||||

"state": "New",

|

||||

"status_id": 1,

|

||||

"status": "Success",

|

||||

"type_uid": 200101,

|

||||

"type_name": "Security Finding: Create",

|

||||

"impact_id": 0,

|

||||

"impact": "Unknown",

|

||||

"confidence_id": 0,

|

||||

"confidence": "Unknown",

|

||||

"activity_id": 1,

|

||||

"activity_name": "Create",

|

||||

"category_uid": 2,

|

||||

"category_name": "Findings",

|

||||

"class_uid": 2001,

|

||||

"class_name": "Security Finding"

|

||||

},{

|

||||

"finding": {

|

||||

"title": "Check if ACM Certificates are about to expire in specific days or less",

|

||||

"desc": "Check if ACM Certificates are about to expire in specific days or less",

|

||||

"supporting_data": {

|

||||

"Risk": "Expired certificates can impact service availability.",

|

||||

"Notes": ""

|

||||

},

|

||||

"remediation": {

|

||||

"kb_articles": [

|

||||

"https://docs.aws.amazon.com/config/latest/developerguide/acm-certificate-expiration-check.html"

|

||||

],

|

||||

"desc": "Monitor certificate expiration and take automated action to renew; replace or remove. Having shorter TTL for any security artifact is a general recommendation; but requires additional automation in place. If not longer required delete certificate. Use AWS config using the managed rule: acm-certificate-expiration-check."

|

||||

},

|

||||

"types": [

|

||||

"Data Protection"

|

||||

],

|

||||

"src_url": "https://docs.aws.amazon.com/config/latest/developerguide/acm-certificate-expiration-check.html",

|

||||

"uid": "prowler-aws-acm_certificates_expiration_check-012345678912-eu-west-1-xxxxxxxxxxxxx",

|

||||

"related_events": []

|

||||

},

|

||||

"resources": [

|

||||

{

|

||||

"group": {

|

||||

"name": "acm"

|

||||

},

|

||||

"region": "eu-west-1",

|

||||

"name": "xxxxxxxxxxxxx",

|

||||

"uid": "arn:aws:acm:eu-west-1:012345678912:certificate/3ea965a0-368d-4d13-95eb-5042a994edc4",

|

||||

"labels": [

|

||||

{

|

||||

"Key": "name",

|

||||

"Value": "prowler-pro-saas-dev-acm-internal-wildcard"

|

||||

},

|

||||

{

|

||||

"Key": "project",

|

||||

"Value": "prowler-pro-saas"

|

||||

},

|

||||

{

|

||||

"Key": "environment",

|

||||

"Value": "dev"

|

||||

},

|

||||

{

|

||||

"Key": "terraform",

|

||||

"Value": "true"

|

||||

},

|

||||

{

|

||||

"Key": "terraform_state",

|

||||

"Value": "aws/saas/base"

|

||||

}

|

||||

],

|

||||

"type": "AwsCertificateManagerCertificate",

|

||||

"details": ""

|

||||

}

|

||||

],

|

||||

"status_detail": "ACM Certificate for xxxxxxxxxxxxx expires in 119 days.",

|

||||

"compliance": {

|

||||

"status": "Success",

|

||||

"requirements": [

|

||||

"CISA: ['your-data-2']",

|

||||

"SOC2: ['cc_6_7']",

|

||||

"MITRE-ATTACK: ['T1040']",

|

||||

"GDPR: ['article_32']",

|

||||

"HIPAA: ['164_308_a_4_ii_a', '164_312_e_1']",

|

||||

"AWS-Well-Architected-Framework-Security-Pillar: ['SEC09-BP01']",

|

||||

"NIST-800-171-Revision-2: ['3_13_1', '3_13_2', '3_13_8', '3_13_11']",

|

||||

"NIST-800-53-Revision-4: ['ac_4', 'ac_17_2', 'sc_12']",

|

||||

"NIST-800-53-Revision-5: ['sc_7_12', 'sc_7_16']",

|

||||

"NIST-CSF-1.1: ['ac_5', 'ds_2']",

|

||||

"RBI-Cyber-Security-Framework: ['annex_i_1_3']",

|

||||

"FFIEC: ['d3-pc-im-b-1']",

|

||||

"FedRamp-Moderate-Revision-4: ['ac-4', 'ac-17-2', 'sc-12']",

|

||||

"FedRAMP-Low-Revision-4: ['ac-17', 'sc-12']"

|

||||

],

|

||||

"status_detail": "ACM Certificate for xxxxxxxxxxxxx expires in 119 days."

|

||||

},

|

||||

"message": "ACM Certificate for xxxxxxxxxxxxx expires in 119 days.",

|

||||

"severity_id": 4,

|

||||

"severity": "High",

|

||||

"cloud": {

|

||||

"account": {

|

||||

"name": "",

|

||||

"uid": "012345678912"

|

||||

},

|

||||

"region": "eu-west-1",

|

||||

"org": {

|

||||

"uid": "",

|

||||

"name": ""

|

||||

},

|