Compare commits

29 Commits

| SHA1 |
|---|
| 0fa725d2d0 |
| 2580d820ec |
| 3f3888f499 |
| b20a532ab5 |
| b11c9991a3 |
| fd9cbe6ba3 |
| 227b1db493 |
| 10d8c12913 |
| d9a1cc333e |
| d1670c8347 |
| b03bc8fc0b |
| 79c975ab9c |
| 68cf285fb9 |
| 36ba0ffb1f |
| d2b1818700 |
| 2df02acbd8 |
| 7fd11b2f0e |
| 47bab87603 |
| 1835e1df98 |
| dc88b033a0 |
| 0f784e34db |
| 698f299edf |
| 172bb36dfb |
| 4b1e6c7493 |
| 6d5c277c73 |
| 2d4b7ce499 |
| 3ee3a3b2f1 |
| e9e6ff0e24 |
| e0c6de76d4 |

.github/CODEOWNERS (2 changes)

@@ -1 +1 @@
* @prowler-cloud/prowler-oss @prowler-cloud/prowler-dev
* @prowler-cloud/prowler-oss

.github/ISSUE_TEMPLATE/feature-request.yml (2 changes)

@@ -1,6 +1,6 @@
name: 💡 Feature Request
description: Suggest an idea for this project
labels: ["feature-request", "status/needs-triage"]
labels: ["enhancement", "status/needs-triage"]

body:

.github/dependabot.yml (31 changes)

@@ -5,38 +5,11 @@
version: 2
updates:
- package-ecosystem: "pip"
directory: "/"
- package-ecosystem: "pip" # See documentation for possible values
directory: "/" # Location of package manifests
schedule:
interval: "weekly"
open-pull-requests-limit: 10
target-branch: master
labels:
- "dependencies"
- "pip"
- package-ecosystem: "github-actions"
directory: "/"
schedule:
interval: "weekly"
open-pull-requests-limit: 10
target-branch: master

- package-ecosystem: "pip"
directory: "/"
schedule:
interval: "weekly"
open-pull-requests-limit: 10
target-branch: v3
labels:
- "dependencies"
- "pip"
- "v3"
- package-ecosystem: "github-actions"
directory: "/"
schedule:
interval: "weekly"
open-pull-requests-limit: 10
target-branch: v3
labels:
- "github_actions"
- "v3"

.github/labeler.yml (27 changes)

@@ -1,27 +0,0 @@
documentation:
- changed-files:
- any-glob-to-any-file: "docs/**"

provider/aws:
- changed-files:
- any-glob-to-any-file: "prowler/providers/aws/**"
- any-glob-to-any-file: "tests/providers/aws/**"

provider/azure:
- changed-files:
- any-glob-to-any-file: "prowler/providers/azure/**"
- any-glob-to-any-file: "tests/providers/azure/**"

provider/gcp:
- changed-files:
- any-glob-to-any-file: "prowler/providers/gcp/**"
- any-glob-to-any-file: "tests/providers/gcp/**"

provider/kubernetes:
- changed-files:
- any-glob-to-any-file: "prowler/providers/kubernetes/**"
- any-glob-to-any-file: "tests/providers/kubernetes/**"

github_actions:
- changed-files:
- any-glob-to-any-file: ".github/workflows/*"

.github/workflows/build-documentation-on-pr.yml (24 changes)

@@ -1,24 +0,0 @@
name: Pull Request Documentation Link

on:
pull_request:
branches:
- 'master'
- 'v3'
paths:
- 'docs/**'

env:
PR_NUMBER: ${{ github.event.pull_request.number }}

jobs:
documentation-link:
name: Documentation Link
runs-on: ubuntu-latest
steps:
- name: Leave PR comment with the SaaS Documentation URI
uses: peter-evans/create-or-update-comment@v4
with:
issue-number: ${{ env.PR_NUMBER }}
body: |
You can check the documentation for this PR here -> [SaaS Documentation](https://prowler-prowler-docs--${{ env.PR_NUMBER }}.com.readthedocs.build/projects/prowler-open-source/en/${{ env.PR_NUMBER }}/)

.github/workflows/build-lint-push-containers.yml (113 changes)

@@ -3,7 +3,6 @@ name: build-lint-push-containers
on:
push:
branches:
- "v3"
- "master"
paths-ignore:
- ".github/**"

@@ -14,98 +13,52 @@ on:
types: [published]

env:
# AWS Configuration
AWS_REGION_STG: eu-west-1
AWS_REGION_PLATFORM: eu-west-1
AWS_REGION: us-east-1

# Container's configuration
IMAGE_NAME: prowler
DOCKERFILE_PATH: ./Dockerfile

# Tags
LATEST_TAG: latest
STABLE_TAG: stable
# The RELEASE_TAG is set during runtime in releases
RELEASE_TAG: ""
# The PROWLER_VERSION and PROWLER_VERSION_MAJOR are set during runtime in releases
PROWLER_VERSION: ""
PROWLER_VERSION_MAJOR: ""
# TEMPORARY_TAG: temporary

# Python configuration
PYTHON_VERSION: 3.12
TEMPORARY_TAG: temporary
DOCKERFILE_PATH: ./Dockerfile
PYTHON_VERSION: 3.9

jobs:
# Build Prowler OSS container
container-build-push:
# needs: dockerfile-linter
runs-on: ubuntu-latest
outputs:
prowler_version_major: ${{ steps.get-prowler-version.outputs.PROWLER_VERSION_MAJOR }}
prowler_version: ${{ steps.update-prowler-version.outputs.PROWLER_VERSION }}
env:
POETRY_VIRTUALENVS_CREATE: "false"

steps:
- name: Checkout
uses: actions/checkout@v4
uses: actions/checkout@v3

- name: Setup Python
uses: actions/setup-python@v5
- name: Setup python (release)
if: github.event_name == 'release'
uses: actions/setup-python@v2
with:
python-version: ${{ env.PYTHON_VERSION }}

- name: Install Poetry
- name: Install dependencies (release)
if: github.event_name == 'release'
run: |
pipx install poetry
pipx inject poetry poetry-bumpversion

- name: Get Prowler version
id: get-prowler-version
run: |
PROWLER_VERSION="$(poetry version -s 2>/dev/null)"

# Store prowler version major just for the release
PROWLER_VERSION_MAJOR="${PROWLER_VERSION%%.*}"
echo "PROWLER_VERSION_MAJOR=${PROWLER_VERSION_MAJOR}" >> "${GITHUB_ENV}"
echo "PROWLER_VERSION_MAJOR=${PROWLER_VERSION_MAJOR}" >> "${GITHUB_OUTPUT}"

case ${PROWLER_VERSION_MAJOR} in
3)
echo "LATEST_TAG=v3-latest" >> "${GITHUB_ENV}"
echo "STABLE_TAG=v3-stable" >> "${GITHUB_ENV}"
;;

4)
echo "LATEST_TAG=latest" >> "${GITHUB_ENV}"
echo "STABLE_TAG=stable" >> "${GITHUB_ENV}"
;;

*)
# Fallback if any other version is present
echo "Releasing another Prowler major version, aborting..."
exit 1
;;
esac

- name: Update Prowler version (release)
id: update-prowler-version
if: github.event_name == 'release'
run: |
PROWLER_VERSION="${{ github.event.release.tag_name }}"
poetry version "${PROWLER_VERSION}"
echo "PROWLER_VERSION=${PROWLER_VERSION}" >> "${GITHUB_ENV}"
echo "PROWLER_VERSION=${PROWLER_VERSION}" >> "${GITHUB_OUTPUT}"

poetry version ${{ github.event.release.tag_name }}

- name: Login to DockerHub
uses: docker/login-action@v3
uses: docker/login-action@v2
with:
username: ${{ secrets.DOCKERHUB_USERNAME }}
password: ${{ secrets.DOCKERHUB_TOKEN }}

- name: Login to Public ECR
uses: docker/login-action@v3
uses: docker/login-action@v2
with:
registry: public.ecr.aws
username: ${{ secrets.PUBLIC_ECR_AWS_ACCESS_KEY_ID }}

@@ -114,32 +67,32 @@ jobs:
AWS_REGION: ${{ env.AWS_REGION }}

- name: Set up Docker Buildx
uses: docker/setup-buildx-action@v3
uses: docker/setup-buildx-action@v2

- name: Build and push container image (latest)
- name: Build container image (latest)
if: github.event_name == 'push'
uses: docker/build-push-action@v5
uses: docker/build-push-action@v2
with:
push: true
tags: |
${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.LATEST_TAG }}
${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.LATEST_TAG }}
file: ${{ env.DOCKERFILE_PATH }}
file: ${{ env.DOCKERFILE_PATH }}
cache-from: type=gha
cache-to: type=gha,mode=max

- name: Build and push container image (release)
- name: Build container image (release)
if: github.event_name == 'release'
uses: docker/build-push-action@v5
uses: docker/build-push-action@v2
with:
# Use local context to get changes
# https://github.com/docker/build-push-action#path-context
context: .
push: true
tags: |
${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.PROWLER_VERSION }}
${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ github.event.release.tag_name }}
${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.STABLE_TAG }}
${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.PROWLER_VERSION }}
${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ github.event.release.tag_name }}
${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.STABLE_TAG }}
file: ${{ env.DOCKERFILE_PATH }}
cache-from: type=gha

@@ -149,26 +102,16 @@ jobs:
needs: container-build-push
runs-on: ubuntu-latest
steps:
- name: Get latest commit info (latest)
- name: Get latest commit info
if: github.event_name == 'push'
run: |
LATEST_COMMIT_HASH=$(echo ${{ github.event.after }} | cut -b -7)
echo "LATEST_COMMIT_HASH=${LATEST_COMMIT_HASH}" >> $GITHUB_ENV

- name: Dispatch event (latest)
if: github.event_name == 'push' && needs.container-build-push.outputs.prowler_version_major == '3'
- name: Dispatch event for latest
if: github.event_name == 'push'
run: |
curl https://api.github.com/repos/${{ secrets.DISPATCH_OWNER }}/${{ secrets.DISPATCH_REPO }}/dispatches \
-H "Accept: application/vnd.github+json" \
-H "Authorization: Bearer ${{ secrets.ACCESS_TOKEN }}" \
-H "X-GitHub-Api-Version: 2022-11-28" \
--data '{"event_type":"dispatch","client_payload":{"version":"v3-latest", "tag": "${{ env.LATEST_COMMIT_HASH }}"}}'

- name: Dispatch event (release)
if: github.event_name == 'release' && needs.container-build-push.outputs.prowler_version_major == '3'
curl https://api.github.com/repos/${{ secrets.DISPATCH_OWNER }}/${{ secrets.DISPATCH_REPO }}/dispatches -H "Accept: application/vnd.github+json" -H "Authorization: Bearer ${{ secrets.ACCESS_TOKEN }}" -H "X-GitHub-Api-Version: 2022-11-28" --data '{"event_type":"dispatch","client_payload":{"version":"latest", "tag": "${{ env.LATEST_COMMIT_HASH }}"}}'
- name: Dispatch event for release
if: github.event_name == 'release'
run: |
curl https://api.github.com/repos/${{ secrets.DISPATCH_OWNER }}/${{ secrets.DISPATCH_REPO }}/dispatches \
-H "Accept: application/vnd.github+json" \
-H "Authorization: Bearer ${{ secrets.ACCESS_TOKEN }}" \
-H "X-GitHub-Api-Version: 2022-11-28" \
--data '{"event_type":"dispatch","client_payload":{"version":"release", "tag":"${{ needs.container-build-push.outputs.prowler_version }}"}}'
curl https://api.github.com/repos/${{ secrets.DISPATCH_OWNER }}/${{ secrets.DISPATCH_REPO }}/dispatches -H "Accept: application/vnd.github+json" -H "Authorization: Bearer ${{ secrets.ACCESS_TOKEN }}" -H "X-GitHub-Api-Version: 2022-11-28" --data '{"event_type":"dispatch","client_payload":{"version":"release", "tag":"${{ github.event.release.tag_name }}"}}'
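The dispatch steps in this workflow send a GitHub `repository_dispatch` event with `curl`; on pushes the `tag` is the first seven characters of `github.event.after` (`cut -b -7`). As an illustration only (not code from this repository), the same request could be assembled in Python with the standard library. `short_sha` and `build_dispatch_request` are hypothetical helper names, the owner, repo and token values are placeholders, and the request is built but not sent.

```python
import json
import urllib.request

GITHUB_API = "https://api.github.com"


def short_sha(sha: str) -> str:
    """Keep the first seven characters, like `cut -b -7` in the workflow."""
    return sha[:7]


def build_dispatch_request(owner: str, repo: str, token: str,
                           version: str, tag: str) -> urllib.request.Request:
    """Build (but do not send) a POST to the repository_dispatch endpoint.

    The JSON body mirrors the workflow's --data argument.
    """
    payload = {
        "event_type": "dispatch",
        "client_payload": {"version": version, "tag": tag},
    }
    return urllib.request.Request(
        url=f"{GITHUB_API}/repos/{owner}/{repo}/dispatches",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "X-GitHub-Api-Version": "2022-11-28",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder values; the workflow reads these from repository secrets.
    request = build_dispatch_request(
        owner="example-owner",
        repo="example-repo",
        token="example-token",
        version="v3-latest",
        tag=short_sha("0123456789abcdef0123456789abcdef01234567"),
    )
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
    # To actually send it: urllib.request.urlopen(request)
```

Keeping construction separate from sending makes the payload easy to inspect or test without network access or a real token.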

.github/workflows/codeql.yml (10 changes)

@@ -13,10 +13,10 @@ name: "CodeQL"
on:
push:
branches: [ "master", "v3" ]
branches: [ "master", prowler-2, prowler-3.0-dev ]
pull_request:
# The branches below must be a subset of the branches above
branches: [ "master", "v3" ]
branches: [ "master" ]
schedule:
- cron: '00 12 * * *'

@@ -37,11 +37,11 @@ jobs:
steps:
- name: Checkout repository
uses: actions/checkout@v4
uses: actions/checkout@v3

# Initializes the CodeQL tools for scanning.
- name: Initialize CodeQL
uses: github/codeql-action/init@v3
uses: github/codeql-action/init@v2
with:
languages: ${{ matrix.language }}
# If you wish to specify custom queries, you can do so here or in a config file.

@@ -52,6 +52,6 @@ jobs:
# queries: security-extended,security-and-quality

- name: Perform CodeQL Analysis
uses: github/codeql-action/analyze@v3
uses: github/codeql-action/analyze@v2
with:
category: "/language:${{matrix.language}}"

.github/workflows/find-secrets.yml (5 changes)

@@ -7,13 +7,12 @@ jobs:
runs-on: ubuntu-latest
steps:
- name: Checkout
uses: actions/checkout@v4
uses: actions/checkout@v3
with:
fetch-depth: 0
- name: TruffleHog OSS
uses: trufflesecurity/trufflehog@v3.73.0
uses: trufflesecurity/trufflehog@v3.4.4
with:
path: ./
base: ${{ github.event.repository.default_branch }}
head: HEAD
extra_args: --only-verified

.github/workflows/labeler.yml (16 changes)

@@ -1,16 +0,0 @@
name: "Pull Request Labeler"

on:
pull_request_target:
branches:
- "master"
- "v3"

jobs:
labeler:
permissions:
contents: read
pull-requests: write
runs-on: ubuntu-latest
steps:
- uses: actions/labeler@v5

.github/workflows/pull-request.yml (45 changes)

@@ -4,44 +4,29 @@ on:
push:
branches:
- "master"
- "v3"
pull_request:
branches:
- "master"
- "v3"

jobs:
build:
runs-on: ubuntu-latest
strategy:
matrix:
python-version: ["3.9", "3.10", "3.11", "3.12"]
python-version: ["3.9"]

steps:
- uses: actions/checkout@v4
- name: Test if changes are in not ignored paths
id: are-non-ignored-files-changed
uses: tj-actions/changed-files@v44
with:
files: ./**
files_ignore: |
.github/**
README.md
docs/**
permissions/**
mkdocs.yml
- uses: actions/checkout@v3
- name: Install poetry
if: steps.are-non-ignored-files-changed.outputs.any_changed == 'true'
run: |
python -m pip install --upgrade pip
pipx install poetry
python -m pip install --upgrade pip
pipx install poetry
- name: Set up Python ${{ matrix.python-version }}
if: steps.are-non-ignored-files-changed.outputs.any_changed == 'true'
uses: actions/setup-python@v5
uses: actions/setup-python@v4
with:
python-version: ${{ matrix.python-version }}
cache: "poetry"
cache: 'poetry'
- name: Install dependencies
if: steps.are-non-ignored-files-changed.outputs.any_changed == 'true'
run: |
poetry install
poetry run pip list

@@ -51,43 +36,29 @@ jobs:
) && curl -L -o /tmp/hadolint "https://github.com/hadolint/hadolint/releases/download/v${VERSION}/hadolint-Linux-x86_64" \
&& chmod +x /tmp/hadolint
- name: Poetry check
if: steps.are-non-ignored-files-changed.outputs.any_changed == 'true'
run: |
poetry lock --check
- name: Lint with flake8
if: steps.are-non-ignored-files-changed.outputs.any_changed == 'true'
run: |
poetry run flake8 . --ignore=E266,W503,E203,E501,W605,E128 --exclude contrib
- name: Checking format with black
if: steps.are-non-ignored-files-changed.outputs.any_changed == 'true'
run: |
poetry run black --check .
- name: Lint with pylint
if: steps.are-non-ignored-files-changed.outputs.any_changed == 'true'
run: |
poetry run pylint --disable=W,C,R,E -j 0 -rn -sn prowler/
- name: Bandit
if: steps.are-non-ignored-files-changed.outputs.any_changed == 'true'
run: |
poetry run bandit -q -lll -x '*_test.py,./contrib/' -r .
- name: Safety
if: steps.are-non-ignored-files-changed.outputs.any_changed == 'true'
run: |
poetry run safety check
- name: Vulture
if: steps.are-non-ignored-files-changed.outputs.any_changed == 'true'
run: |
poetry run vulture --exclude "contrib" --min-confidence 100 .
- name: Hadolint
if: steps.are-non-ignored-files-changed.outputs.any_changed == 'true'
run: |
/tmp/hadolint Dockerfile --ignore=DL3013
- name: Test with pytest
if: steps.are-non-ignored-files-changed.outputs.any_changed == 'true'
run: |
poetry run pytest -n auto --cov=./prowler --cov-report=xml tests
- name: Upload coverage reports to Codecov
if: steps.are-non-ignored-files-changed.outputs.any_changed == 'true'
uses: codecov/codecov-action@v4
env:
CODECOV_TOKEN: ${{ secrets.CODECOV_TOKEN }}
poetry run pytest tests -n auto
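The `if: steps.are-non-ignored-files-changed.outputs.any_changed == 'true'` conditions in pull-request.yml skip the lint and test steps unless at least one changed file falls outside the `files_ignore` list. The Python sketch below only approximates that gate for illustration: `any_non_ignored_changed` is a hypothetical helper, and `fnmatch` does not reproduce the exact glob semantics of `tj-actions/changed-files` (its `*` also matches `/`, for example).

```python
from fnmatch import fnmatchcase

# Patterns copied from the files_ignore list in pull-request.yml.
IGNORED_PATTERNS = [".github/**", "README.md", "docs/**", "permissions/**", "mkdocs.yml"]


def any_non_ignored_changed(changed_files: list[str]) -> bool:
    """Approximate the workflow's `any_changed` output.

    Returns True if at least one changed path matches none of the ignore
    patterns. fnmatchcase is used so matching is case-sensitive on every OS.
    """
    return any(
        not any(fnmatchcase(path, pattern) for pattern in IGNORED_PATTERNS)
        for path in changed_files
    )


if __name__ == "__main__":
    print(any_non_ignored_changed(["docs/index.md", "README.md"]))
    print(any_non_ignored_changed(["docs/index.md", "prowler/__main__.py"]))
```

A documentation-only change therefore leaves every gated step skipped, while any change under `prowler/` runs the full lint and test pipeline.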

.github/workflows/pypi-release.yml (93 changes)

@@ -6,10 +6,6 @@ on:
env:
RELEASE_TAG: ${{ github.event.release.tag_name }}
PYTHON_VERSION: 3.11
CACHE: "poetry"
# TODO: create a bot user for this kind of tasks, like prowler-bot
GIT_COMMITTER_EMAIL: "sergio@prowler.com"

jobs:
release-prowler-job:

@@ -18,81 +14,64 @@ jobs:
POETRY_VIRTUALENVS_CREATE: "false"
name: Release Prowler to PyPI
steps:
- name: Get Prowler version
run: |
PROWLER_VERSION="${{ env.RELEASE_TAG }}"

case ${PROWLER_VERSION%%.*} in
3)
echo "Releasing Prowler v3 with tag ${PROWLER_VERSION}"
;;
4)
echo "Releasing Prowler v4 with tag ${PROWLER_VERSION}"
;;
*)
echo "Releasing another Prowler major version, aborting..."
exit 1
;;
esac

- uses: actions/checkout@v4
# Checks-out your repository under $GITHUB_WORKSPACE, so your job can access it
- uses: actions/checkout@v3

- name: Install dependencies
run: |
pipx install poetry
pipx inject poetry poetry-bumpversion

- name: Setup Python
uses: actions/setup-python@v5
- name: setup python
uses: actions/setup-python@v4
with:
python-version: ${{ env.PYTHON_VERSION }}
cache: ${{ env.CACHE }}

- name: Update Poetry and config version
python-version: 3.9
cache: 'poetry'
- name: Change version and Build package
run: |
poetry version ${{ env.RELEASE_TAG }}

- name: Import GPG key
uses: crazy-max/ghaction-import-gpg@v6
with:
gpg_private_key: ${{ secrets.GPG_PRIVATE_KEY }}
passphrase: ${{ secrets.GPG_PASSPHRASE }}
git_user_signingkey: true
git_commit_gpgsign: true

- name: Push updated version to the release tag
run: |
# Configure Git
git config user.name "github-actions"
git config user.email "${{ env.GIT_COMMITTER_EMAIL }}"

# Add the files with the version changed
git config user.email "<noreply@github.com>"
git add prowler/config/config.py pyproject.toml
git commit -m "chore(release): ${{ env.RELEASE_TAG }}" --no-verify -S

# Replace the tag with the version updated
git tag -fa ${{ env.RELEASE_TAG }} -m "chore(release): ${{ env.RELEASE_TAG }}" --sign

# Push the tag
git commit -m "chore(release): ${{ env.RELEASE_TAG }}" --no-verify
git tag -fa ${{ env.RELEASE_TAG }} -m "chore(release): ${{ env.RELEASE_TAG }}"
git push -f origin ${{ env.RELEASE_TAG }}

- name: Build Prowler package
run: |
poetry build

- name: Publish Prowler package to PyPI
- name: Publish prowler package to PyPI
run: |
poetry config pypi-token.pypi ${{ secrets.PYPI_API_TOKEN }}
poetry publish
# Create pull request with new version
- name: Create Pull Request
uses: peter-evans/create-pull-request@v4
with:
token: ${{ secrets.PROWLER_ACCESS_TOKEN }}
commit-message: "chore(release): update Prowler Version to ${{ env.RELEASE_TAG }}."
branch: release-${{ env.RELEASE_TAG }}
labels: "status/waiting-for-revision, severity/low"
title: "chore(release): update Prowler Version to ${{ env.RELEASE_TAG }}"
body: |
### Description

- name: Replicate PyPI package
This PR updates Prowler Version to ${{ env.RELEASE_TAG }}.

### License

By submitting this pull request, I confirm that my contribution is made under the terms of the Apache 2.0 license.
- name: Replicate PyPi Package
run: |
rm -rf ./dist && rm -rf ./build && rm -rf prowler.egg-info
pip install toml
python util/replicate_pypi_package.py
poetry build

- name: Publish prowler-cloud package to PyPI
run: |
poetry config pypi-token.pypi ${{ secrets.PYPI_API_TOKEN }}
poetry publish
# Create pull request to github.com/Homebrew/homebrew-core to update prowler formula
- name: Bump Homebrew formula
uses: mislav/bump-homebrew-formula-action@v2
with:
formula-name: prowler
base-branch: release-${{ env.RELEASE_TAG }}
env:
COMMITTER_TOKEN: ${{ secrets.PROWLER_ACCESS_TOKEN }}
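The `Get Prowler version` steps in pypi-release.yml and build-lint-push-containers.yml take the major version with the shell expansion `${PROWLER_VERSION%%.*}`, which deletes the longest suffix matching `.*`, i.e. everything from the first dot. In the container workflow, major 3 selects the `v3-latest`/`v3-stable` image tags and major 4 selects `latest`/`stable`; both workflows abort on any other major. A minimal Python sketch of that logic, for illustration only; `major_version` and `image_tags_for` are hypothetical names, not part of Prowler.

```python
def major_version(version: str) -> str:
    """Mimic ${VERSION%%.*}: drop everything from the first '.' onwards."""
    return version.split(".", 1)[0]


# Tag pairs taken from the case statement in build-lint-push-containers.yml.
TAGS_BY_MAJOR = {
    "3": ("v3-latest", "v3-stable"),
    "4": ("latest", "stable"),
}


def image_tags_for(version: str) -> tuple[str, str]:
    """Return (LATEST_TAG, STABLE_TAG) for a Prowler version string.

    Any other major version raises SystemExit, mirroring the workflow's
    `*)` branch, which prints a message and runs `exit 1`.
    """
    try:
        return TAGS_BY_MAJOR[major_version(version)]
    except KeyError:
        raise SystemExit("Releasing another Prowler major version, aborting...") from None


if __name__ == "__main__":
    for v in ("3.16.2", "4.0.0"):
        print(v, image_tags_for(v))
```

Like the shell version, a tag with a prefix such as `v4.0.0` yields the major `v4` and is rejected, so release tags must be bare version numbers.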

@@ -23,12 +23,12 @@ jobs:
# Steps represent a sequence of tasks that will be executed as part of the job
steps:
# Checks-out your repository under $GITHUB_WORKSPACE, so your job can access it
- uses: actions/checkout@v4
- uses: actions/checkout@v3
with:
ref: ${{ env.GITHUB_BRANCH }}

- name: setup python
uses: actions/setup-python@v5
uses: actions/setup-python@v2
with:
python-version: 3.9 #install the python needed

@@ -38,7 +38,7 @@ jobs:
pip install boto3

- name: Configure AWS Credentials -- DEV
uses: aws-actions/configure-aws-credentials@v4
uses: aws-actions/configure-aws-credentials@v1
with:
aws-region: ${{ env.AWS_REGION_DEV }}
role-to-assume: ${{ secrets.DEV_IAM_ROLE_ARN }}

@@ -50,12 +50,12 @@ jobs:
# Create pull request
- name: Create Pull Request
uses: peter-evans/create-pull-request@v6
uses: peter-evans/create-pull-request@v4
with:
token: ${{ secrets.PROWLER_ACCESS_TOKEN }}
commit-message: "feat(regions_update): Update regions for AWS services."
branch: "aws-services-regions-updated-${{ github.sha }}"
labels: "status/waiting-for-revision, severity/low, provider/aws, backport-v3"
labels: "status/waiting-for-revision, severity/low"
title: "chore(regions_update): Changes in regions for AWS services."
body: |
### Description

.gitignore (11 changes)

@@ -9,9 +9,8 @@
__pycache__
venv/
build/
/dist/
dist/
*.egg-info/
*/__pycache__/*.pyc

# Session
Session.vim

@@ -47,11 +46,3 @@ junit-reports/
# .env
.env*

# Coverage
.coverage*
.coverage
coverage*

# Node
node_modules

@@ -1,7 +1,7 @@
repos:
## GENERAL
- repo: https://github.com/pre-commit/pre-commit-hooks
rev: v4.5.0
rev: v4.4.0
hooks:
- id: check-merge-conflict
- id: check-yaml

@@ -15,7 +15,7 @@ repos:
## TOML
- repo: https://github.com/macisamuele/language-formatters-pre-commit-hooks
rev: v2.12.0
rev: v2.7.0
hooks:
- id: pretty-format-toml
args: [--autofix]

@@ -26,10 +26,9 @@ repos:
rev: v0.9.0
hooks:
- id: shellcheck
exclude: contrib
## PYTHON
- repo: https://github.com/myint/autoflake
rev: v2.2.1
rev: v2.0.1
hooks:
- id: autoflake
args:

@@ -40,29 +39,28 @@ repos:
]

- repo: https://github.com/timothycrosley/isort
rev: 5.13.2
rev: 5.12.0
hooks:
- id: isort
args: ["--profile", "black"]

- repo: https://github.com/psf/black
rev: 24.1.1
rev: 23.1.0
hooks:
- id: black

- repo: https://github.com/pycqa/flake8
rev: 7.0.0
rev: 6.0.0
hooks:
- id: flake8
exclude: contrib
args: ["--ignore=E266,W503,E203,E501,W605"]

- repo: https://github.com/python-poetry/poetry
rev: 1.7.0
rev: 1.4.0 # add version here
hooks:
- id: poetry-check
- id: poetry-lock
args: ["--no-update"]

- repo: https://github.com/hadolint/hadolint
rev: v2.12.1-beta

@@ -76,23 +74,17 @@ repos:
name: pylint
entry: bash -c 'pylint --disable=W,C,R,E -j 0 -rn -sn prowler/'
language: system
files: '.*\.py'

- id: trufflehog
name: TruffleHog
description: Detect secrets in your data.
entry: bash -c 'trufflehog --no-update git file://. --only-verified --fail'
# For running trufflehog in docker, use the following entry instead:
# entry: bash -c 'docker run -v "$(pwd):/workdir" -i --rm trufflesecurity/trufflehog:latest git file:///workdir --only-verified --fail'
- id: pytest-check
name: pytest-check
entry: bash -c 'pytest tests -n auto'
language: system
stages: ["commit", "push"]

- id: bandit
name: bandit
description: "Bandit is a tool for finding common security issues in Python code"
entry: bash -c 'bandit -q -lll -x '*_test.py,./contrib/' -r .'
language: system
files: '.*\.py'

- id: safety
name: safety

@@ -105,4 +97,3 @@ repos:
description: "Vulture finds unused code in Python programs."
entry: bash -c 'vulture --exclude "contrib" --min-confidence 100 .'
language: system
files: '.*\.py'

@@ -8,18 +8,16 @@ version: 2
build:
os: "ubuntu-22.04"
tools:
python: "3.11"
python: "3.9"
jobs:
post_create_environment:
# Install poetry
# https://python-poetry.org/docs/#installing-manually
- python -m pip install poetry
- pip install poetry
# Tell poetry to not use a virtual environment
- poetry config virtualenvs.create false
post_install:
# Install dependencies with 'docs' dependency group
# https://python-poetry.org/docs/managing-dependencies/#dependency-groups
# VIRTUAL_ENV needs to be set manually for now.
# See https://github.com/readthedocs/readthedocs.org/pull/11152/
- VIRTUAL_ENV=${READTHEDOCS_VIRTUALENV_PATH} python -m poetry install --only=docs
- poetry install -E docs

mkdocs:
configuration: mkdocs.yml

@@ -55,7 +55,7 @@ further defined and clarified by project maintainers.
## Enforcement

Instances of abusive, harassing, or otherwise unacceptable behavior may be
reported by contacting the project team at [support.prowler.com](https://customer.support.prowler.com/servicedesk/customer/portals). All
reported by contacting the project team at community@prowler.cloud. All
complaints will be reviewed and investigated and will result in a response that
is deemed necessary and appropriate to the circumstances. The project team is
obligated to maintain confidentiality with regard to the reporter of an incident.

@@ -1,13 +0,0 @@
# Do you want to learn on how to...

- Contribute with your code or fixes to Prowler
- Create a new check for a provider
- Create a new security compliance framework
- Add a custom output format
- Add a new integration
- Contribute with documentation

Want some swag as appreciation for your contribution?

# Prowler Developer Guide
https://docs.prowler.com/projects/prowler-open-source/en/latest/developer-guide/introduction/

Dockerfile (12 changes)

@@ -1,10 +1,9 @@
FROM python:3.12-alpine
FROM python:3.9-alpine

LABEL maintainer="https://github.com/prowler-cloud/prowler"

# Update system dependencies
#hadolint ignore=DL3018
RUN apk --no-cache upgrade && apk --no-cache add curl
RUN apk --no-cache upgrade

# Create nonroot user
RUN mkdir -p /home/prowler && \

@@ -15,8 +14,7 @@ USER prowler
# Copy necessary files
WORKDIR /home/prowler
COPY prowler/ /home/prowler/prowler/
COPY dashboard/ /home/prowler/dashboard/
COPY prowler/ /home/prowler/prowler/
COPY pyproject.toml /home/prowler
COPY README.md /home/prowler

@@ -27,10 +25,6 @@ ENV PATH="$HOME/.local/bin:$PATH"
RUN pip install --no-cache-dir --upgrade pip && \
pip install --no-cache-dir .

# Remove deprecated dash dependencies
RUN pip uninstall dash-html-components -y && \
pip uninstall dash-core-components -y

# Remove Prowler directory and build files
USER 0
RUN rm -rf /home/prowler/prowler /home/prowler/pyproject.toml /home/prowler/README.md /home/prowler/build /home/prowler/prowler.egg-info

2

LICENSE

@@ -186,7 +186,7 @@

same "printed page" as the copyright notice for easier

identification within third-party archives.

Copyright @ 2024 Toni de la Fuente

Copyright 2018 Netflix, Inc.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

13

Makefile

@@ -2,19 +2,12 @@

##@ Testing

test: ## Test with pytest

rm -rf .coverage && \

pytest -n auto -vvv -s --cov=./prowler --cov-report=xml tests

pytest -n auto -vvv -s -x

coverage: ## Show Test Coverage

coverage run --skip-covered -m pytest -v && \

coverage report -m && \

rm -rf .coverage && \

coverage report -m

coverage-html: ## Show Test Coverage

rm -rf ./htmlcov && \

coverage html && \

open htmlcov/index.html

rm -rf .coverage

##@ Linting

format: ## Format Code

@@ -27,7 +20,7 @@ lint: ## Lint Code

@echo "Running black... "

black --check .

@echo "Running pylint..."

pylint --disable=W,C,R,E -j 0 prowler util

pylint --disable=W,C,R,E -j 0 providers lib util config

##@ PyPI

pypi-clean: ## Delete the distribution files

236

README.md

@@ -1,31 +1,25 @@

<p align="center">

<img align="center" src="https://github.com/prowler-cloud/prowler/blob/master/docs/img/prowler-logo-black.png?raw=True#gh-light-mode-only" width="350" height="115">

<img align="center" src="https://github.com/prowler-cloud/prowler/blob/master/docs/img/prowler-logo-white.png?raw=True#gh-dark-mode-only" width="350" height="115">

<img align="center" src="https://github.com/prowler-cloud/prowler/blob/62c1ce73bbcdd6b9e5ba03dfcae26dfd165defd9/docs/img/prowler-pro-dark.png?raw=True#gh-dark-mode-only" width="150" height="36">

<img align="center" src="https://github.com/prowler-cloud/prowler/blob/62c1ce73bbcdd6b9e5ba03dfcae26dfd165defd9/docs/img/prowler-pro-light.png?raw=True#gh-light-mode-only" width="15%" height="15%">

</p>

<p align="center">

<b><i>Prowler SaaS</i></b> and <b>Prowler Open Source</b> are as dynamic and adaptable as the environment they’re meant to protect. Trusted by the leaders in security.

<b><i>See all the things you and your team can do with ProwlerPro at <a href="https://prowler.pro">prowler.pro</a></i></b>

</p>

<p align="center">

<b>Learn more at <a href="https://prowler.com">prowler.com</a></b>

</p>

<p align="center">

<a href="https://join.slack.com/t/prowler-workspace/shared_invite/zt-1hix76xsl-2uq222JIXrC7Q8It~9ZNog"><img width="30" height="30" alt="Prowler community on Slack" src="https://github.com/prowler-cloud/prowler/assets/3985464/3617e470-670c-47c9-9794-ce895ebdb627"></a>

<br>

<a href="https://join.slack.com/t/prowler-workspace/shared_invite/zt-1hix76xsl-2uq222JIXrC7Q8It~9ZNog">Join our Prowler community!</a>

</p>

<hr>

<p align="center">

<img src="https://user-images.githubusercontent.com/3985464/113734260-7ba06900-96fb-11eb-82bc-d4f68a1e2710.png" />

</p>

<p align="center">

<a href="https://join.slack.com/t/prowler-workspace/shared_invite/zt-1hix76xsl-2uq222JIXrC7Q8It~9ZNog"><img alt="Slack Shield" src="https://img.shields.io/badge/slack-prowler-brightgreen.svg?logo=slack"></a>

<a href="https://pypi.org/project/prowler/"><img alt="Python Version" src="https://img.shields.io/pypi/v/prowler.svg"></a>

<a href="https://pypi.python.org/pypi/prowler/"><img alt="Python Version" src="https://img.shields.io/pypi/pyversions/prowler.svg"></a>

<a href="https://pypi.org/project/prowler-cloud/"><img alt="Python Version" src="https://img.shields.io/pypi/v/prowler.svg"></a>

<a href="https://pypi.python.org/pypi/prowler-cloud/"><img alt="Python Version" src="https://img.shields.io/pypi/pyversions/prowler.svg"></a>

<a href="https://pypistats.org/packages/prowler"><img alt="PyPI Prowler Downloads" src="https://img.shields.io/pypi/dw/prowler.svg?label=prowler%20downloads"></a>

<a href="https://pypistats.org/packages/prowler-cloud"><img alt="PyPI Prowler-Cloud Downloads" src="https://img.shields.io/pypi/dw/prowler-cloud.svg?label=prowler-cloud%20downloads"></a>

<a href="https://formulae.brew.sh/formula/prowler#default"><img alt="Brew Prowler Downloads" src="https://img.shields.io/homebrew/installs/dm/prowler?label=brew%20downloads"></a>

<a href="https://hub.docker.com/r/toniblyx/prowler"><img alt="Docker Pulls" src="https://img.shields.io/docker/pulls/toniblyx/prowler"></a>

<a href="https://hub.docker.com/r/toniblyx/prowler"><img alt="Docker" src="https://img.shields.io/docker/cloud/build/toniblyx/prowler"></a>

<a href="https://hub.docker.com/r/toniblyx/prowler"><img alt="Docker" src="https://img.shields.io/docker/image-size/toniblyx/prowler"></a>

<a href="https://gallery.ecr.aws/prowler-cloud/prowler"><img width="120" height="19" alt="AWS ECR Gallery" src="https://user-images.githubusercontent.com/3985464/151531396-b6535a68-c907-44eb-95a1-a09508178616.png"></a>

<a href="https://codecov.io/gh/prowler-cloud/prowler"><img src="https://codecov.io/gh/prowler-cloud/prowler/graph/badge.svg?token=OflBGsdpDl"/></a>

</p>

<p align="center">

<a href="https://github.com/prowler-cloud/prowler"><img alt="Repo size" src="https://img.shields.io/github/repo-size/prowler-cloud/prowler"></a>

@@ -37,56 +31,39 @@

<a href="https://twitter.com/ToniBlyx"><img alt="Twitter" src="https://img.shields.io/twitter/follow/toniblyx?style=social"></a>

<a href="https://twitter.com/prowlercloud"><img alt="Twitter" src="https://img.shields.io/twitter/follow/prowlercloud?style=social"></a>

</p>

<hr>

# Description

**Prowler** is an Open Source security tool to perform AWS, Azure, Google Cloud and Kubernetes security best practices assessments, audits, incident response, continuous monitoring, hardening and forensics readiness, and also remediations! We have Prowler CLI (Command Line Interface) that we call Prowler Open Source and a service on top of it that we call <a href="https://prowler.com">Prowler SaaS</a>.

`Prowler` is an Open Source security tool to perform AWS and Azure security best practices assessments, audits, incident response, continuous monitoring, hardening and forensics readiness.

## Prowler CLI

It contains hundreds of controls covering CIS, PCI-DSS, ISO27001, GDPR, HIPAA, FFIEC, SOC2, AWS FTR, ENS and custom security frameworks.

```console
prowler <provider>
```

# 📖 Documentation

## Prowler Dashboard

The full documentation can now be found at [https://docs.prowler.cloud](https://docs.prowler.cloud)

## Looking for Prowler v2 documentation?

For Prowler v2 Documentation, please go to https://github.com/prowler-cloud/prowler/tree/2.12.1.

```console
prowler dashboard
```

It contains hundreds of controls covering CIS, NIST 800, NIST CSF, CISA, RBI, FedRAMP, PCI-DSS, GDPR, HIPAA, FFIEC, SOC2, GXP, AWS Well-Architected Framework Security Pillar, AWS Foundational Technical Review (FTR), ENS (Spanish National Security Scheme) and your custom security frameworks.

| Provider | Checks | Services | [Compliance Frameworks](https://docs.prowler.com/projects/prowler-open-source/en/latest/tutorials/compliance/) | [Categories](https://docs.prowler.com/projects/prowler-open-source/en/latest/tutorials/misc/#categories) |
|---|---|---|---|---|
| AWS | 304 | 61 -> `prowler aws --list-services` | 28 -> `prowler aws --list-compliance` | 6 -> `prowler aws --list-categories` |
| GCP | 75 | 11 -> `prowler gcp --list-services` | 1 -> `prowler gcp --list-compliance` | 2 -> `prowler gcp --list-categories` |
| Azure | 127 | 16 -> `prowler azure --list-services` | 2 -> `prowler azure --list-compliance` | 2 -> `prowler azure --list-categories` |
| Kubernetes | 83 | 7 -> `prowler kubernetes --list-services` | 1 -> `prowler kubernetes --list-compliance` | 7 -> `prowler kubernetes --list-categories` |

# 💻 Installation

# ⚙️ Install

## Pip package

Prowler is available as a project in [PyPI](https://pypi.org/project/prowler-cloud/), so it can be installed using pip with Python >= 3.9, < 3.13:

Prowler is available as a project in [PyPI](https://pypi.org/project/prowler-cloud/), so it can be installed using pip with Python >= 3.9:

```console
pip install prowler
prowler -v
```

More details at [https://docs.prowler.com](https://docs.prowler.com/projects/prowler-open-source/en/latest/)

More details at https://docs.prowler.cloud

## Containers

The available versions of Prowler are the following:

- `latest`: in sync with `master` branch (bear in mind that it is not a stable version)

- `v3-latest`: in sync with `v3` branch (bear in mind that it is not a stable version)

- `latest`: in sync with master branch (bear in mind that it is not a stable version)

- `<x.y.z>` (release): you can find the releases [here](https://github.com/prowler-cloud/prowler/releases), those are stable releases.

- `stable`: this tag always points to the latest release.

- `v3-stable`: this tag always points to the latest release for v3.

The container images are available here:

- [DockerHub](https://hub.docker.com/r/toniblyx/prowler/tags)

@@ -94,7 +71,7 @@ The container images are available here:

## From Github

Python >= 3.9, < 3.13 is required with pip and poetry:

Python >= 3.9 is required with pip and poetry:
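The new requirement adds an upper bound to the supported interpreter range (Python >= 3.9, < 3.13). Below is a minimal sketch for checking an interpreter before installing. It assumes that only `(major, minor)` matters, and `python_supported` is a hypothetical helper that is not part of Prowler:

```python
import sys

# Bounds from the README: lower bound inclusive, upper bound exclusive.
MIN_VERSION = (3, 9)
MAX_VERSION_EXCLUSIVE = (3, 13)


def python_supported(version_info=sys.version_info):
    """Return True if (major, minor) lies in the half-open range [3.9, 3.13)."""
    major_minor = tuple(version_info[:2])
    return MIN_VERSION <= major_minor < MAX_VERSION_EXCLUSIVE


# Report on the interpreter that is running this snippet.
print(f"Python {sys.version_info.major}.{sys.version_info.minor} supported: {python_supported()}")
```

Because only `(major, minor)` is compared, patch releases such as 3.12.7 are accepted, while 3.13.0 is rejected.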

```
git clone https://github.com/prowler-cloud/prowler
```

@@ -106,30 +83,167 @@ python prowler.py -v

|

||||

|

||||

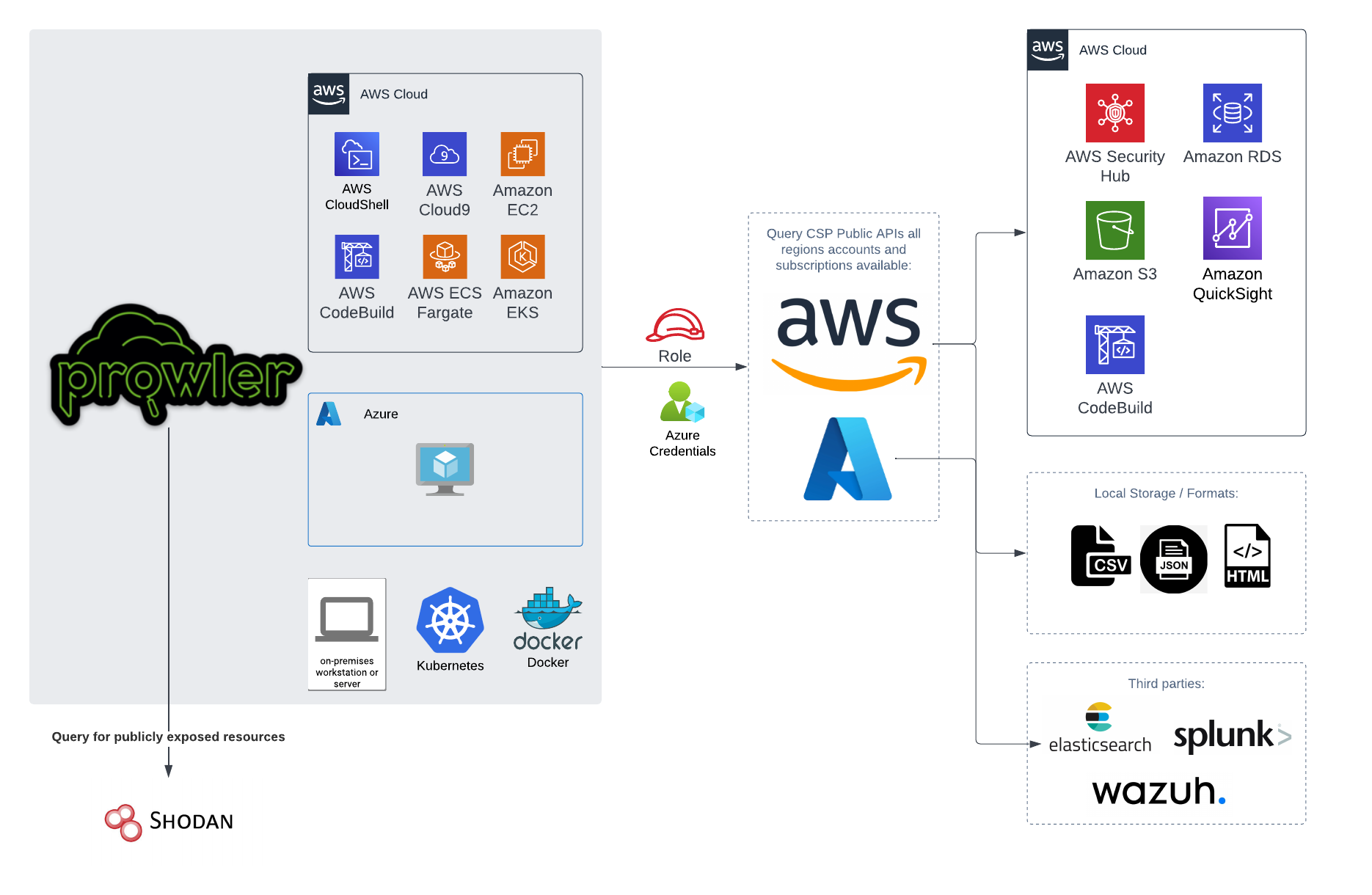

# 📐✏️ High level architecture

|

||||

|

||||

You can run Prowler from your workstation, a Kubernetes Job, a Google Compute Engine, an Azure VM, an EC2 instance, Fargate or any other container, CloudShell and many more.

|

||||

You can run Prowler from your workstation, an EC2 instance, Fargate or any other container, Codebuild, CloudShell and Cloud9.

|

||||

|

||||

|

||||

|

||||

|

||||

# Deprecations from v3

|

||||

# 📝 Requirements

|

||||

|

||||

## General

|

||||

- `Allowlist` now is called `Mutelist`.

|

||||

- The `--quiet` option has been deprecated, now use the `--status` flag to select the finding's status you want to get from PASS, FAIL or MANUAL.

|

||||

- All `INFO` finding's status has changed to `MANUAL`.

|

||||

- The CSV output format is common for all the providers.

|

||||

Prowler has been written in Python using the [AWS SDK (Boto3)](https://boto3.amazonaws.com/v1/documentation/api/latest/index.html#) and [Azure SDK](https://azure.github.io/azure-sdk-for-python/).

|

||||

## AWS

We have deprecated some of our output formats:

- The HTML output is replaced by the new Prowler Dashboard; run `prowler dashboard`.

- The native JSON is replaced by the JSON [OCSF](https://schema.ocsf.io/) v1.1.0, common for all the providers.

Since Prowler uses AWS Credentials under the hood, you can follow any authentication method as described [here](https://docs.aws.amazon.com/cli/latest/userguide/cli-configure-quickstart.html#cli-configure-quickstart-precedence).

Make sure you have properly configured your AWS-CLI with a valid Access Key and Region or declare AWS variables properly (or instance profile/role):

```console
aws configure
```

or

```console
export AWS_ACCESS_KEY_ID="ASXXXXXXX"
export AWS_SECRET_ACCESS_KEY="XXXXXXXXX"
export AWS_SESSION_TOKEN="XXXXXXXXX"
```

Those credentials must be associated with a user or role with proper permissions to run all checks. To make sure, add the following AWS managed policies to the user or role being used:

- arn:aws:iam::aws:policy/SecurityAudit

- arn:aws:iam::aws:policy/job-function/ViewOnlyAccess

> Moreover, some additional read-only permissions are needed for several checks; make sure you also attach the custom policy [prowler-additions-policy.json](https://github.com/prowler-cloud/prowler/blob/master/permissions/prowler-additions-policy.json) to the role you are using.

> If you want Prowler to send findings to [AWS Security Hub](https://aws.amazon.com/security-hub), make sure you also attach the custom policy [prowler-security-hub.json](https://github.com/prowler-cloud/prowler/blob/master/permissions/prowler-security-hub.json).

## Azure

Prowler for Azure supports the following authentication types:

- Service principal authentication by environment variables (Enterprise Application)

- Current stored az cli credentials

- Interactive browser authentication

- Managed identity authentication

### Service Principal authentication

To allow Prowler to assume the service principal identity and start the scan, configure the following environment variables:

```console
export AZURE_CLIENT_ID="XXXXXXXXX"
export AZURE_TENANT_ID="XXXXXXXXX"
export AZURE_CLIENT_SECRET="XXXXXXX"
```

If you try to execute Prowler with the `--sp-env-auth` flag and those variables are empty or not exported, the execution is going to fail.
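A scan started with `--sp-env-auth` fails when any of these three variables is empty or not exported. A pre-flight check can catch that before the scan starts. This is a minimal sketch: `missing_sp_env_vars` is a hypothetical helper, not part of Prowler. It only inspects the environment and does not validate the credentials against Azure:

```python
import os

# The three variables listed above for service principal authentication.
REQUIRED_SP_VARS = ("AZURE_CLIENT_ID", "AZURE_TENANT_ID", "AZURE_CLIENT_SECRET")


def missing_sp_env_vars(environ=None):
    """Return the required variable names that are unset or empty, in the order listed."""
    env = os.environ if environ is None else environ
    # An unset variable and an empty string are both treated as missing.
    return [name for name in REQUIRED_SP_VARS if not env.get(name)]


def main():
    missing = missing_sp_env_vars()
    if missing:
        print("Export these before running `prowler azure --sp-env-auth`: " + ", ".join(missing))
    else:
        print("Service principal environment variables are set.")


if __name__ == "__main__":
    main()
```

Passing a plain dictionary instead of reading `os.environ` keeps the helper easy to test in isolation.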

### AZ CLI / Browser / Managed Identity authentication

The other three cases do not need additional configuration: `--az-cli-auth` and `--managed-identity-auth` are automated options, while `--browser-auth` requires the user to authenticate through the default browser to start the scan.

### Permissions

To use each one, pass the corresponding flag to the execution. Prowler for Azure handles two types of permission scopes:

- **Azure Active Directory permissions**: Used to retrieve metadata from the identity assumed by Prowler and for future AAD checks (not mandatory to execute the tool)

- **Subscription scope permissions**: Required to launch the checks against your resources, mandatory to launch the tool.

#### Azure Active Directory scope

Azure Active Directory (AAD) permissions required by the tool are the following:

- `Directory.Read.All`

- `Policy.Read.All`

#### Subscriptions scope

Regarding the subscription scope, Prowler by default scans all the subscriptions that it is able to list, so the following RBAC built-in roles must be assigned per subscription to the entity that is going to be assumed by the tool:

- `Security Reader`

- `Reader`

# 💻 Basic Usage

|

||||

|

||||

To run prowler, you will need to specify the provider (e.g aws or azure):

|

||||

|

||||

```console

|

||||

prowler <provider>

|

||||

```

|

||||

|

||||

|

||||

|

||||

> Running the `prowler` command without options will use your environment variable credentials.

|

||||

|

||||

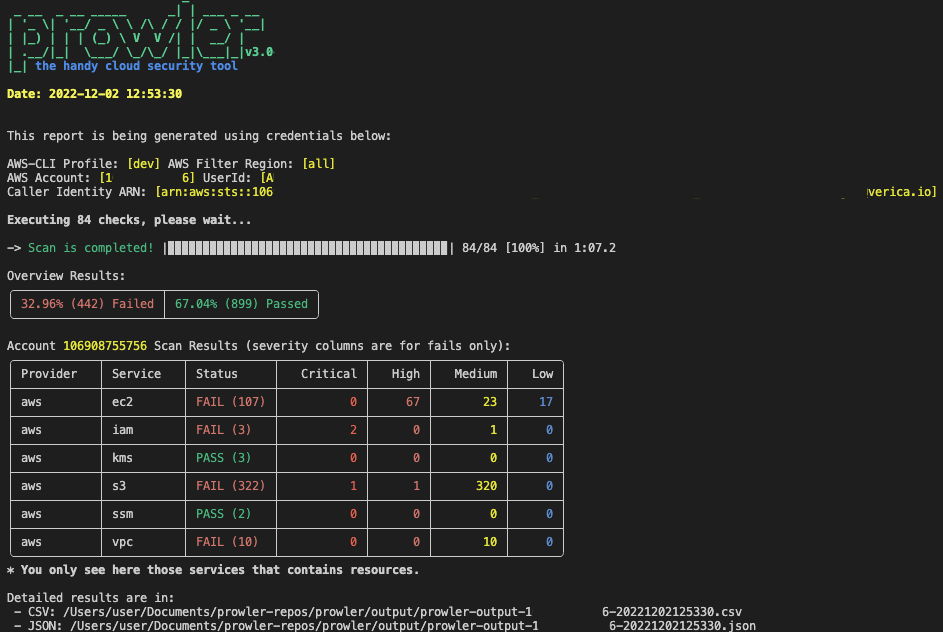

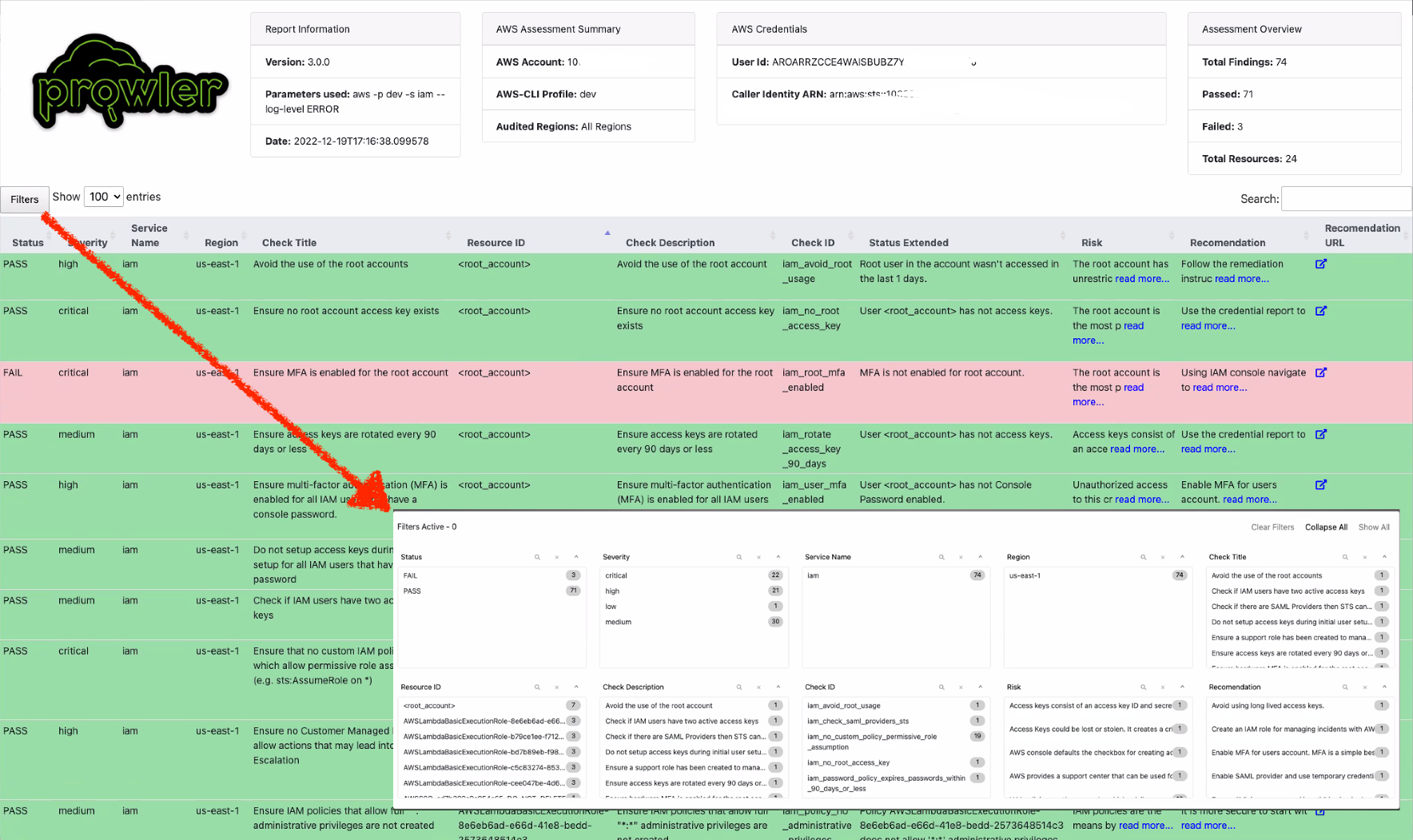

By default, prowler will generate a CSV, a JSON and a HTML report, however you can generate JSON-ASFF (only for AWS Security Hub) report with `-M` or `--output-modes`:

|

||||

|

||||

```console

|

||||

prowler <provider> -M csv json json-asff html

|

||||

```

|

||||

|

||||

The html report will be located in the `output` directory as the other files and it will look like:

|

||||

|

||||

|

||||

|

||||

You can use `-l`/`--list-checks` or `--list-services` to list all available checks or services within the provider.

|

||||

|

||||

```console

|

||||

prowler <provider> --list-checks

|

||||

prowler <provider> --list-services

|

||||

```

|

||||

|

||||

For executing specific checks or services you can use options `-c`/`--checks` or `-s`/`--services`:

|

||||

|

||||

```console

|

||||

prowler aws --checks s3_bucket_public_access

|

||||

prowler aws --services s3 ec2

|

||||

```

|

||||

|

||||

Also, checks and services can be excluded with options `-e`/`--excluded-checks` or `--excluded-services`:

|

||||

|

||||

```console

|

||||

prowler aws --excluded-checks s3_bucket_public_access

|

||||

prowler aws --excluded-services s3 ec2

|

||||

```

|

||||

|

||||

You can always use `-h`/`--help` to access to the usage information and all the possible options:

|

||||

|

||||

```console

|

||||

prowler -h

|

||||

```

|

||||

|

||||

## Checks Configurations

|

||||

Several Prowler's checks have user configurable variables that can be modified in a common **configuration file**.

|

||||

This file can be found in the following path:

|

||||

```

|

||||

prowler/config/config.yaml

|

||||

```

|

||||

|

||||

## AWS

- The AWS flag `--sts-endpoint-region` is deprecated, since Prowler now uses AWS STS regional tokens.

- To send only FAILS to AWS Security Hub, now use either `--send-sh-only-fails` or `--security-hub --status FAIL`.

Use a custom AWS profile with `-p`/`--profile` and/or the AWS regions you want to audit with `-f`/`--filter-region`:

# 📖 Documentation

```console
prowler aws --profile custom-profile -f us-east-1 eu-south-2
```

> By default, `prowler` will scan all AWS regions.

Install, Usage, Tutorials and Developer Guide are available at https://docs.prowler.com/

## Azure

With Azure you need to specify which auth method is going to be used:

```console
prowler azure [--sp-env-auth, --az-cli-auth, --browser-auth, --managed-identity-auth]
```

> By default, `prowler` will scan all Azure subscriptions.

# 🎉 New Features

- Python: we got rid of all bash and it is now all in Python.

- Faster: huge performance improvements (same account from 2.5 hours to 4 minutes).

- Developers and community: we have made it easier to contribute new checks and new compliance frameworks. We also included unit tests.

- Multi-cloud: in addition to AWS, we have added Azure, and we plan to include GCP and OCI soon; let us know if you want to contribute!

# 📃 License

@@ -14,7 +14,7 @@ As an **AWS Partner** and we have passed the [AWS Foundation Technical Review (F

If you would like to report a vulnerability or have a security concern regarding Prowler Open Source or ProwlerPro service, please submit the information by contacting help@prowler.pro.

The information you share with ProwlerPro as part of this process is kept confidential within ProwlerPro. We will only share this information with a third party if the vulnerability you report is found to affect a third-party product, in which case we will share this information with the third-party product's author or manufacturer. Otherwise, we will only share this information as permitted by you.

The information you share with Verica as part of this process is kept confidential within Verica and the Prowler team. We will only share this information with a third party if the vulnerability you report is found to affect a third-party product, in which case we will share this information with the third-party product's author or manufacturer. Otherwise, we will only share this information as permitted by you.

We will review the submitted report and assign it a tracking number. We will then respond to you, acknowledging receipt of the report, and outline the next steps in the process.

@@ -1,8 +1,17 @@

#!/bin/bash

sudo bash

adduser prowler

su prowler

pip install prowler

cd /tmp

prowler aws

# Install system dependencies

sudo yum -y install openssl-devel bzip2-devel libffi-devel gcc

# Upgrade to Python 3.9

cd /tmp && wget https://www.python.org/ftp/python/3.9.13/Python-3.9.13.tgz

tar zxf Python-3.9.13.tgz

cd Python-3.9.13/ || exit

./configure --enable-optimizations

sudo make altinstall

python3.9 --version

# Install Prowler

cd ~ || exit

python3.9 -m pip install prowler-cloud

prowler -v

# Run Prowler

prowler

@@ -1,24 +1,45 @@

# Build command

# docker build --platform=linux/amd64 --no-cache -t prowler:latest .

ARG PROWLER_VERSION=latest

FROM public.ecr.aws/amazonlinux/amazonlinux:2022

FROM toniblyx/prowler:${PROWLER_VERSION}

ARG PROWLERVER=2.9.0

ARG USERNAME=prowler

ARG USERID=34000

USER 0

# hadolint ignore=DL3018

RUN apk --no-cache add bash aws-cli jq

# Install Dependencies

RUN \

dnf update -y && \

dnf install -y bash file findutils git jq python3 python3-pip \

python3-setuptools python3-wheel shadow-utils tar unzip which && \

dnf remove -y awscli && \

dnf clean all && \

useradd -l -s /bin/sh -U -u ${USERID} ${USERNAME} && \

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip" && \

unzip awscliv2.zip && \

./aws/install && \

pip3 install --no-cache-dir --upgrade pip && \

pip3 install --no-cache-dir "git+https://github.com/ibm/detect-secrets.git@master#egg=detect-secrets" && \

rm -rf aws awscliv2.zip /var/cache/dnf

ARG MULTI_ACCOUNT_SECURITY_HUB_PATH=/home/prowler/multi-account-securityhub

# Place script and env vars

COPY .awsvariables run-prowler-securityhub.sh /

USER prowler

# Install prowler and change permissions

RUN \

curl -L "https://github.com/prowler-cloud/prowler/archive/refs/tags/${PROWLERVER}.tar.gz" -o "prowler.tar.gz" && \

tar xvzf prowler.tar.gz && \

rm -f prowler.tar.gz && \

mv prowler-${PROWLERVER} prowler && \

chown ${USERNAME}:${USERNAME} /run-prowler-securityhub.sh && \

chmod 500 /run-prowler-securityhub.sh && \

chown ${USERNAME}:${USERNAME} /.awsvariables && \

chmod 400 /.awsvariables && \

chown ${USERNAME}:${USERNAME} -R /prowler && \

chmod +x /prowler/prowler

# Move script and environment variables

RUN mkdir "${MULTI_ACCOUNT_SECURITY_HUB_PATH}"

COPY --chown=prowler:prowler .awsvariables run-prowler-securityhub.sh "${MULTI_ACCOUNT_SECURITY_HUB_PATH}"/

RUN chmod 500 "${MULTI_ACCOUNT_SECURITY_HUB_PATH}"/run-prowler-securityhub.sh && \

chmod 400 "${MULTI_ACCOUNT_SECURITY_HUB_PATH}"/.awsvariables

# Drop to user

USER ${USERNAME}

WORKDIR ${MULTI_ACCOUNT_SECURITY_HUB_PATH}

ENTRYPOINT ["./run-prowler-securityhub.sh"]

# Run script

ENTRYPOINT ["/run-prowler-securityhub.sh"]

51

contrib/multi-account-securityhub/run-prowler-securityhub.sh

Executable file → Normal file

@@ -1,17 +1,20 @@

#!/bin/bash

# Run Prowler against All AWS Accounts in an AWS Organization

# Change Directory (the rest of the script assumes you're in the root directory)

cd / || exit

# Show Prowler Version

prowler -v

./prowler/prowler -V

# Source .awsvariables

# shellcheck disable=SC1091

source .awsvariables

# Get Values from Environment Variables

echo "ROLE: ${ROLE}"

echo "PARALLEL_ACCOUNTS: ${PARALLEL_ACCOUNTS}"