Compare commits

406 Commits

| SHA1 |
|---|
| fad5a1937c |
| 635c257502 |
| 58a38c08d7 |
| 8fbee7737b |
| e84f5f184e |
| 0bd26b19d7 |
| 64f82d5d51 |
| f63ff994ce |
| a10ee43271 |
| 54ed29e08d |
| cc097e7a3f |
| 5de92ada43 |
| 0c546211cf |
| 4dc5a3a67c |
| c51b226ceb |
| 0a5ca6cf74 |
| 96957219e4 |
| 32b7620db3 |
| 347f65e089 |
| 16628a427e |
| ed16034a25 |
| 0c5f144e41 |
| acc7d6e7dc |
| 84b4139052 |
| 9943643958 |
| 9ceaefb663 |
| ec03ea5bc1 |
| 5855633c1f |
| a53bc2bc2e |
| 88445820ed |
| 044ed3ae98 |
| 6f48012234 |
| d344318dd4 |
| 6273dd3d83 |
| 0f3f3cbffd |
| 3244123b21 |
| cba2ee3622 |
| 25ed925df5 |
| 8c5bd60bab |
| c5510556a7 |
| bbcfca84ef |
| 1260e94c2a |
| 8a02574303 |
| c930f08348 |
| 5204acb5d0 |
| 784aaa98c9 |
| 745e2494bc |
| c00792519d |
| 142fe5a12c |
| 5b127f232e |
| c22bf01003 |
| 05e4911d6f |
| 9b551ef0ba |
| 56a8bb2349 |
| 8503c6a64d |
| 820f18da4d |
| 51a2432ebf |
| 6639534e97 |
| 0621577c7d |
| 26a507e3db |
| 244b540fe0 |
| 030ca4c173 |
| 88a2810f29 |
| 9164ee363a |
| 4cd47fdcc5 |
| 708852a3cb |
| 4a93bdf3ea |
| 22e7d2a811 |
| 93eca1dff2 |
| 9afe7408cd |
| 5dc2347a25 |
| e3a0124b10 |
| 16af89c281 |
| 621e4258c8 |
| ac6272e739 |
| 6e84f517a9 |
| fdbdb3ad86 |
| 7adcf5ca46 |
| fe6716cf76 |
| 3c2096db68 |
| 58cad1a6b3 |
| 662e67ff16 |
| 8d577b872f |
| b55290f3cb |
| e8d3eb7393 |
| 47fa16e35f |
| a87f769b85 |
| 8e63fa4594 |
| 63501a0d59 |
| 828fb37ca8 |
| 40f513d3b6 |
| f0b8b66a75 |
| d51cdc068b |
| f8b382e480 |
| 1995f43b67 |
| 69e0392a8b |
| 1f6319442e |
| 559c4c0c2c |
| feeb5b58d9 |
| 7a00f79a56 |
| 10d744704a |
| eee35f9cc3 |
| b3656761eb |
| 7b5fe34316 |
| 4536780a19 |
| 05d866e6b3 |
| 0d138cf473 |
| dbe539ac80 |
| 665a39d179 |
| 5fd5d8c8c5 |
| 2832b4564c |
| d4369a64ee |
| 81fa1630b7 |
| a1c4b35205 |
| 5e567f3e37 |
| c4757684c1 |
| a55a6bf94b |
| fa1792eb77 |
| 93a8f6e759 |
| 4a614855d4 |
| 8bdd47f912 |
| f9e82abadc |
| 428fda81e2 |
| 29c9ad602d |
| 44458e2a97 |
| 861fb1f54b |
| 02534f4d55 |
| 5532cb95a2 |
| 9176e43fc9 |
| cb190f54fc |
| 4be2539bc2 |
| 291e2adffa |
| fa2ec63f45 |
| 946c943457 |
| 0e50766d6e |
| 58a1610ae0 |
| 06dc21168a |
| 305b67fbed |
| 4da6d152c3 |
| 25630f1ef5 |
| 9b01e3f1c9 |
| 99450400eb |
| 2f8a8988d7 |
| 9104d2e89e |
| e75022763c |
| f0f3fb337d |
| f7f01a34c2 |
| f9f9ff0cb8 |
| 522ba05ba8 |
| f4f4093466 |
| 2e16ab0c2c |
| 6f02606fb7 |
| df40142b51 |
| cc290d488b |
| 64328218fc |
| 8d1356a085 |
| 4f39dd0f73 |
| 54ffc8ae45 |
| 78ab1944bd |
| 434cf94657 |
| dcb893e230 |
| ce4fadc378 |
| 5683d1b1bd |
| 0eb88d0c10 |
| eb1367e54d |
| 33a4786206 |
| 8c6606ad95 |
| cde9519a76 |
| 7b2e0d79cb |
| 5b0da8e92a |
| 0126d2f77c |
| 0b436014c9 |
| 2cb7f223ed |
| eca551ed98 |
| 608fd92861 |
| e37d8fe45f |
| 4cce91ec97 |
| 72fdde35dc |
| d425187778 |
| e419aa1f1a |
| 5506547f7f |
| 568ed72b3e |
| e8cc0e6684 |
| 4331f69395 |
| 7cc67ae7cb |
| 244b3438fc |
| 1a741f7ca0 |
| 1447800e2b |
| f968fe7512 |
| 0a2349fad7 |
| 941b8cbc1e |
| 3b7b16acfd |
| fbc7bb68fc |
| 0d16880596 |
| 3b5218128f |
| cb731bf1db |
| 7c4d6eb02d |
| c14e7fb17a |
| fe57811bc5 |
| e073b48f7d |
| a9df609593 |
| 6c3db9646e |
| ff9c4c717e |
| 182374b46f |
| 0871cda526 |
| 1b47cba37a |
| e5bef36905 |
| 706d723703 |
| 51eacbfac5 |
| 5c2a411982 |
| 08d65cbc41 |
| 9d2bf429c1 |
| d34f863bd4 |
| b4abf1c2c7 |
| 68baaf589e |
| be74e41d84 |
| 848122b0ec |
| 0edcb7c0d9 |
| cc58e06b5e |
| 0d6ca606ea |
| 75ee93789f |
| 05daddafbf |
| 7bbce6725d |
| 789b211586 |
| 826a043748 |
| 6761048298 |
| 738fc9acad |
| 43c0540de7 |
| 2d1c3d8121 |
| f48a5c650d |
| 66c18eddb8 |
| fdd2ee6365 |
| c207f60ad8 |
| 0eaa95c8c0 |
| df2fca5935 |
| dcaf5d9c7d |
| 0112969a97 |
| 3ec0f3d69c |
| 5555d300a1 |
| 8155ef4b60 |
| a12402f6c8 |
| cf28b814cb |
| b05f67db19 |
| 260f4659d5 |
| 9e700f298c |
| 56510734c4 |
| 3938a4d14e |
| fa3b9eeeaf |
| eb9d6fa25c |
| b53307c1c2 |
| c3fc708a66 |
| b34ffbe6d0 |
| f364315e48 |
| 3ddb5a13a5 |
| a24cc399a4 |
| 305f4b2688 |
| 9823171d65 |
| 4761bd8fda |
| 9c22698723 |
| e3892bbcc6 |
| 629b156f52 |
| c45dd47d34 |
| ef8831f784 |
| c5a42cf5de |
| 90ebbfc20f |
| 17cd0dc91d |
| fa1f42af59 |
| f45ea1ab53 |
| 0dde3fe483 |
| 277dc7dd09 |
| 3215d0b856 |
| 0167d5efcd |
| b48ac808a6 |
| 616524775c |
| 5832849b11 |
| 467c5d01e9 |
| 24711a2f39 |
| 24e8286f35 |
| e8a1378ad0 |
| 76bb418ea9 |
| cd8770a3e3 |
| da834c0935 |
| 024ffb1117 |
| eed7ab9793 |
| 032feb343f |
| eabccba3fa |
| d86d656316 |
| fa73c91b0b |
| 2eee50832d |
| b40736918b |
| ffb1a2e30f |
| d6c3c0c6c1 |
| ee251721ac |
| fdbb9195d5 |
| c68b08d9af |
| 3653bbfca0 |
| 05c7cc7277 |
| 5670bf099b |
| 0c324b0f09 |
| 968557e38e |
| 882cdebacb |
| 07753e1774 |
| 5b984507fc |
| 27df481967 |
| 0943031f23 |
| 2d95168de0 |
| 97cae8f92c |
| eb213bac92 |
| 8187788b2c |
| c80e08abce |
| 42fd851e5c |
| 70e4ebccab |
| 140f87c741 |
| b0d756123e |
| 6188c92916 |
| 34c6f96728 |
| 50fd047c0b |
| 5bcc05b536 |
| ce7d6c8dd5 |
| d87a1e28b4 |
| 227306c572 |
| 45c2691f89 |
| d0c81245b8 |
| e494afb1aa |
| ecc3c1cf3b |
| 228b16416a |
| 17eb74842a |
| c01ff74c73 |
| f88613b26d |
| 3464f4241f |
| 849b703828 |
| 4b935a40b6 |
| 5873a23ccb |
| eae2786825 |
| 6407386de5 |
| 3fe950723f |
| 52bf6acd46 |
| 9590e7d7e0 |
| 7a08140a2d |
| d1491cfbd1 |
| 695b80549d |
| 11c60a637f |
| 844ad70bb9 |
| 5ac7cde577 |
| ce3ef0550f |
| 813f3e7d42 |
| d03f97af6b |
| 019ab0286d |
| c6647b4706 |
| f913536d88 |
| 640d1bd176 |
| 66baccf528 |
| 6e6dacbace |
| cdbb10fb26 |
| c34ba3918c |
| fa228c876c |
| 2f4d0af7d7 |
| 2d3e5235a9 |
| 8e91ccaa54 |
| 6955658b36 |
| dbb44401fd |
| b42ed70c84 |
| a28276d823 |
| fa4b27dd0e |
| 0be44d5c49 |
| 2514596276 |
| 7008d2a953 |
| 2539fedfc4 |
| b453df7591 |
| 9e5d5edcba |
| 2d5de6ff99 |
| 259e9f1c17 |
| daeb53009e |
| f12d271ca5 |
| 965185ca3b |
| 9c484f6a78 |
| de18c3c722 |
| 9be753b281 |
| d6ae122de1 |
| c6b90044f2 |
| 14898b6422 |
| 26294b0759 |
| 6da45b5c2b |
| 674332fddd |
| ab8942d05a |
| 29790b8a5c |
| 4a4c26ffeb |
| 25c9bc07b2 |
| d22d4c4c83 |
| d88640fd20 |
| 57a2fca3a4 |
| f796688c84 |
| d6bbf8b7cc |
| 37ec460f64 |
| 004b9c95e4 |
| 86e27b465a |
| 5e9afddc3a |
| de281535b1 |
| 9df7def14e |
| 5b9db9795d |
| 7d2ce7e6ab |
| 3e807af2b2 |
| 4c64dc7885 |
| e7a7874b34 |
| c78a47788b |
| 922698c5d9 |

`.github/CODEOWNERS` (vendored, 2 changes)

```diff
@@ -1 +1 @@
-* @prowler-cloud/prowler-team
+* @prowler-cloud/prowler-oss
```

`.github/ISSUE_TEMPLATE/bug_report.md` (vendored, 52 changes)

```diff
@@ -1,52 +0,0 @@
----
-name: Bug report
-about: Create a report to help us improve
-title: "[Bug]: "
-labels: bug, status/needs-triage
-assignees: ''
-
----
-
-<!--
-Please use this template to create your bug report. By providing as much info as possible you help us understand the issue, reproduce it and resolve it for you quicker. Therefore, take a couple of extra minutes to make sure you have provided all info needed.
-
-PROTIP: record your screen and attach it as a gif to showcase the issue.
-
-- How to record and attach gif: https://bit.ly/2Mi8T6K
--->
-
-**What happened?**
-A clear and concise description of what the bug is or what is not working as expected
-
-
-**How to reproduce it**
-Steps to reproduce the behavior:
-1. What command are you running?
-2. Cloud provider you are launching
-3. Environment you have like single account, multi-account, organizations, multi or single subsctiption, etc.
-4. See error
-
-
-**Expected behavior**
-A clear and concise description of what you expected to happen.
-
-
-**Screenshots or Logs**
-If applicable, add screenshots to help explain your problem.
-Also, you can add logs (anonymize them first!). Here a command that may help to share a log
-`prowler <your arguments> --log-level DEBUG --log-file $(date +%F)_debug.log` then attach here the log file.
-
-
-**From where are you running Prowler?**
-Please, complete the following information:
-- Resource: (e.g. EC2 instance, Fargate task, Docker container manually, EKS, Cloud9, CodeBuild, workstation, etc.)
-- OS: [e.g. Amazon Linux 2, Mac, Alpine, Windows, etc. ]
-- Prowler Version [`prowler --version`]:
-- Python version [`python --version`]:
-- Pip version [`pip --version`]:
-- Installation method (Are you running it from pip package or cloning the github repo?):
-- Others:
-
-
-**Additional context**
-Add any other context about the problem here.
```

`.github/ISSUE_TEMPLATE/bug_report.yml` (vendored, Normal file, 97 changes)

```diff
@@ -0,0 +1,97 @@
+name: 🐞 Bug Report
+description: Create a report to help us improve
+title: "[Bug]: "
+labels: ["bug", "status/needs-triage"]
+
+body:
+  - type: textarea
+    id: reproduce
+    attributes:
+      label: Steps to Reproduce
+      description: Steps to reproduce the behavior
+      placeholder: |-
+        1. What command are you running?
+        2. Cloud provider you are launching
+        3. Environment you have, like single account, multi-account, organizations, multi or single subscription, etc.
+        4. See error
+    validations:
+      required: true
+  - type: textarea
+    id: expected
+    attributes:
+      label: Expected behavior
+      description: A clear and concise description of what you expected to happen.
+    validations:
+      required: true
+  - type: textarea
+    id: actual
+    attributes:
+      label: Actual Result with Screenshots or Logs
+      description: If applicable, add screenshots to help explain your problem. Also, you can add logs (anonymize them first!). Here a command that may help to share a log `prowler <your arguments> --log-level DEBUG --log-file $(date +%F)_debug.log` then attach here the log file.
+    validations:
+      required: true
+  - type: dropdown
+    id: type
+    attributes:
+      label: How did you install Prowler?
+      options:
+        - Cloning the repository from github.com (git clone)
+        - From pip package (pip install prowler)
+        - From brew (brew install prowler)
+        - Docker (docker pull toniblyx/prowler)
+    validations:
+      required: true
+  - type: textarea
+    id: environment
+    attributes:
+      label: Environment Resource
+      description: From where are you running Prowler?
+      placeholder: |-
+        1. EC2 instance
+        2. Fargate task
+        3. Docker container locally
+        4. EKS
+        5. Cloud9
+        6. CodeBuild
+        7. Workstation
+        8. Other(please specify)
+    validations:
+      required: true
+  - type: textarea
+    id: os
+    attributes:
+      label: OS used
+      description: Which OS are you using?
+      placeholder: |-
+        1. Amazon Linux 2
+        2. MacOS
+        3. Alpine Linux
+        4. Windows
+        5. Other(please specify)
+    validations:
+      required: true
+  - type: input
+    id: prowler-version
+    attributes:
+      label: Prowler version
+      description: Which Prowler version are you using?
+      placeholder: |-
+        prowler --version
+    validations:
+      required: true
+  - type: input
+    id: pip-version
+    attributes:
+      label: Pip version
+      description: Which pip version are you using?
+      placeholder: |-
+        pip --version
+    validations:
+      required: true
+  - type: textarea
+    id: additional
+    attributes:
+      description: Additional context
+      label: Context
+    validations:
+      required: false
```
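The new YAML issue forms replace the Markdown templates and must follow GitHub's issue-form schema. The Python sketch below gives a rough idea of what that schema checks. It works on a form that has already been parsed into a dict; the YAML loading step is not included. It covers only a subset of the documented rules: the top-level `name`, `description` and `body` keys, the element types `markdown`, `textarea`, `input`, `dropdown` and `checkboxes`, unique `id`s, and a `label` on every non-markdown element. The helper `check_issue_form` is hypothetical and does not replace GitHub's own validation.

```python
"""Minimal sanity checks for a GitHub issue form that is already parsed into a dict.

Hypothetical helper: it covers only a subset of GitHub's issue-form schema.
"""

ALLOWED_TYPES = {"markdown", "textarea", "input", "dropdown", "checkboxes"}
REQUIRED_TOP_LEVEL = ("name", "description", "body")


def check_issue_form(form):
    """Return a list of human-readable problems; an empty list means none were found."""
    problems = []
    for key in REQUIRED_TOP_LEVEL:
        if key not in form:
            problems.append(f"missing top-level key: {key}")

    seen_ids = set()
    for index, element in enumerate(form.get("body", [])):
        element_type = element.get("type")
        if element_type not in ALLOWED_TYPES:
            problems.append(f"body[{index}]: unsupported type {element_type!r}")

        element_id = element.get("id")
        if element_id is not None:
            if element_id in seen_ids:
                problems.append(f"body[{index}]: duplicate id {element_id!r}")
            seen_ids.add(element_id)

        # Every element except free-form markdown needs a visible label.
        if element_type != "markdown" and "label" not in element.get("attributes", {}):
            problems.append(f"body[{index}]: missing attributes.label")
    return problems


# A trimmed-down copy of a few elements from the bug report form.
bug_form = {
    "name": "🐞 Bug Report",
    "description": "Create a report to help us improve",
    "title": "[Bug]: ",
    "labels": ["bug", "status/needs-triage"],
    "body": [
        {"type": "textarea", "id": "reproduce",
         "attributes": {"label": "Steps to Reproduce"},
         "validations": {"required": True}},
        {"type": "dropdown", "id": "type",
         "attributes": {"label": "How did you install Prowler?",
                        "options": ["From pip package (pip install prowler)"]},
         "validations": {"required": True}},
        {"type": "input", "id": "prowler-version",
         "attributes": {"label": "Prowler version"}},
    ],
}

print(check_issue_form(bug_form))  # []
```

A check like this could run in CI before a template change is merged. GitHub itself reports schema errors only when someone opens the form in the repository.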

`.github/ISSUE_TEMPLATE/feature-request.yml` (vendored, Normal file, 36 changes)

```diff
@@ -0,0 +1,36 @@
+name: 💡 Feature Request
+description: Suggest an idea for this project
+labels: ["enhancement", "status/needs-triage"]
+
+
+body:
+  - type: textarea
+    id: Problem
+    attributes:
+      label: New feature motivation
+      description: Is your feature request related to a problem? Please describe
+      placeholder: |-
+        1. A clear and concise description of what the problem is. Ex. I'm always frustrated when
+    validations:
+      required: true
+  - type: textarea
+    id: Solution
+    attributes:
+      label: Solution Proposed
+      description: A clear and concise description of what you want to happen.
+    validations:
+      required: true
+  - type: textarea
+    id: Alternatives
+    attributes:
+      label: Describe alternatives you've considered
+      description: A clear and concise description of any alternative solutions or features you've considered.
+    validations:
+      required: true
+  - type: textarea
+    id: Context
+    attributes:
+      label: Additional context
+      description: Add any other context or screenshots about the feature request here.
+    validations:
+      required: false
```

`.github/ISSUE_TEMPLATE/feature_request.md` (vendored, 20 changes)

```diff
@@ -1,20 +0,0 @@
----
-name: Feature request
-about: Suggest an idea for this project
-title: ''
-labels: enhancement, status/needs-triage
-assignees: ''
-
----
-
-**Is your feature request related to a problem? Please describe.**
-A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]
-
-**Describe the solution you'd like**
-A clear and concise description of what you want to happen.
-
-**Describe alternatives you've considered**
-A clear and concise description of any alternative solutions or features you've considered.
-
-**Additional context**
-Add any other context or screenshots about the feature request here.
```

`.github/dependabot.yml` (vendored, 2 changes)

```diff
@@ -8,7 +8,7 @@ updates:
   - package-ecosystem: "pip" # See documentation for possible values
     directory: "/" # Location of package manifests
     schedule:
-      interval: "daily"
+      interval: "weekly"
     target-branch: master
     labels:
       - "dependencies"
```

`.github/workflows/build-lint-push-containers.yml` (vendored, 180 changes)

```
@@ -10,113 +10,53 @@ on:
- "docs/**"

release:
types: [published, edited]
types: [published]

env:
AWS_REGION_STG: eu-west-1
AWS_REGION_PLATFORM: eu-west-1
AWS_REGION_PRO: us-east-1
AWS_REGION: us-east-1
IMAGE_NAME: prowler
LATEST_TAG: latest
STABLE_TAG: stable
TEMPORARY_TAG: temporary
DOCKERFILE_PATH: ./Dockerfile
PYTHON_VERSION: 3.9

jobs:
# Lint Dockerfile using Hadolint
# dockerfile-linter:
# runs-on: ubuntu-latest
# steps:
# -
# name: Checkout
# uses: actions/checkout@v3
# -
# name: Install Hadolint
# run: |
# VERSION=$(curl --silent "https://api.github.com/repos/hadolint/hadolint/releases/latest" | \
# grep '"tag_name":' | \
# sed -E 's/.*"v([^"]+)".*/\1/' \
# ) && curl -L -o /tmp/hadolint https://github.com/hadolint/hadolint/releases/download/v${VERSION}/hadolint-Linux-x86_64 \
# && chmod +x /tmp/hadolint
# -
# name: Run Hadolint
# run: |
# /tmp/hadolint util/Dockerfile

# Build Prowler OSS container
container-build:
container-build-push:
# needs: dockerfile-linter
runs-on: ubuntu-latest
env:
POETRY_VIRTUALENVS_CREATE: "false"
steps:
- name: Checkout
uses: actions/checkout@v3
- name: Set up Docker Buildx
uses: docker/setup-buildx-action@v2
- name: Build
uses: docker/build-push-action@v2
with:
# Without pushing to registries
push: false
tags: ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }}
file: ${{ env.DOCKERFILE_PATH }}
outputs: type=docker,dest=/tmp/${{ env.IMAGE_NAME }}.tar
- name: Share image between jobs
uses: actions/upload-artifact@v2
with:
name: ${{ env.IMAGE_NAME }}.tar
path: /tmp/${{ env.IMAGE_NAME }}.tar

# Lint Prowler OSS container using Dockle
# container-linter:
# needs: container-build
# runs-on: ubuntu-latest
# steps:
# -
# name: Get container image from shared
# uses: actions/download-artifact@v2
# with:
# name: ${{ env.IMAGE_NAME }}.tar
# path: /tmp
# -
# name: Load Docker image
# run: |
# docker load --input /tmp/${{ env.IMAGE_NAME }}.tar
# docker image ls -a
# -
# name: Install Dockle
# run: |
# VERSION=$(curl --silent "https://api.github.com/repos/goodwithtech/dockle/releases/latest" | \
# grep '"tag_name":' | \
# sed -E 's/.*"v([^"]+)".*/\1/' \
# ) && curl -L -o dockle.deb https://github.com/goodwithtech/dockle/releases/download/v${VERSION}/dockle_${VERSION}_Linux-64bit.deb \
# && sudo dpkg -i dockle.deb && rm dockle.deb
# -
# name: Run Dockle
# run: dockle ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }}

# Push Prowler OSS container to registries
container-push:
# needs: container-linter
needs: container-build
runs-on: ubuntu-latest
permissions:
id-token: write
contents: read # This is required for actions/checkout
steps:
- name: Get container image from shared
uses: actions/download-artifact@v2
- name: Setup python (release)
if: github.event_name == 'release'
uses: actions/setup-python@v2
with:
name: ${{ env.IMAGE_NAME }}.tar
path: /tmp
- name: Load Docker image
python-version: ${{ env.PYTHON_VERSION }}

- name: Install dependencies (release)
if: github.event_name == 'release'
run: |
docker load --input /tmp/${{ env.IMAGE_NAME }}.tar
docker image ls -a
pipx install poetry
pipx inject poetry poetry-bumpversion

- name: Update Prowler version (release)
if: github.event_name == 'release'
run: |
poetry version ${{ github.event.release.tag_name }}

- name: Login to DockerHub
uses: docker/login-action@v2
with:
username: ${{ secrets.DOCKERHUB_USERNAME }}
password: ${{ secrets.DOCKERHUB_TOKEN }}

- name: Login to Public ECR
uses: docker/login-action@v2
with:
@@ -124,55 +64,53 @@ jobs:
username: ${{ secrets.PUBLIC_ECR_AWS_ACCESS_KEY_ID }}
password: ${{ secrets.PUBLIC_ECR_AWS_SECRET_ACCESS_KEY }}
env:
AWS_REGION: ${{ env.AWS_REGION_PRO }}
AWS_REGION: ${{ env.AWS_REGION }}

- name: Tag (latest)
- name: Set up Docker Buildx
uses: docker/setup-buildx-action@v2

- name: Build and push container image (latest)
if: github.event_name == 'push'
run: |
docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.LATEST_TAG }}
docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.LATEST_TAG }}

- # Push to master branch - push "latest" tag
name: Push (latest)
if: github.event_name == 'push'
run: |
docker push ${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.LATEST_TAG }}
docker push ${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.LATEST_TAG }}

- # Tag the new release (stable and release tag)
name: Tag (release)
if: github.event_name == 'release'
run: |
docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ github.event.release.tag_name }}
docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ github.event.release.tag_name }}

docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.STABLE_TAG }}
docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.STABLE_TAG }}

- # Push the new release (stable and release tag)
name: Push (release)
if: github.event_name == 'release'
run: |
docker push ${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ github.event.release.tag_name }}
docker push ${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ github.event.release.tag_name }}

docker push ${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.STABLE_TAG }}
docker push ${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.STABLE_TAG }}

- name: Delete artifacts
if: always()
uses: geekyeggo/delete-artifact@v1
uses: docker/build-push-action@v2
with:
name: ${{ env.IMAGE_NAME }}.tar
push: true
tags: |
${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.LATEST_TAG }}
${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.LATEST_TAG }}
file: ${{ env.DOCKERFILE_PATH }}
cache-from: type=gha
cache-to: type=gha,mode=max

- name: Build and push container image (release)
if: github.event_name == 'release'
uses: docker/build-push-action@v2
with:
# Use local context to get changes
# https://github.com/docker/build-push-action#path-context
context: .
push: true
tags: |
${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ github.event.release.tag_name }}
${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.STABLE_TAG }}
${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ github.event.release.tag_name }}
${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.STABLE_TAG }}
file: ${{ env.DOCKERFILE_PATH }}
cache-from: type=gha
cache-to: type=gha,mode=max

dispatch-action:
needs: container-push
needs: container-build-push
runs-on: ubuntu-latest
steps:
- name: Get latest commit info
if: github.event_name == 'push'
run: |
LATEST_COMMIT_HASH=$(echo ${{ github.event.after }} | cut -b -7)
echo "LATEST_COMMIT_HASH=${LATEST_COMMIT_HASH}" >> $GITHUB_ENV
- name: Dispatch event for latest
if: github.event_name == 'push'
run: |
curl https://api.github.com/repos/${{ secrets.DISPATCH_OWNER }}/${{ secrets.DISPATCH_REPO }}/dispatches -H "Accept: application/vnd.github+json" -H "Authorization: Bearer ${{ secrets.ACCESS_TOKEN }}" -H "X-GitHub-Api-Version: 2022-11-28" --data '{"event_type":"dispatch","client_payload":{"version":"latest"}'
curl https://api.github.com/repos/${{ secrets.DISPATCH_OWNER }}/${{ secrets.DISPATCH_REPO }}/dispatches -H "Accept: application/vnd.github+json" -H "Authorization: Bearer ${{ secrets.ACCESS_TOKEN }}" -H "X-GitHub-Api-Version: 2022-11-28" --data '{"event_type":"dispatch","client_payload":{"version":"latest", "tag": "${{ env.LATEST_COMMIT_HASH }}"}}'
- name: Dispatch event for release
if: github.event_name == 'release'
run: |
```
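The reworked `container-build-push` job picks image tags from the event type. A `push` publishes `latest` to both registries. A `release` publishes the release tag plus `stable` to each one. The `dispatch-action` hunk also shows an older dispatch call whose hand-written JSON body has unbalanced braces, next to a newer one that closes them and adds the 7-character commit tag. The Python sketch below models both pieces of logic. The helper names, registry strings, release tag and commit SHA are placeholders, not values from the workflow. The release dispatch payload is left out because that step is cut off in the diff.

```python
import json


def image_tags(event_name, registries, image, release_tag=None):
    """Mirror the `tags:` lists of the two build-and-push steps (hypothetical helper)."""
    if event_name == "push":
        return [f"{registry}/{image}:latest" for registry in registries]
    if event_name == "release":
        tags = []
        for registry in registries:
            tags.append(f"{registry}/{image}:{release_tag}")
            tags.append(f"{registry}/{image}:stable")
        return tags
    return []  # other events publish nothing in this sketch


def latest_dispatch_body(commit_sha):
    """Build the repository_dispatch body for a push; like `cut -b -7`, keep 7 characters."""
    payload = {
        "event_type": "dispatch",
        "client_payload": {"version": "latest", "tag": commit_sha[:7]},
    }
    # json.dumps always emits balanced braces, unlike a hand-typed string.
    return json.dumps(payload)


registries = ["dockerhub-repo", "public-ecr-repo"]  # placeholders for the repository secrets
print(image_tags("release", registries, "prowler", "3.2.0"))
print(latest_dispatch_body("0123456789abcdef"))

# The older body from the diff: the outer object is never closed.
old_body = '{"event_type":"dispatch","client_payload":{"version":"latest"}'
try:
    json.loads(old_body)
except json.JSONDecodeError as err:
    print("old body is not valid JSON:", err.msg)
```

Serialising the payload in code instead of quoting it inline in shell is one way to avoid this class of bug. In the workflow itself the equivalent would be something like `jq`, which is not part of this diff.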

`.github/workflows/pull-request.yml` (vendored, 28 changes)

```
@@ -17,42 +17,48 @@ jobs:

steps:
- uses: actions/checkout@v3
- name: Install poetry
run: |
python -m pip install --upgrade pip
pipx install poetry
- name: Set up Python ${{ matrix.python-version }}
uses: actions/setup-python@v4
with:
python-version: ${{ matrix.python-version }}
cache: 'poetry'
- name: Install dependencies
run: |
python -m pip install --upgrade pip
pip install pipenv
pipenv install --dev
pipenv run pip list
poetry install
poetry run pip list
VERSION=$(curl --silent "https://api.github.com/repos/hadolint/hadolint/releases/latest" | \
grep '"tag_name":' | \
sed -E 's/.*"v([^"]+)".*/\1/' \
) && curl -L -o /tmp/hadolint "https://github.com/hadolint/hadolint/releases/download/v${VERSION}/hadolint-Linux-x86_64" \
&& chmod +x /tmp/hadolint
- name: Poetry check
run: |
poetry lock --check
- name: Lint with flake8
run: |
pipenv run flake8 . --ignore=E266,W503,E203,E501,W605,E128 --exclude contrib
poetry run flake8 . --ignore=E266,W503,E203,E501,W605,E128 --exclude contrib
- name: Checking format with black
run: |
pipenv run black --check .
poetry run black --check .
- name: Lint with pylint
run: |
pipenv run pylint --disable=W,C,R,E -j 0 -rn -sn prowler/
poetry run pylint --disable=W,C,R,E -j 0 -rn -sn prowler/
- name: Bandit
run: |
pipenv run bandit -q -lll -x '*_test.py,./contrib/' -r .
poetry run bandit -q -lll -x '*_test.py,./contrib/' -r .
- name: Safety
run: |
pipenv run safety check
poetry run safety check
- name: Vulture
run: |
pipenv run vulture --exclude "contrib" --min-confidence 100 .
poetry run vulture --exclude "contrib" --min-confidence 100 .
- name: Hadolint
run: |
/tmp/hadolint Dockerfile --ignore=DL3013
- name: Test with pytest
run: |
pipenv run pytest tests -n auto
poetry run pytest tests -n auto
```
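The `Install dependencies` step finds the Hadolint version to download by scraping `tag_name` out of GitHub's "latest release" API response with `grep`, then using `sed` to drop the leading `v`. A rough Python equivalent is sketched below. It assumes the input is the JSON returned by `https://api.github.com/repos/hadolint/hadolint/releases/latest`. The sample payload and version number are made up, and no network request is made.

```python
import json
import re

DOWNLOAD_TEMPLATE = (
    "https://github.com/hadolint/hadolint/releases/download/"
    "v{version}/hadolint-Linux-x86_64"
)


def version_from_release(release_json):
    """Read tag_name and strip the leading "v", like the grep/sed pipeline does."""
    tag = json.loads(release_json)["tag_name"]
    match = re.fullmatch(r'v([^"]+)', tag)
    if match is None:
        # The sed expression only rewrites tags that start with "v"; fail loudly instead.
        raise ValueError(f"unexpected tag format: {tag!r}")
    return match.group(1)


def download_url(version):
    """Rebuild the same release-asset URL the workflow passes to `curl -L -o`."""
    return DOWNLOAD_TEMPLATE.format(version=version)


sample = '{"tag_name": "v2.12.0", "name": "v2.12.0"}'  # made-up sample response
version = version_from_release(sample)
print(version)  # 2.12.0
print(download_url(version))
```

Parsing the JSON avoids depending on how the API formats whitespace, which the line-oriented `grep` relies on. The shell version stays dependency-free on the runner, which is likely why the workflow uses it.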

76

.github/workflows/pypi-release.yml

vendored

@@ -5,37 +5,75 @@ on:

|

||||

types: [published]

|

||||

|

||||

env:

|

||||

GITHUB_BRANCH: ${{ github.event.release.tag_name }}

|

||||

RELEASE_TAG: ${{ github.event.release.tag_name }}

|

||||

|

||||

jobs:

|

||||

release-prowler-job:

|

||||

runs-on: ubuntu-latest

|

||||

env:

|

||||

POETRY_VIRTUALENVS_CREATE: "false"

|

||||

name: Release Prowler to PyPI

|

||||

steps:

|

||||

# Checks-out your repository under $GITHUB_WORKSPACE, so your job can access it

|

||||

- uses: actions/checkout@v3

|

||||

with:

|

||||

ref: ${{ env.GITHUB_BRANCH }}

|

||||

- name: setup python

|

||||

uses: actions/setup-python@v2

|

||||

with:

|

||||

python-version: 3.9 #install the python needed

|

||||

|

||||

- name: Install dependencies

|

||||

run: |

|

||||

python -m pip install --upgrade pip

|

||||

pip install build toml --upgrade

|

||||

- name: Build package

|

||||

run: python -m build

|

||||

- name: Publish prowler-cloud package to PyPI

uses: pypa/gh-action-pypi-publish@release/v1

pipx install poetry

pipx inject poetry poetry-bumpversion

- name: setup python

uses: actions/setup-python@v4

with:

password: ${{ secrets.PYPI_API_TOKEN }}

python-version: 3.9

cache: 'poetry'

- name: Change version and Build package

run: |

poetry version ${{ env.RELEASE_TAG }}

git config user.name "github-actions"

git config user.email "<noreply@github.com>"

git add prowler/config/config.py pyproject.toml

git commit -m "chore(release): ${{ env.RELEASE_TAG }}" --no-verify

git tag -fa ${{ env.RELEASE_TAG }} -m "chore(release): ${{ env.RELEASE_TAG }}"

git push -f origin ${{ env.RELEASE_TAG }}

git checkout -B release-${{ env.RELEASE_TAG }}

poetry build

- name: Publish prowler package to PyPI

run: |

poetry config pypi-token.pypi ${{ secrets.PYPI_API_TOKEN }}

poetry publish

# Create pull request with new version

- name: Create Pull Request

uses: peter-evans/create-pull-request@v4

with:

token: ${{ secrets.PROWLER_ACCESS_TOKEN }}

commit-message: "chore(release): update Prowler Version to ${{ env.RELEASE_TAG }}."

base: master

branch: release-${{ env.RELEASE_TAG }}

labels: "status/waiting-for-revision, severity/low"

title: "chore(release): update Prowler Version to ${{ env.RELEASE_TAG }}"

body: |

### Description

This PR updates Prowler Version to ${{ env.RELEASE_TAG }}.

### License

By submitting this pull request, I confirm that my contribution is made under the terms of the Apache 2.0 license.

- name: Replicate PyPi Package

run: |

rm -rf ./dist && rm -rf ./build && rm -rf prowler_cloud.egg-info

rm -rf ./dist && rm -rf ./build && rm -rf prowler.egg-info

pip install toml

python util/replicate_pypi_package.py

python -m build

- name: Publish prowler package to PyPI

uses: pypa/gh-action-pypi-publish@release/v1

poetry build

- name: Publish prowler-cloud package to PyPI

run: |

poetry config pypi-token.pypi ${{ secrets.PYPI_API_TOKEN }}

poetry publish

# Create pull request to github.com/Homebrew/homebrew-core to update prowler formula

- name: Bump Homebrew formula

uses: mislav/bump-homebrew-formula-action@v2

with:

password: ${{ secrets.PYPI_API_TOKEN }}

formula-name: prowler

base-branch: release-${{ env.RELEASE_TAG }}

env:

COMMITTER_TOKEN: ${{ secrets.PROWLER_ACCESS_TOKEN }}
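
The "Replicate PyPi Package" step runs `util/replicate_pypi_package.py`, and that script's source is not part of this diff. The surrounding steps install `toml`, then build and publish the `prowler-cloud` package. From that, the script presumably renames the package before the second build. The sketch below is a hypothetical stand-in based on that assumption, not the repository's script. `rename_poetry_package` is an invented name, and the function does a line-based rewrite of the `name` key in the `[tool.poetry]` table instead of fully parsing TOML.

```python
import re

# Matches a `name = ...` key line (but not e.g. `namespace = ...`)
NAME_KEY = re.compile(r"name\s*=")


def rename_poetry_package(pyproject_text: str, new_name: str) -> str:
    """Return pyproject.toml text with the [tool.poetry] `name` replaced.

    Hypothetical helper: a line-based rewrite, not a full TOML parser.
    """
    out = []
    in_tool_poetry = False
    renamed = False
    for line in pyproject_text.splitlines(keepends=True):
        stripped = line.strip()
        if stripped.startswith("[") and stripped.endswith("]"):
            # Entering a new table; only the exact [tool.poetry] table counts
            in_tool_poetry = stripped == "[tool.poetry]"
        elif in_tool_poetry and not renamed and NAME_KEY.match(stripped):
            indent = line[: len(line) - len(line.lstrip())]
            line_ending = line[len(line.rstrip("\r\n")):]
            line = f'{indent}name = "{new_name}"{line_ending}'
            renamed = True
        out.append(line)
    if not renamed:
        raise ValueError("no `name` key found in the [tool.poetry] table")
    return "".join(out)


sample = (
    "[tool.poetry]\n"
    'name = "prowler"\n'
    'version = "3.0.0"\n'
    "\n"
    "[tool.poetry.dependencies]\n"
    'python = "^3.9"\n'
)
replicated = rename_poetry_package(sample, "prowler-cloud")
print(replicated)
```

A line-based rewrite leaves the rest of `pyproject.toml` (comments, key order, formatting) untouched. A parse-and-dump round trip with a TOML library may not preserve those details.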


@@ -52,9 +52,9 @@ jobs:

- name: Create Pull Request

uses: peter-evans/create-pull-request@v4

with:

token: ${{ secrets.GITHUB_TOKEN }}

token: ${{ secrets.PROWLER_ACCESS_TOKEN }}

commit-message: "feat(regions_update): Update regions for AWS services."

branch: "aws-services-regions-updated"

branch: "aws-services-regions-updated-${{ github.sha }}"

labels: "status/waiting-for-revision, severity/low"

title: "chore(regions_update): Changes in regions for AWS services."

body: |

@@ -1,7 +1,7 @@

repos:

## GENERAL

- repo: https://github.com/pre-commit/pre-commit-hooks

rev: v4.3.0

rev: v4.4.0

hooks:

- id: check-merge-conflict

- id: check-yaml

@@ -13,14 +13,22 @@ repos:

- id: pretty-format-json

args: ["--autofix", --no-sort-keys, --no-ensure-ascii]

## TOML

- repo: https://github.com/macisamuele/language-formatters-pre-commit-hooks

rev: v2.7.0

hooks:

- id: pretty-format-toml

args: [--autofix]

files: pyproject.toml

## BASH

- repo: https://github.com/koalaman/shellcheck-precommit

rev: v0.8.0

rev: v0.9.0

hooks:

- id: shellcheck

## PYTHON

- repo: https://github.com/myint/autoflake

rev: v1.7.7

rev: v2.0.1

hooks:

- id: autoflake

args:

@@ -31,30 +39,32 @@ repos:

]

- repo: https://github.com/timothycrosley/isort

rev: 5.10.1

rev: 5.12.0

hooks:

- id: isort

args: ["--profile", "black"]

- repo: https://github.com/psf/black

rev: 22.10.0

rev: 23.1.0

hooks:

- id: black

- repo: https://github.com/pycqa/flake8

rev: 5.0.4

rev: 6.0.0

hooks:

- id: flake8

exclude: contrib

args: ["--ignore=E266,W503,E203,E501,W605"]

- repo: https://github.com/haizaar/check-pipfile-lock

rev: v0.0.5

- repo: https://github.com/python-poetry/poetry

rev: 1.4.0 # add version here

hooks:

- id: check-pipfile-lock

- id: poetry-check

- id: poetry-lock

args: ["--no-update"]

- repo: https://github.com/hadolint/hadolint

rev: v2.12.0

rev: v2.12.1-beta

hooks:

- id: hadolint

args: ["--ignore=DL3013"]

@@ -66,6 +76,15 @@ repos:

entry: bash -c 'pylint --disable=W,C,R,E -j 0 -rn -sn prowler/'

language: system

- id: trufflehog

name: TruffleHog

description: Detect secrets in your data.

# entry: bash -c 'trufflehog git file://. --only-verified --fail'

# For running trufflehog in docker, use the following entry instead:

entry: bash -c 'docker run -v "$(pwd):/workdir" -i --rm trufflesecurity/trufflehog:latest git file:///workdir --only-verified --fail'

language: system

stages: ["commit", "push"]

- id: pytest-check

name: pytest-check

entry: bash -c 'pytest tests -n auto'

23

.readthedocs.yaml

Normal file

@@ -0,0 +1,23 @@

# .readthedocs.yaml

# Read the Docs configuration file

# See https://docs.readthedocs.io/en/stable/config-file/v2.html for details

# Required

version: 2

build:

os: "ubuntu-22.04"

tools:

python: "3.9"

jobs:

post_create_environment:

# Install poetry

# https://python-poetry.org/docs/#installing-manually

- pip install poetry

# Tell poetry to not use a virtual environment

- poetry config virtualenvs.create false

post_install:

- poetry install -E docs

mkdocs:

configuration: mkdocs.yml

@@ -16,6 +16,7 @@ USER prowler

WORKDIR /home/prowler

COPY prowler/ /home/prowler/prowler/

COPY pyproject.toml /home/prowler

COPY README.md /home/prowler

# Install dependencies

ENV HOME='/home/prowler'

@@ -24,9 +25,9 @@ ENV PATH="$HOME/.local/bin:$PATH"

RUN pip install --no-cache-dir --upgrade pip && \

pip install --no-cache-dir .

# Remove Prowler directory

# Remove Prowler directory and build files

USER 0

RUN rm -rf /home/prowler/prowler /home/prowler/pyproject.toml

RUN rm -rf /home/prowler/prowler /home/prowler/pyproject.toml /home/prowler/README.md /home/prowler/build /home/prowler/prowler.egg-info

USER prowler

ENTRYPOINT ["prowler"]

4

Makefile

@@ -24,11 +24,11 @@ lint: ## Lint Code

##@ PyPI

pypi-clean: ## Delete the distribution files

rm -rf ./dist && rm -rf ./build && rm -rf prowler_cloud.egg-info

rm -rf ./dist && rm -rf ./build && rm -rf prowler.egg-info

pypi-build: ## Build package

$(MAKE) pypi-clean && \

python3 -m build

poetry build

pypi-upload: ## Upload package

python3 -m twine upload --repository pypi dist/*
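
The `pypi-clean` rule deletes the outputs of the previous build before `pypi-build` runs. Where `rm -rf` is not available (for example, in a plain Windows shell), a rough Python equivalent could look like the sketch below. `pypi_clean` and `BUILD_ARTIFACTS` are hypothetical names, and the targets mirror the renamed `prowler.egg-info` line above.

```python
import shutil
import tempfile
from pathlib import Path

# Same targets as the Makefile's `pypi-clean` rule (after the rename to `prowler`)
BUILD_ARTIFACTS = ("dist", "build", "prowler.egg-info")


def pypi_clean(project_root):
    """Delete build artifacts under project_root, like `rm -rf` (missing paths are ignored)."""
    removed = []
    root = Path(project_root)
    for name in BUILD_ARTIFACTS:
        target = root / name
        if target.is_dir():
            shutil.rmtree(target)
            removed.append(name)
        elif target.exists():
            target.unlink()
            removed.append(name)
    return removed


# Usage against a throwaway directory that contains only some of the artifacts
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "dist").mkdir()
    (Path(tmp) / "dist" / "prowler-3.0.0.tar.gz").write_text("placeholder")
    (Path(tmp) / "prowler.egg-info").mkdir()
    removed = pypi_clean(tmp)
    leftovers = sorted(p.name for p in Path(tmp).iterdir())
print(removed, leftovers)  # ['dist', 'prowler.egg-info'] []
```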


42

Pipfile

@@ -1,42 +0,0 @@

[[source]]

url = "https://pypi.org/simple"

verify_ssl = true

name = "pypi"

[packages]

colorama = "0.4.4"

boto3 = "1.26.3"

arnparse = "0.0.2"

botocore = "1.27.8"

pydantic = "1.9.1"

schema = "0.7.5"

shodan = "1.28.0"

detect-secrets = "1.4.0"

alive-progress = "2.4.1"

tabulate = "0.9.0"

azure-identity = "1.12.0"

azure-storage-blob = "12.14.1"

msgraph-core = "0.2.2"

azure-mgmt-subscription = "3.1.1"

azure-mgmt-authorization = "3.0.0"

azure-mgmt-security = "3.0.0"

azure-mgmt-storage = "21.0.0"

[dev-packages]

black = "22.10.0"

pylint = "2.16.1"

flake8 = "5.0.4"

bandit = "1.7.4"

safety = "2.3.1"

vulture = "2.7"

moto = "4.1.2"

docker = "6.0.0"

openapi-spec-validator = "0.5.5"

pytest = "7.2.1"

pytest-xdist = "2.5.0"

coverage = "7.1.0"

sure = "2.0.1"

freezegun = "1.2.1"

[requires]

python_version = "3.9"

1703

Pipfile.lock

generated

61

README.md

@@ -11,14 +11,14 @@

</p>

<p align="center">

<a href="https://join.slack.com/t/prowler-workspace/shared_invite/zt-1hix76xsl-2uq222JIXrC7Q8It~9ZNog"><img alt="Slack Shield" src="https://img.shields.io/badge/slack-prowler-brightgreen.svg?logo=slack"></a>

<a href="https://pypi.org/project/prowler-cloud/"><img alt="Python Version" src="https://img.shields.io/pypi/v/prowler.svg"></a>

<a href="https://pypi.python.org/pypi/prowler-cloud/"><img alt="Python Version" src="https://img.shields.io/pypi/pyversions/prowler.svg"></a>

<a href="https://pypistats.org/packages/prowler"><img alt="PyPI Prowler Downloads" src="https://img.shields.io/pypi/dw/prowler.svg"></a>

<a href="https://pypistats.org/packages/prowler-cloud"><img alt="PyPI Prowler-Cloud Downloads" src="https://img.shields.io/pypi/dw/prowler-cloud.svg"></a>

<a href="https://pypi.org/project/prowler/"><img alt="Python Version" src="https://img.shields.io/pypi/v/prowler.svg"></a>

<a href="https://pypi.python.org/pypi/prowler/"><img alt="Python Version" src="https://img.shields.io/pypi/pyversions/prowler.svg"></a>

<a href="https://pypistats.org/packages/prowler"><img alt="PyPI Prowler Downloads" src="https://img.shields.io/pypi/dw/prowler.svg?label=prowler%20downloads"></a>

<a href="https://pypistats.org/packages/prowler-cloud"><img alt="PyPI Prowler-Cloud Downloads" src="https://img.shields.io/pypi/dw/prowler-cloud.svg?label=prowler-cloud%20downloads"></a>

<a href="https://hub.docker.com/r/toniblyx/prowler"><img alt="Docker Pulls" src="https://img.shields.io/docker/pulls/toniblyx/prowler"></a>

<a href="https://hub.docker.com/r/toniblyx/prowler"><img alt="Docker" src="https://img.shields.io/docker/cloud/build/toniblyx/prowler"></a>

<a href="https://hub.docker.com/r/toniblyx/prowler"><img alt="Docker" src="https://img.shields.io/docker/image-size/toniblyx/prowler"></a>

<a href="https://gallery.ecr.aws/o4g1s5r6/prowler"><img width="120" height="19" alt="AWS ECR Gallery" src="https://user-images.githubusercontent.com/3985464/151531396-b6535a68-c907-44eb-95a1-a09508178616.png"></a>

<a href="https://gallery.ecr.aws/prowler-cloud/prowler"><img width="120" height="19" alt="AWS ECR Gallery" src="https://user-images.githubusercontent.com/3985464/151531396-b6535a68-c907-44eb-95a1-a09508178616.png"></a>

</p>

<p align="center">

<a href="https://github.com/prowler-cloud/prowler"><img alt="Repo size" src="https://img.shields.io/github/repo-size/prowler-cloud/prowler"></a>

@@ -33,12 +33,17 @@

# Description

`Prowler` is an Open Source security tool to perform AWS and Azure security best practices assessments, audits, incident response, continuous monitoring, hardening and forensics readiness.

`Prowler` is an Open Source security tool to perform AWS, GCP and Azure security best practices assessments, audits, incident response, continuous monitoring, hardening and forensics readiness.

It contains hundreds of controls covering CIS, PCI-DSS, ISO27001, GDPR, HIPAA, FFIEC, SOC2, AWS FTR, ENS and custom security frameworks.

# 📖 Documentation

The full documentation can now be found at [https://docs.prowler.cloud](https://docs.prowler.cloud)

## Looking for Prowler v2 documentation?

For Prowler v2 Documentation, please go to https://github.com/prowler-cloud/prowler/tree/2.12.1.

# ⚙️ Install

## Pip package

@@ -48,6 +53,7 @@ Prowler is available as a project in [PyPI](https://pypi.org/project/prowler-clo

pip install prowler

prowler -v

```

More details at https://docs.prowler.cloud

## Containers

@@ -60,30 +66,25 @@ The available versions of Prowler are the following:

The container images are available here:

- [DockerHub](https://hub.docker.com/r/toniblyx/prowler/tags)

- [AWS Public ECR](https://gallery.ecr.aws/o4g1s5r6/prowler)

- [AWS Public ECR](https://gallery.ecr.aws/prowler-cloud/prowler)

## From GitHub

Python >= 3.9 is required with pip and pipenv:

Python >= 3.9 is required with pip and poetry:

```

git clone https://github.com/prowler-cloud/prowler

cd prowler

pipenv shell

pipenv install

poetry shell

poetry install

python prowler.py -v

```

# 📖 Documentation

|

||||

|

||||

The full documentation can now be found at [https://docs.prowler.cloud](https://docs.prowler.cloud)

|

||||

|

||||

|

||||

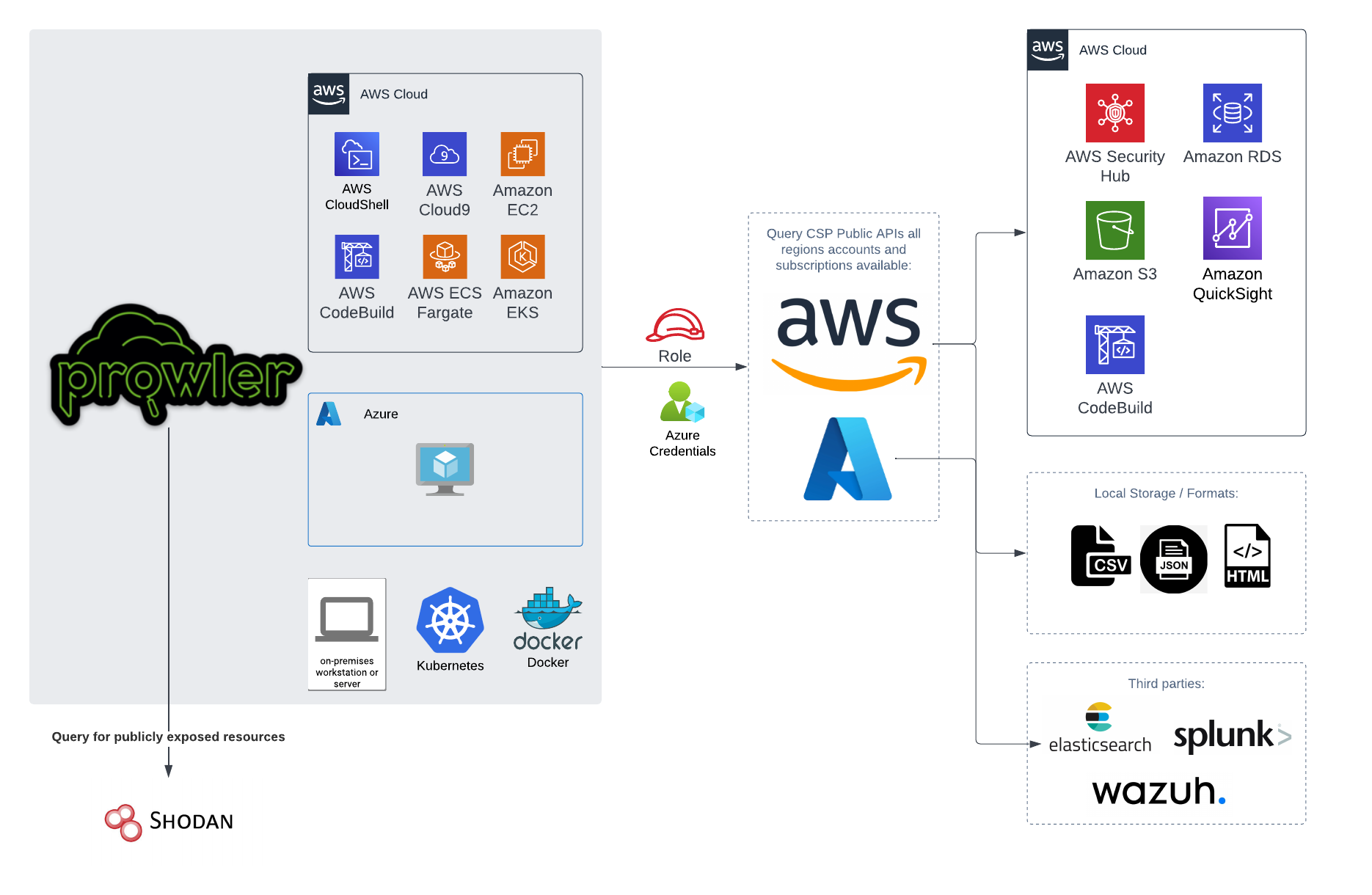

# 📐✏️ High level architecture

|

||||

|

||||

You can run Prowler from your workstation, an EC2 instance, Fargate or any other container, Codebuild, CloudShell and Cloud9.

|

||||

|

||||

|

||||

|

||||

|

||||

# 📝 Requirements

|

||||

|

||||

@@ -162,6 +163,22 @@ Regarding the subscription scope, Prowler by default scans all the subscriptions

- `Reader`

## Google Cloud Platform

Prowler follows the same credentials search order as the [Google authentication libraries](https://cloud.google.com/docs/authentication/application-default-credentials#search_order):

1. [GOOGLE_APPLICATION_CREDENTIALS environment variable](https://cloud.google.com/docs/authentication/application-default-credentials#GAC)

2. [User credentials set up by using the Google Cloud CLI](https://cloud.google.com/docs/authentication/application-default-credentials#personal)

3. [The attached service account, returned by the metadata server](https://cloud.google.com/docs/authentication/application-default-credentials#attached-sa)

Those credentials must be associated with a user or service account that has the permissions required to run all checks. To ensure this, grant the following roles to the member associated with the credentials:

- Viewer

- Security Reviewer

- Stackdriver Account Viewer

> `prowler` will scan the project associated with the credentials.

# 💻 Basic Usage

To run prowler, you will need to specify the provider (e.g. aws or azure):

@@ -236,12 +253,14 @@ prowler azure [--sp-env-auth, --az-cli-auth, --browser-auth, --managed-identity-

```

> By default, `prowler` will scan all Azure subscriptions.

# 🎉 New Features

## Google Cloud Platform

Optionally, you can provide the location of an application credential JSON file with the following argument:

```console

prowler gcp --credentials-file path

```
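
Before passing a key with `--credentials-file`, it can help to confirm that the file is a service-account key and to see which project it belongs to, since `prowler` scans the project associated with the credentials. The sketch below assumes a service-account JSON key, where `type` is `service_account`. User credentials created with the gcloud CLI have `type` set to `authorized_user` and carry no `project_id`, so this check rejects them. `project_from_key_file` is a hypothetical helper and is not part of Prowler.

```python
import json
import tempfile
from pathlib import Path

# Fields present in a Google Cloud service-account JSON key
REQUIRED_KEYS = {"type", "project_id", "private_key", "client_email"}


def project_from_key_file(path: str) -> str:
    """Return the project_id from a service-account key file (hypothetical pre-flight check)."""
    data = json.loads(Path(path).read_text(encoding="utf-8"))
    if data.get("type") != "service_account":
        raise ValueError(f"expected a service_account key, got type={data.get('type')!r}")
    missing = REQUIRED_KEYS.difference(data)
    if missing:
        raise ValueError(f"key file is missing fields: {sorted(missing)}")
    return data["project_id"]


# Usage with a throwaway, fake key file (placeholder values, not real credentials)
fake_key = {
    "type": "service_account",
    "project_id": "my-audit-project",
    "private_key": "<redacted>",
    "client_email": "prowler@my-audit-project.iam.gserviceaccount.com",
}
with tempfile.NamedTemporaryFile("w", suffix=".json", delete=False) as fh:
    json.dump(fake_key, fh)
project = project_from_key_file(fh.name)
Path(fh.name).unlink()
print(project)  # my-audit-project
```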

- Python: we removed all Bash code; Prowler is now written entirely in Python.

- Faster: huge performance improvements (scanning the same account went from 2.5 hours to 4 minutes).

- Developers and community: we have made it easier to contribute new checks and new compliance frameworks. We also included unit tests.

- Multi-cloud: in addition to AWS, we have added Azure. We plan to include GCP and OCI soon; let us know if you want to contribute!

# 📃 License

@@ -79,3 +79,21 @@ Regarding the subscription scope, Prowler by default scans all the subscriptions

- `Security Reader`

- `Reader`

## Google Cloud

### GCP Authentication

Prowler follows the same credentials search order as the [Google authentication libraries](https://cloud.google.com/docs/authentication/application-default-credentials#search_order):

1. [GOOGLE_APPLICATION_CREDENTIALS environment variable](https://cloud.google.com/docs/authentication/application-default-credentials#GAC)

2. [User credentials set up by using the Google Cloud CLI](https://cloud.google.com/docs/authentication/application-default-credentials#personal)

3. [The attached service account, returned by the metadata server](https://cloud.google.com/docs/authentication/application-default-credentials#attached-sa)

Those credentials must be associated with a user or service account that has the permissions required to run all checks. To ensure this, grant the following roles to the member associated with the credentials:

- Viewer

- Security Reviewer

- Stackdriver Account Viewer

> `prowler` will scan the project associated with the credentials.
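
To make the search order concrete, here is a simplified model that reports which of the three sources would be picked first. It is an illustration only. The real lookup is done by the `google-auth` library (`google.auth.default()`), which also handles Windows paths, file validation and error cases that this sketch skips. `adc_source` and its parameters are hypothetical. Passing `path_exists` and `on_gcp` in as arguments keeps the example testable without touching the filesystem or a metadata server.

```python
from pathlib import Path
from typing import Callable, Mapping


def adc_source(
    env: Mapping[str, str],
    home: Path,
    path_exists: Callable[[Path], bool] = Path.exists,
    on_gcp: bool = False,
) -> str:
    """Return which credential source the ADC search order would pick (simplified model)."""
    # 1. GOOGLE_APPLICATION_CREDENTIALS environment variable wins if it is set
    key_file = env.get("GOOGLE_APPLICATION_CREDENTIALS")
    if key_file:
        return f"env:{key_file}"
    # 2. User credentials written by `gcloud auth application-default login`
    #    (Linux/macOS location; Windows uses a directory under %APPDATA% instead)
    user_creds = home / ".config" / "gcloud" / "application_default_credentials.json"
    if path_exists(user_creds):
        return f"gcloud:{user_creds}"
    # 3. Attached service account, served by the metadata server on Google Cloud
    if on_gcp:
        return "metadata-server"
    raise LookupError("no Application Default Credentials found")


print(adc_source({}, Path("/home/alice"), path_exists=lambda p: False, on_gcp=True))  # metadata-server
```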

Size: 258 KiB before, 283 KiB after

BIN

docs/img/output-html.png

Normal file

Size: 631 KiB

BIN

docs/img/quick-inventory.jpg

Normal file

Size: 320 KiB

Size before: 220 KiB

@@ -5,7 +5,7 @@

# Prowler Documentation

**Welcome to [Prowler Open Source v3](https://github.com/prowler-cloud/prowler/) Documentation!** 📄

**Welcome to [Prowler Open Source v3](https://github.com/prowler-cloud/prowler/) Documentation!** 📄

For **Prowler v2 Documentation**, please go [here](https://github.com/prowler-cloud/prowler/tree/2.12.0) to see that branch and its README.md.

@@ -16,7 +16,7 @@ For **Prowler v2 Documentation**, please go [here](https://github.com/prowler-cl

## About Prowler

**Prowler** is an Open Source security tool to perform AWS and Azure security best practices assessments, audits, incident response, continuous monitoring, hardening and forensics readiness.

**Prowler** is an Open Source security tool to perform AWS, Azure and Google Cloud security best practices assessments, audits, incident response, continuous monitoring, hardening and forensics readiness.

It contains hundreds of controls covering CIS, PCI-DSS, ISO27001, GDPR, HIPAA, FFIEC, SOC2, AWS FTR, ENS and custom security frameworks.

@@ -40,7 +40,7 @@ Prowler is available as a project in [PyPI](https://pypi.org/project/prowler-clo

* `Python >= 3.9`

* `pip` for `Python >= 3.9`

* AWS and/or Azure credentials

* AWS, GCP and/or Azure credentials

_Commands_:

@@ -54,7 +54,7 @@ Prowler is available as a project in [PyPI](https://pypi.org/project/prowler-clo

_Requirements_:

* Have `docker` installed: https://docs.docker.com/get-docker/.

* AWS and/or Azure credentials

* AWS, GCP and/or Azure credentials

* In the command below, change the host path in the `-v` option to your local directory so you can access the reports.

_Commands_:

@@ -71,7 +71,7 @@ Prowler is available as a project in [PyPI](https://pypi.org/project/prowler-clo

_Requirements for Ubuntu 20.04.3 LTS_:

* AWS and/or Azure credentials

* AWS, GCP and/or Azure credentials

* Install python 3.9 with: `sudo apt-get install python3.9`

* Remove python 3.8 to avoid conflicts if you can: `sudo apt-get remove python3.8`

* Make sure you have the python3 distutils package installed: `sudo apt-get install python3-distutils`

@@ -87,11 +87,28 @@ Prowler is available as a project in [PyPI](https://pypi.org/project/prowler-clo

prowler -v

```

=== "GitHub"

_Requirements for Developers_:

* AWS, GCP and/or Azure credentials

* `git`, `Python >= 3.9`, `pip` and `poetry` installed (`pip install poetry`)

_Commands_:

```

git clone https://github.com/prowler-cloud/prowler

cd prowler

poetry shell

poetry install

python prowler.py -v

```

=== "Amazon Linux 2"

_Requirements_:

* AWS and/or Azure credentials

* AWS, GCP and/or Azure credentials

* The latest Amazon Linux 2 should come with Python 3.9 already installed; however, it may need pip. Install pip for Python 3.9 with: `sudo dnf install -y python3-pip`.

* Make sure setuptools for Python is already installed with: `pip3 install setuptools`

@@ -103,6 +120,20 @@ Prowler is available as a project in [PyPI](https://pypi.org/project/prowler-clo

prowler -v

```

=== "Brew"

_Requirements_:

* `Brew` installed on your Mac or Linux machine

* AWS, GCP and/or Azure credentials

_Commands_:

``` bash

brew install prowler

prowler -v

```

=== "AWS CloudShell"

Prowler can easily be executed in AWS CloudShell, but it has some prerequisites. AWS CloudShell is a container running `Amazon Linux release 2 (Karoo)` that comes with Python 3.7; since Prowler requires Python >= 3.9, we first need to install a newer version of Python. Follow the steps below to successfully execute Prowler v3 in AWS CloudShell:


@@ -118,7 +149,7 @@ Prowler is available as a project in [PyPI](https://pypi.org/project/prowler-clo

./configure --enable-optimizations

sudo make altinstall

python3.9 --version