Compare commits

79 Commits

| SHA1 |
|---|
| fad5a1937c |
| 635c257502 |
| 58a38c08d7 |
| 8fbee7737b |
| e84f5f184e |
| 0bd26b19d7 |
| 64f82d5d51 |
| f63ff994ce |
| a10ee43271 |
| 54ed29e08d |
| cc097e7a3f |
| 5de92ada43 |
| 0c546211cf |
| 4dc5a3a67c |
| c51b226ceb |
| 0a5ca6cf74 |
| 96957219e4 |
| 32b7620db3 |
| 347f65e089 |
| 16628a427e |
| ed16034a25 |
| 0c5f144e41 |
| acc7d6e7dc |
| 84b4139052 |
| 9943643958 |
| 9ceaefb663 |
| ec03ea5bc1 |
| 5855633c1f |
| a53bc2bc2e |
| 88445820ed |
| 044ed3ae98 |
| 6f48012234 |
| d344318dd4 |
| 6273dd3d83 |
| 0f3f3cbffd |
| 3244123b21 |
| cba2ee3622 |
| 25ed925df5 |
| 8c5bd60bab |
| c5510556a7 |
| bbcfca84ef |
| 1260e94c2a |
| 8a02574303 |
| c930f08348 |
| 5204acb5d0 |
| 784aaa98c9 |
| 745e2494bc |
| c00792519d |
| 142fe5a12c |
| 5b127f232e |
| c22bf01003 |
| 05e4911d6f |
| 9b551ef0ba |
| 56a8bb2349 |
| 8503c6a64d |
| 820f18da4d |
| 51a2432ebf |
| 6639534e97 |
| 0621577c7d |
| 26a507e3db |
| 244b540fe0 |
| 030ca4c173 |
| 88a2810f29 |
| 9164ee363a |
| 4cd47fdcc5 |
| 708852a3cb |
| 4a93bdf3ea |
| 22e7d2a811 |
| 93eca1dff2 |
| 9afe7408cd |
| 5dc2347a25 |
| e3a0124b10 |
| 16af89c281 |
| 621e4258c8 |
| ac6272e739 |
| 6e84f517a9 |
| fdbdb3ad86 |
| 7adcf5ca46 |
| fe6716cf76 |

.github/workflows/pypi-release.yml (1 changed line, vendored)

@@ -35,6 +35,7 @@ jobs:
git commit -m "chore(release): ${{ env.RELEASE_TAG }}" --no-verify
git tag -fa ${{ env.RELEASE_TAG }} -m "chore(release): ${{ env.RELEASE_TAG }}"
git push -f origin ${{ env.RELEASE_TAG }}
git checkout -B release-${{ env.RELEASE_TAG }}
poetry build
- name: Publish prowler package to PyPI
run: |

@@ -61,6 +61,7 @@ repos:
hooks:
- id: poetry-check
- id: poetry-lock
args: ["--no-update"]

- repo: https://github.com/hadolint/hadolint
rev: v2.12.1-beta

@@ -75,6 +76,15 @@ repos:
entry: bash -c 'pylint --disable=W,C,R,E -j 0 -rn -sn prowler/'
language: system

- id: trufflehog
name: TruffleHog
description: Detect secrets in your data.
# entry: bash -c 'trufflehog git file://. --only-verified --fail'
# For running trufflehog in docker, use the following entry instead:
entry: bash -c 'docker run -v "$(pwd):/workdir" -i --rm trufflesecurity/trufflehog:latest git file:///workdir --only-verified --fail'
language: system
stages: ["commit", "push"]

- id: pytest-check
name: pytest-check
entry: bash -c 'pytest tests -n auto'

README.md (59 changed lines)

@@ -11,11 +11,10 @@
</p>
<p align="center">
<a href="https://join.slack.com/t/prowler-workspace/shared_invite/zt-1hix76xsl-2uq222JIXrC7Q8It~9ZNog"><img alt="Slack Shield" src="https://img.shields.io/badge/slack-prowler-brightgreen.svg?logo=slack"></a>
<a href="https://pypi.org/project/prowler-cloud/"><img alt="Python Version" src="https://img.shields.io/pypi/v/prowler.svg"></a>
<a href="https://pypi.python.org/pypi/prowler-cloud/"><img alt="Python Version" src="https://img.shields.io/pypi/pyversions/prowler.svg"></a>
<a href="https://pypi.org/project/prowler/"><img alt="Python Version" src="https://img.shields.io/pypi/v/prowler.svg"></a>
<a href="https://pypi.python.org/pypi/prowler/"><img alt="Python Version" src="https://img.shields.io/pypi/pyversions/prowler.svg"></a>
<a href="https://pypistats.org/packages/prowler"><img alt="PyPI Prowler Downloads" src="https://img.shields.io/pypi/dw/prowler.svg?label=prowler%20downloads"></a>
<a href="https://pypistats.org/packages/prowler-cloud"><img alt="PyPI Prowler-Cloud Downloads" src="https://img.shields.io/pypi/dw/prowler-cloud.svg?label=prowler-cloud%20downloads"></a>
<a href="https://formulae.brew.sh/formula/prowler#default"><img alt="Brew Prowler Downloads" src="https://img.shields.io/homebrew/installs/dm/prowler?label=brew%20downloads"></a>
<a href="https://hub.docker.com/r/toniblyx/prowler"><img alt="Docker Pulls" src="https://img.shields.io/docker/pulls/toniblyx/prowler"></a>
<a href="https://hub.docker.com/r/toniblyx/prowler"><img alt="Docker" src="https://img.shields.io/docker/cloud/build/toniblyx/prowler"></a>
<a href="https://hub.docker.com/r/toniblyx/prowler"><img alt="Docker" src="https://img.shields.io/docker/image-size/toniblyx/prowler"></a>

@@ -85,7 +84,7 @@ python prowler.py -v

|

||||

|

||||

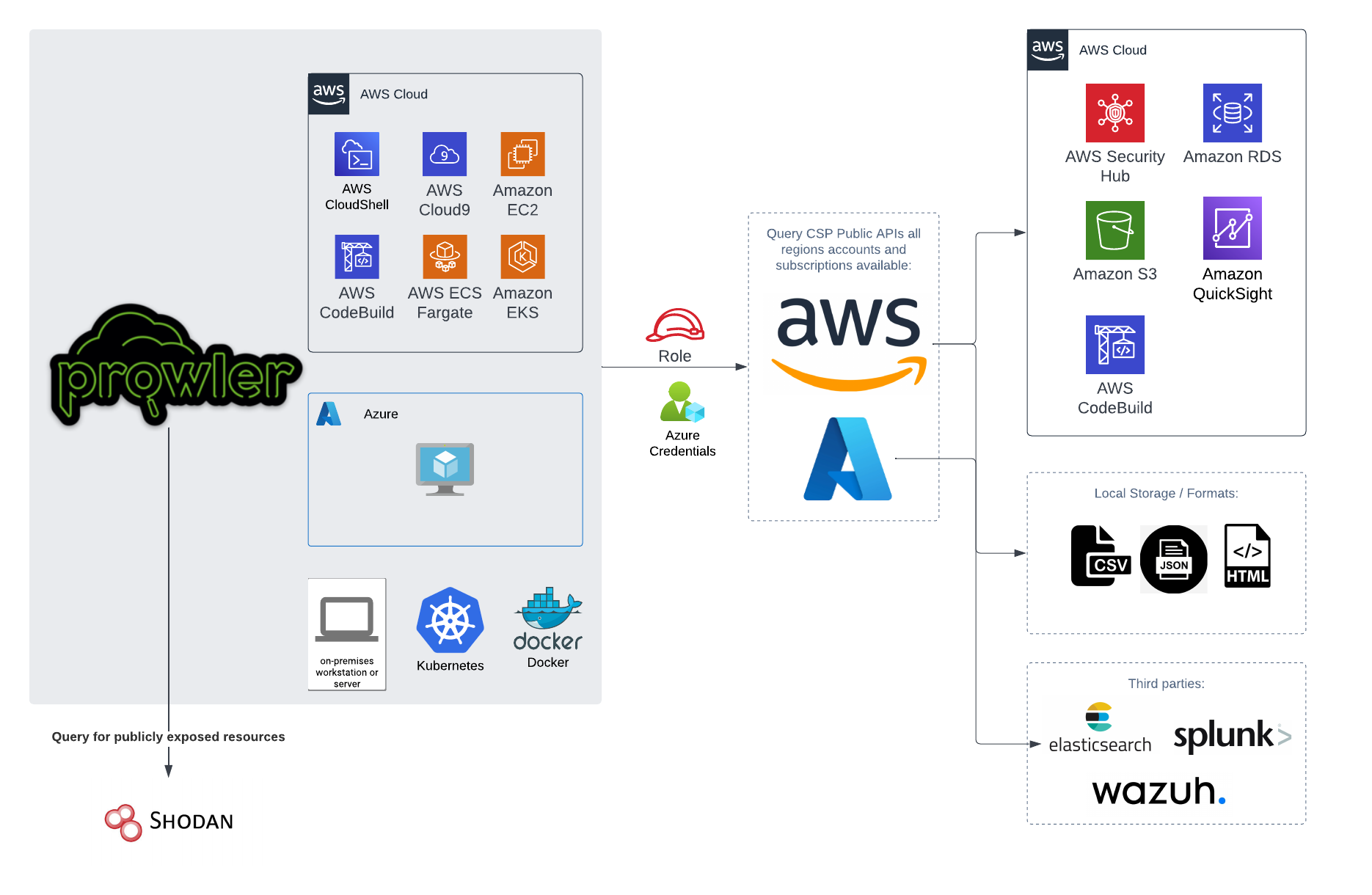

You can run Prowler from your workstation, an EC2 instance, Fargate or any other container, Codebuild, CloudShell and Cloud9.

|

||||

|

||||

|

||||

|

||||

|

||||

# 📝 Requirements

|

||||

|

||||

@@ -116,22 +115,6 @@ Those credentials must be associated to a user or role with proper permissions t

> If you want Prowler to send findings to [AWS Security Hub](https://aws.amazon.com/security-hub), make sure you also attach the custom policy [prowler-security-hub.json](https://github.com/prowler-cloud/prowler/blob/master/permissions/prowler-security-hub.json).

## Google Cloud Platform

Prowler will follow the same credentials search as [Google authentication libraries](https://cloud.google.com/docs/authentication/application-default-credentials#search_order):

1. [GOOGLE_APPLICATION_CREDENTIALS environment variable](https://cloud.google.com/docs/authentication/application-default-credentials#GAC)
2. [User credentials set up by using the Google Cloud CLI](https://cloud.google.com/docs/authentication/application-default-credentials#personal)
3. [The attached service account, returned by the metadata server](https://cloud.google.com/docs/authentication/application-default-credentials#attached-sa)

Those credentials must be associated to a user or service account with proper permissions to do all checks. To make sure, add the following roles to the member associated with the credentials:

- Viewer
- Security Reviewer
- Stackdriver Account Viewer

> `prowler` will scan the project associated with the credentials.

## Azure

Prowler for Azure supports the following authentication types:

@@ -180,6 +163,22 @@ Regarding the subscription scope, Prowler by default scans all the subscriptions
- `Reader`

## Google Cloud Platform

Prowler will follow the same credentials search as [Google authentication libraries](https://cloud.google.com/docs/authentication/application-default-credentials#search_order):

1. [GOOGLE_APPLICATION_CREDENTIALS environment variable](https://cloud.google.com/docs/authentication/application-default-credentials#GAC)
2. [User credentials set up by using the Google Cloud CLI](https://cloud.google.com/docs/authentication/application-default-credentials#personal)
3. [The attached service account, returned by the metadata server](https://cloud.google.com/docs/authentication/application-default-credentials#attached-sa)

Those credentials must be associated to a user or service account with proper permissions to do all checks. To make sure, add the following roles to the member associated with the credentials:

- Viewer
- Security Reviewer
- Stackdriver Account Viewer

> `prowler` will scan the project associated with the credentials.

# 💻 Basic Usage

To run prowler, you will need to specify the provider (e.g aws or azure):

@@ -245,14 +244,6 @@ prowler aws --profile custom-profile -f us-east-1 eu-south-2
```
> By default, `prowler` will scan all AWS regions.

## Google Cloud Platform

Optionally, you can provide the location of an application credential JSON file with the following argument:

```console
prowler gcp --credentials-file path
```

## Azure

With Azure you need to specify which auth method is going to be used:

@@ -262,12 +253,14 @@ prowler azure [--sp-env-auth, --az-cli-auth, --browser-auth, --managed-identity-
```
> By default, `prowler` will scan all Azure subscriptions.

# 🎉 New Features
## Google Cloud Platform

Optionally, you can provide the location of an application credential JSON file with the following argument:

```console
prowler gcp --credentials-file path
```

- Python: we got rid of all bash and it is now all in Python.
- Faster: huge performance improvements (same account from 2.5 hours to 4 minutes).
- Developers and community: we have made it easier to contribute with new checks and new compliance frameworks. We also included unit tests.
- Multi-cloud: in addition to AWS, we have added Azure, we plan to include GCP and OCI soon, let us know if you want to contribute!

# 📃 License

@@ -30,24 +30,6 @@ Those credentials must be associated to a user or role with proper permissions t

> If you want Prowler to send findings to [AWS Security Hub](https://aws.amazon.com/security-hub), make sure you also attach the custom policy [prowler-security-hub.json](https://github.com/prowler-cloud/prowler/blob/master/permissions/prowler-security-hub.json).

## Google Cloud

### GCP Authentication

Prowler will follow the same credentials search as [Google authentication libraries](https://cloud.google.com/docs/authentication/application-default-credentials#search_order):

1. [GOOGLE_APPLICATION_CREDENTIALS environment variable](https://cloud.google.com/docs/authentication/application-default-credentials#GAC)
2. [User credentials set up by using the Google Cloud CLI](https://cloud.google.com/docs/authentication/application-default-credentials#personal)
3. [The attached service account, returned by the metadata server](https://cloud.google.com/docs/authentication/application-default-credentials#attached-sa)

Those credentials must be associated to a user or service account with proper permissions to do all checks. To make sure, add the following roles to the member associated with the credentials:

- Viewer
- Security Reviewer
- Stackdriver Account Viewer

> `prowler` will scan the project associated with the credentials.

## Azure

Prowler for azure supports the following authentication types:

@@ -97,3 +79,21 @@ Regarding the subscription scope, Prowler by default scans all the subscriptions

- `Security Reader`
- `Reader`

## Google Cloud

### GCP Authentication

Prowler will follow the same credentials search as [Google authentication libraries](https://cloud.google.com/docs/authentication/application-default-credentials#search_order):

1. [GOOGLE_APPLICATION_CREDENTIALS environment variable](https://cloud.google.com/docs/authentication/application-default-credentials#GAC)
2. [User credentials set up by using the Google Cloud CLI](https://cloud.google.com/docs/authentication/application-default-credentials#personal)
3. [The attached service account, returned by the metadata server](https://cloud.google.com/docs/authentication/application-default-credentials#attached-sa)

Those credentials must be associated to a user or service account with proper permissions to do all checks. To make sure, add the following roles to the member associated with the credentials:

- Viewer
- Security Reviewer
- Stackdriver Account Viewer

> `prowler` will scan the project associated with the credentials.

Binary image changed: 258 KiB before, 283 KiB after.

@@ -256,25 +256,6 @@ prowler aws --profile custom-profile -f us-east-1 eu-south-2

See more details about AWS Authentication in [Requirements](getting-started/requirements.md)

### Google Cloud

Prowler will use by default your User Account credentials, you can configure it using:

- `gcloud init` to use a new account
- `gcloud config set account <account>` to use an existing account

Then, obtain your access credentials using: `gcloud auth application-default login`

Otherwise, you can generate and download Service Account keys in JSON format (refer to https://cloud.google.com/iam/docs/creating-managing-service-account-keys) and provide the location of the file with the following argument:

```console
prowler gcp --credentials-file path
```

> `prowler` will scan the GCP project associated with the credentials.

See more details about GCP Authentication in [Requirements](getting-started/requirements.md)

### Azure

With Azure you need to specify which auth method is going to be used:

@@ -299,3 +280,22 @@ Prowler by default scans all the subscriptions that is allowed to scan, if you w
```console
prowler azure --az-cli-auth --subscription-ids <subscription ID 1> <subscription ID 2> ... <subscription ID N>
```

### Google Cloud

Prowler will use by default your User Account credentials, you can configure it using:

- `gcloud init` to use a new account
- `gcloud config set account <account>` to use an existing account

Then, obtain your access credentials using: `gcloud auth application-default login`

Otherwise, you can generate and download Service Account keys in JSON format (refer to https://cloud.google.com/iam/docs/creating-managing-service-account-keys) and provide the location of the file with the following argument:

```console
prowler gcp --credentials-file path
```

> `prowler` will scan the GCP project associated with the credentials.

See more details about GCP Authentication in [Requirements](getting-started/requirements.md)

@@ -7,9 +7,10 @@ You can use `-w`/`--allowlist-file` with the path of your allowlist yaml file, b

## Allowlist Yaml File Syntax

### Account, Check and/or Region can be * to apply for all the cases
### Resources is a list that can have either Regex or Keywords
### Tags is an optional list containing tuples of 'key=value'
### Account, Check and/or Region can be * to apply for all the cases.
### Resources and tags are lists that can have either Regex or Keywords.
### Tags is an optional list that matches on tuples of 'key=value' and are "ANDed" together.
### Use an alternation Regex to match one of multiple tags with "ORed" logic.
########################### ALLOWLIST EXAMPLE ###########################
Allowlist:
Accounts:
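The comment lines above say that Account, Check and Region accept `*` and that Resources hold regexes or keywords. Below is a minimal Python sketch of how such rules could be evaluated over an allowlist that has already been parsed from YAML into a dictionary. It is not Prowler's implementation. The helper names, the sample data, the literal region comparison and the use of `re.search` for check names and resources are all assumptions, and tags are ignored here.

```python
import re
from typing import Any, Dict


def matches(pattern: str, value: str) -> bool:
    """'*' matches anything; any other pattern is searched as a regex,
    so a plain keyword such as "test" matches every value containing it."""
    return pattern == "*" or re.search(pattern, value) is not None


def is_allowlisted(allowlist: Dict[str, Any], account: str, check: str,
                   region: str, resource: str) -> bool:
    """Hypothetical lookup: True if any account/check rule covers the finding."""
    accounts = allowlist.get("Allowlist", {}).get("Accounts", {})
    for account_key, account_rules in accounts.items():
        # The account key must be the literal account ID or the '*' wildcard.
        if account_key not in ("*", account):
            continue
        for check_key, rule in account_rules.get("Checks", {}).items():
            if not matches(check_key, check):
                continue
            regions = rule.get("Regions", [])
            if "*" not in regions and region not in regions:
                continue
            if any(matches(pattern, resource) for pattern in rule.get("Resources", [])):
                return True
    return False


# Hypothetical data modeled on the example file (the Regions value is made up).
allowlist = {
    "Allowlist": {
        "Accounts": {
            "123456789012": {
                "Checks": {
                    "iam_user_hardware_mfa_enabled": {
                        "Regions": ["us-east-1"],
                        "Resources": ["user-1", "user-2"],
                    },
                    "ec2_*": {"Regions": ["*"], "Resources": ["*"]},
                }
            }
        }
    }
}

print(is_allowlisted(allowlist, "123456789012", "ec2_example_check", "eu-west-1", "vol-1"))  # True
print(is_allowlisted(allowlist, "123456789012", "iam_user_hardware_mfa_enabled", "eu-west-1", "user-1"))  # False
```

This sketch searches check names as regexes. Under that rule, `ec2_*` reads as "`ec2` followed by any number of underscores", so it matches any check name that contains `ec2`. An anchored pattern such as `^ec2_` would be stricter.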

@@ -21,14 +22,19 @@ You can use `-w`/`--allowlist-file` with the path of your allowlist yaml file, b
Resources:
- "user-1" # Will ignore user-1 in check iam_user_hardware_mfa_enabled
- "user-2" # Will ignore user-2 in check iam_user_hardware_mfa_enabled
"ec2_*":
Regions:
- "*"
Resources:
- "*" # Will ignore every EC2 check in every account and region
"*":
Regions:
- "*"
Resources:
- "test" # Will ignore every resource containing the string "test" and the tags 'test=test' and 'project=test' in account 123456789012 and every region
- "test"
Tags:
- "test=test" # Will ignore every resource containing the string "test" and the tags 'test=test' and 'project=test' in account 123456789012 and every region
- "project=test"
- "test=test" # Will ignore every resource containing the string "test" and the tags 'test=test' and
- "project=test|project=stage" # either of ('project=test' OR project=stage) in account 123456789012 and every region

"*":
Checks:
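The updated comments describe tag rules as entries that are ANDed together, with regex alternation inside one entry providing OR logic. The Python sketch below illustrates that rule. The function name, representing resource tags as `key=value` strings, and matching each entry with `re.fullmatch` are assumptions made for illustration. Prowler's own matcher may compare tags differently.

```python
import re
from typing import Dict, List


def tags_match(allowlist_tags: List[str], resource_tags: Dict[str, str]) -> bool:
    """Hypothetical helper: True if every allowlist tag pattern matches at least
    one of the resource's tags, rendered as 'key=value' strings.

    - Entries in allowlist_tags are ANDed: all of them must match.
    - One entry can use regex alternation ('a=b|c=d') for OR logic.
    """
    rendered = [f"{key}={value}" for key, value in resource_tags.items()]
    return all(
        any(re.fullmatch(pattern, tag) for tag in rendered)
        for pattern in allowlist_tags
    )


rules = ["test=test", "project=test|project=stage"]
print(tags_match(rules, {"test": "test", "project": "stage"}))  # True
print(tags_match(rules, {"test": "test", "project": "prod"}))   # False
print(tags_match(rules, {"project": "test"}))                   # False: 'test=test' is missing
```

With `re.fullmatch`, the pattern `test=test` does not also match a tag such as `test=testing`. With `re.search`, any tag that contains the pattern would count as a match.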

docs/tutorials/aws/regions-and-partitions.md (81 changed lines)

@@ -0,0 +1,81 @@
# AWS Regions and Partitions

By default, Prowler can scan the following AWS partitions:

- Commercial: `aws`
- China: `aws-cn`
- GovCloud (US): `aws-us-gov`

> To check the available regions for each partition and service, refer to [aws_regions_by_service.json](https://github.com/prowler-cloud/prowler/blob/master/prowler/providers/aws/aws_regions_by_service.json).

To scan the China (`aws-cn`) or GovCloud (`aws-us-gov`) partitions, you must either have a valid region for that partition configured in your AWS credentials or specify the regions to audit in that partition with the `-f/--region` flag.
> Please refer to https://boto3.amazonaws.com/v1/documentation/api/latest/guide/credentials.html#configuring-credentials for more information about configuring AWS credentials.

You can find more information about the available partitions and regions in this [Botocore](https://github.com/boto/botocore) [file](https://github.com/boto/botocore/blob/22a19ea7c4c2c4dd7df4ab8c32733cba0c7597a4/botocore/data/partitions.json).
## AWS China

To scan your AWS account in the China partition (`aws-cn`):

- Using the `-f/--region` flag:
```
prowler aws --region cn-north-1 cn-northwest-1
```
- Using the region configured in your AWS profile at `~/.aws/credentials` or `~/.aws/config`:
```
[default]
aws_access_key_id = XXXXXXXXXXXXXXXXXXX
aws_secret_access_key = XXXXXXXXXXXXXXXXXXX
region = cn-north-1
```
> With this option, all the regions of the partition will be scanned without needing the `-f/--region` flag.

## AWS GovCloud (US)

To scan your AWS account in the GovCloud (US) partition (`aws-us-gov`):

- Using the `-f/--region` flag:
```
prowler aws --region us-gov-east-1 us-gov-west-1
```
- Using the region configured in your AWS profile at `~/.aws/credentials` or `~/.aws/config`:
```
[default]
aws_access_key_id = XXXXXXXXXXXXXXXXXXX
aws_secret_access_key = XXXXXXXXXXXXXXXXXXX
region = us-gov-east-1
```
> With this option, all the regions of the partition will be scanned without needing the `-f/--region` flag.

## AWS ISO (US & Europe)

The AWS ISO partitions, known as "secret partitions", are air-gapped from the Internet, and there is no built-in way to scan them. To audit an AWS account in one of the AWS ISO partitions, manually update [aws_regions_by_service.json](https://github.com/prowler-cloud/prowler/blob/master/prowler/providers/aws/aws_regions_by_service.json) to include the partition, regions and services, e.g.:
```json
"iam": {
"regions": {
"aws": [
"eu-west-1",
"us-east-1"
],
"aws-cn": [
"cn-north-1",
"cn-northwest-1"
],
"aws-us-gov": [
"us-gov-east-1",
"us-gov-west-1"
],
"aws-iso": [
"aws-iso-global",
"us-iso-east-1",
"us-iso-west-1"
],
"aws-iso-b": [
"aws-iso-b-global",
"us-isob-east-1"
],
"aws-iso-e": []
}
},
```
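The page makes two technical points. First, a region for a partition in your credentials is enough to target that partition. Second, ISO partitions must be added by hand to the regions file. The Python sketch below covers both. It infers the partition from a region name read from an INI profile, and it adds a partition's regions to a service entry in a dictionary shaped like the snippet above. The region-prefix table is a simplification, and the function names are hypothetical. The top-level `services` key is also an assumption about the file's layout, not a confirmed detail of Prowler's file.

```python
import configparser
import json
from typing import Dict, List, Optional

# Simplified region-prefix table (an assumption, not an exhaustive list).
REGION_PREFIXES = [
    ("cn-", "aws-cn"),
    ("us-gov-", "aws-us-gov"),
    ("us-isob-", "aws-iso-b"),
    ("us-iso-", "aws-iso"),
]


def partition_for_region(region: str) -> str:
    """Map a region name to its partition; anything unmatched is commercial 'aws'."""
    for prefix, partition in REGION_PREFIXES:
        if region.startswith(prefix):
            return partition
    return "aws"


def profile_region(config_text: str, profile: str = "default") -> Optional[str]:
    """Return the 'region' value of a profile section in INI text, or None.

    In ~/.aws/config, named profiles use '[profile NAME]' section headers.
    """
    parser = configparser.ConfigParser()
    parser.read_string(config_text)
    return parser.get(profile, "region", fallback=None)


def add_partition_regions(regions_by_service: Dict, service: str,
                          partition: str, regions: List[str]) -> None:
    """Add or replace one partition's region list for a service, in place."""
    entry = regions_by_service["services"].setdefault(service, {"regions": {}})
    entry["regions"][partition] = regions


credentials = """
[default]
aws_access_key_id = XXXXXXXXXXXXXXXXXXX
aws_secret_access_key = XXXXXXXXXXXXXXXXXXX
region = cn-north-1
"""
region = profile_region(credentials)
print(region, partition_for_region(region))  # cn-north-1 aws-cn

data = {"services": {"iam": {"regions": {"aws": ["eu-west-1", "us-east-1"]}}}}
add_partition_regions(data, "iam", "aws-iso", ["aws-iso-global", "us-iso-east-1", "us-iso-west-1"])
print(json.dumps(data["services"]["iam"]["regions"], indent=2))
```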

@@ -29,14 +29,34 @@ prowler -S -f eu-west-1

> **Note 1**: It is recommended to send only failed findings to Security Hub, which you can do by adding `-q` to the command.

> **Note 2**: Since Prowler perform checks to all regions by defauls you may need to filter by region when runing Security Hub integration, as shown in the example above. Remember to enable Security Hub in the region or regions you need by calling `aws securityhub enable-security-hub --region <region>` and run Prowler with the option `-f <region>` (if no region is used it will try to push findings in all regions hubs).
> **Note 2**: Since Prowler performs checks in all regions by default, you may need to filter by region when running the Security Hub integration, as shown in the example above. Remember to enable Security Hub in the region or regions you need by calling `aws securityhub enable-security-hub --region <region>` and run Prowler with the option `-f <region>` (if no region is used, it will try to push findings to the hubs in all regions). Prowler will send findings to the Security Hub in the region where the scanned resource is located.

> **Note 3** to have updated findings in Security Hub you have to run Prowler periodically. Once a day or every certain amount of hours.
> **Note 3**: To keep findings in Security Hub up to date, you have to run Prowler periodically, for example once a day or every few hours.

Once you run Prowler for the first time, you will be able to see its findings in the Findings section:

## Send findings to Security Hub assuming an IAM Role

When you are auditing a multi-account AWS environment, you can send findings to the Security Hub of another account by assuming an IAM role from that account with the `-R` flag:

```sh
prowler -S -R arn:aws:iam::123456789012:role/ProwlerExecRole
```

> Remember that the assumed role needs permissions to send findings to Security Hub. For the required permissions, refer to the IAM policy [prowler-security-hub.json](https://github.com/prowler-cloud/prowler/blob/master/permissions/prowler-security-hub.json).

## Send only failed findings to Security Hub

When using Security Hub, it is recommended to send only failed findings. To follow that recommendation, add the `-q` flag to the Prowler command:

```sh
prowler -S -q
```

## Skip sending updates of findings to Security Hub

By default, Prowler archives all its findings in Security Hub that have not appeared in the last scan.
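The notes above describe two filters that apply before findings reach Security Hub. The `-q` flag keeps only failed findings, and each finding is sent to the hub in the region of the scanned resource. The Python sketch below shows that filtering and grouping on plain data. The `Finding` type, its fields and `findings_per_region` are hypothetical, and the code does not call Security Hub.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional, Set


@dataclass
class Finding:
    """Hypothetical, minimal finding record used only for this illustration."""
    check_id: str
    region: str
    status: str  # "PASS" or "FAIL"


def findings_per_region(findings: Iterable[Finding], quiet: bool = False,
                        regions: Optional[Set[str]] = None) -> Dict[str, List[Finding]]:
    """Group findings by the region of the scanned resource.

    quiet: keep only FAIL findings (the behaviour described for `-q`).
    regions: keep only these regions (like filtering with `-f`); None keeps all.
    """
    grouped: Dict[str, List[Finding]] = defaultdict(list)
    for finding in findings:
        if quiet and finding.status != "FAIL":
            continue
        if regions is not None and finding.region not in regions:
            continue
        grouped[finding.region].append(finding)
    return dict(grouped)


findings = [
    Finding("check_a", "eu-west-1", "FAIL"),
    Finding("check_b", "us-east-1", "PASS"),
    Finding("check_c", "us-east-1", "FAIL"),
]
batches = findings_per_region(findings, quiet=True)
print({region: len(items) for region, items in batches.items()})
# {'eu-west-1': 1, 'us-east-1': 1}
```

Each per-region batch would then go to that region's hub. This is why Security Hub must be enabled in every region you keep.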

docs/tutorials/gcp/authentication.md (29 changed lines)

@@ -0,0 +1,29 @@
# GCP authentication

By default, Prowler uses your User Account credentials. You can configure them using:

- `gcloud init` to use a new account
- `gcloud config set account <account>` to use an existing account

Then, obtain your access credentials using: `gcloud auth application-default login`

Otherwise, you can generate and download Service Account keys in JSON format (refer to https://cloud.google.com/iam/docs/creating-managing-service-account-keys) and provide the location of the file with the following argument:

```console
prowler gcp --credentials-file path
```

> `prowler` will scan the GCP project associated with the credentials.

Prowler will follow the same credentials search as [Google authentication libraries](https://cloud.google.com/docs/authentication/application-default-credentials#search_order):

1. [GOOGLE_APPLICATION_CREDENTIALS environment variable](https://cloud.google.com/docs/authentication/application-default-credentials#GAC)
2. [User credentials set up by using the Google Cloud CLI](https://cloud.google.com/docs/authentication/application-default-credentials#personal)
3. [The attached service account, returned by the metadata server](https://cloud.google.com/docs/authentication/application-default-credentials#attached-sa)

Those credentials must be associated with a user or service account that has the permissions required to run all checks. To ensure this, add the following roles to the member associated with the credentials:

- Viewer
- Security Reviewer
- Stackdriver Account Viewer
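The three-step search order above can be expressed as a small Python function that reports which credential source would be used. This only mirrors the documented order. The function name is hypothetical, and the gcloud path is the default location on Linux and macOS. The metadata-server step is reduced to a boolean flag instead of a network probe. In real code, the `google-auth` library's `google.auth.default()` performs this search and returns the credentials together with the project ID.

```python
from pathlib import Path
from typing import Mapping, Optional


def adc_source(env: Mapping[str, str], home: Path, on_gcp: bool) -> Optional[str]:
    """Report which Application Default Credentials source would be picked.

    Follows the documented search order:
      1. the GOOGLE_APPLICATION_CREDENTIALS environment variable
      2. user credentials written by `gcloud auth application-default login`
      3. the attached service account, via the metadata server
    """
    key_file = env.get("GOOGLE_APPLICATION_CREDENTIALS")
    if key_file:
        return f"env:{key_file}"
    # Default gcloud location on Linux and macOS (Windows uses %APPDATA%\gcloud).
    gcloud_file = home / ".config" / "gcloud" / "application_default_credentials.json"
    if gcloud_file.is_file():
        return f"gcloud:{gcloud_file}"
    # Stand-in for probing the metadata server, which real code does over HTTP.
    if on_gcp:
        return "metadata-server"
    return None


print(adc_source({"GOOGLE_APPLICATION_CREDENTIALS": "/secrets/sa.json"}, Path.home(), on_gcp=False))
# env:/secrets/sa.json
```

The order matters in practice. If `GOOGLE_APPLICATION_CREDENTIALS` is set, it takes precedence over credentials created with `gcloud auth application-default login`.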

docs/tutorials/img/create-slack-app.png (binary, 61 KiB)

docs/tutorials/img/install-in-slack-workspace.png (binary, 67 KiB)

docs/tutorials/img/integrate-slack-app.png (binary, 200 KiB)

docs/tutorials/img/slack-app-token.png (binary, 456 KiB)

docs/tutorials/img/slack-prowler-message.png (binary, 69 KiB)

docs/tutorials/integrations.md (36 changed lines)

@@ -0,0 +1,36 @@
# Integrations

## Slack

Prowler can be integrated with [Slack](https://slack.com/) to send a summary of the execution to a channel where a Slack app has been configured, using the following command:

```sh
prowler <provider> --slack
```

> The Slack integration needs the `SLACK_API_TOKEN` and `SLACK_CHANNEL_ID` environment variables.
### Configuration

To configure the Slack integration, follow these steps:

1. Create a Slack Application:
- Go to the [Slack API page](https://api.slack.com/tutorials/tracks/getting-a-token), scroll down to the *Create app* button and select your workspace:

- Install the application in your selected workspaces:

- Get the *Slack App OAuth Token* that Prowler needs to send the message:

2. Optionally, create a Slack channel (you can also use an existing one).

3. Add the Slack app you created to your Slack channel:
- Click on the channel, go to the Integrations tab, and select Add an App.

4. Set the following environment variables that Prowler will read:
- `SLACK_API_TOKEN`: the *Slack App OAuth Token* obtained in step 1.
- `SLACK_CHANNEL_ID`: the name of the Slack channel where Prowler will send the message.
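Steps 1 to 4 leave Prowler with two environment variables. To illustrate what posting a message involves, the Python sketch below reads those variables and builds a request to the Slack Web API method `chat.postMessage`, using only the standard library. It does not send the request. This is not how Prowler sends its summary, and the implementation of its `send_slack_message` helper is not part of this diff. The helper names, the message text and the placeholder token and channel are assumptions. The token needs the `chat:write` scope for this call.

```python
import json
import urllib.request
from typing import Mapping, Tuple

SLACK_POST_MESSAGE_URL = "https://slack.com/api/chat.postMessage"


def read_slack_settings(env: Mapping[str, str]) -> Tuple[str, str]:
    """Return (token, channel), or exit if either variable is missing or empty."""
    missing = [name for name in ("SLACK_API_TOKEN", "SLACK_CHANNEL_ID") if not env.get(name)]
    if missing:
        raise SystemExit(f"Slack integration needs {' and '.join(missing)} set.")
    return env["SLACK_API_TOKEN"], env["SLACK_CHANNEL_ID"]


def build_slack_request(token: str, channel: str, text: str) -> urllib.request.Request:
    """Build, but do not send, a chat.postMessage request.

    Pass the result to urllib.request.urlopen() to actually post the message.
    """
    body = json.dumps({"channel": channel, "text": text}).encode("utf-8")
    return urllib.request.Request(
        SLACK_POST_MESSAGE_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json; charset=utf-8",
        },
        method="POST",
    )


# In practice: token, channel = read_slack_settings(os.environ)
request = build_slack_request("xoxb-example-token", "C0123456789", "Prowler scan summary")
print(request.full_url, request.get_method())
# https://slack.com/api/chat.postMessage POST
```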

@@ -33,6 +33,7 @@ nav:
- Reporting: tutorials/reporting.md
- Compliance: tutorials/compliance.md
- Quick Inventory: tutorials/quick-inventory.md
- Integrations: tutorials/integrations.md
- Configuration File: tutorials/configuration_file.md
- Logging: tutorials/logging.md
- Allowlist: tutorials/allowlist.md

@@ -42,6 +43,7 @@ nav:
- Assume Role: tutorials/aws/role-assumption.md
- AWS Security Hub: tutorials/aws/securityhub.md
- AWS Organizations: tutorials/aws/organizations.md
- AWS Regions and Partitions: tutorials/aws/regions-and-partitions.md
- Scan Multiple AWS Accounts: tutorials/aws/multiaccount.md
- AWS CloudShell: tutorials/aws/cloudshell.md
- Checks v2 to v3 Mapping: tutorials/aws/v2_to_v3_checks_mapping.md

@@ -51,6 +53,8 @@ nav:
- Azure:
- Authentication: tutorials/azure/authentication.md
- Subscriptions: tutorials/azure/subscriptions.md
- Google Cloud:
- Authentication: tutorials/gcp/authentication.md
- Developer Guide: tutorials/developer-guide.md
- Security: security.md
- Contact Us: contact.md

@@ -6,28 +6,33 @@
"account:Get*",
"appstream:Describe*",
"appstream:List*",
"backup:List*",
"cloudtrail:GetInsightSelectors",
"codeartifact:List*",
"codebuild:BatchGet*",
"ds:Describe*",
"drs:Describe*",
"ds:Get*",
"ds:Describe*",
"ds:List*",
"ec2:GetEbsEncryptionByDefault",
"ecr:Describe*",
"ecr:GetRegistryScanningConfiguration",
"elasticfilesystem:DescribeBackupPolicy",
"glue:GetConnections",
"glue:GetSecurityConfiguration*",
"glue:SearchTables",
"lambda:GetFunction*",
"logs:FilterLogEvents",
"macie2:GetMacieSession",
"s3:GetAccountPublicAccessBlock",
"shield:DescribeProtection",
"shield:GetSubscriptionState",
"securityhub:BatchImportFindings",
"securityhub:GetFindings",
"ssm:GetDocument",
"ssm-incidents:List*",
"support:Describe*",
"tag:GetTagKeys",
"organizations:DescribeOrganization",
"organizations:ListPolicies*",
"organizations:DescribePolicy"
"tag:GetTagKeys"
],
"Resource": "*",
"Effect": "Allow",

@@ -39,7 +44,8 @@
"apigateway:GET"
],
"Resource": [
"arn:aws:apigateway:*::/restapis/*"
"arn:aws:apigateway:*::/restapis/*",
"arn:aws:apigateway:*::/apis/*"
]
}
]
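Most actions in this policy are wildcards such as `ds:Describe*`. IAM compares action names case-insensitively, with `*` matching any sequence of characters and `?` matching a single character. When reviewing a policy change like this one, a small Python sketch built on `fnmatch` can approximate whether an API action is covered and can flag repeated entries. The helper names are hypothetical, and this is not a policy evaluator, because it ignores `Resource`, `Condition` and `Deny` statements.

```python
from fnmatch import fnmatchcase
from typing import Iterable, List


def is_action_allowed(action: str, allowed_actions: Iterable[str]) -> bool:
    """Approximate IAM action matching: case-insensitive, '*' and '?' wildcards.

    fnmatch also treats '[...]' as a character class, which IAM does not;
    that difference does not matter for action names like these.
    """
    action = action.lower()
    return any(fnmatchcase(action, pattern.lower()) for pattern in allowed_actions)


def duplicate_actions(allowed_actions: Iterable[str]) -> List[str]:
    """Return (lowercased) actions that appear more than once."""
    seen, duplicates = set(), []
    for entry in allowed_actions:
        key = entry.lower()
        if key in seen and key not in duplicates:
            duplicates.append(key)
        seen.add(key)
    return duplicates


policy_actions = ["ds:Describe*", "ds:Get*", "ds:List*", "tag:GetTagKeys", "ds:Describe*"]
print(is_action_allowed("ds:DescribeDirectories", policy_actions))  # True
print(is_action_allowed("ds:DeleteDirectory", policy_actions))      # False
print(duplicate_actions(policy_actions))                            # ['ds:describe*']
```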

poetry.lock (586 changed lines, generated)

@@ -1,6 +1,7 @@
#!/usr/bin/env python3
# -*- coding: utf-8 -*-

import os
import sys

from prowler.lib.banner import print_banner

@@ -29,6 +30,7 @@ from prowler.lib.outputs.compliance import display_compliance_table
from prowler.lib.outputs.html import add_html_footer, fill_html_overview_statistics
from prowler.lib.outputs.json import close_json
from prowler.lib.outputs.outputs import extract_findings_statistics, send_to_s3_bucket
from prowler.lib.outputs.slack import send_slack_message
from prowler.lib.outputs.summary_table import display_summary_table
from prowler.providers.aws.lib.security_hub.security_hub import (
    resolve_security_hub_previous_findings,

@@ -169,6 +171,21 @@ def prowler():
    # Extract findings stats
    stats = extract_findings_statistics(findings)

    if args.slack:
        if "SLACK_API_TOKEN" in os.environ and "SLACK_CHANNEL_ID" in os.environ:
            _ = send_slack_message(
                os.environ["SLACK_API_TOKEN"],
                os.environ["SLACK_CHANNEL_ID"],
                stats,
                provider,
                audit_info,
            )
        else:
            logger.critical(
                "Slack integration needs SLACK_API_TOKEN and SLACK_CHANNEL_ID environment variables (see more in https://docs.prowler.cloud/en/latest/tutorials/integrations/#slack)."
            )
            sys.exit(1)

    if args.output_modes:
        for mode in args.output_modes:
            # Close json file if exists

@@ -1,6 +1,7 @@
### Account, Check and/or Region can be * to apply for all the cases
### Resources is a list that can have either Regex or Keywords
### Tags is an optional list containing tuples of 'key=value'
### Account, Check and/or Region can be * to apply for all the cases.
### Resources and tags are lists that can have either Regex or Keywords.
### Tags is an optional list that matches on tuples of 'key=value' and are "ANDed" together.
### Use an alternation Regex to match one of multiple tags with "ORed" logic.
########################### ALLOWLIST EXAMPLE ###########################
Allowlist:
Accounts:

@@ -12,14 +13,19 @@ Allowlist:
Resources:
- "user-1" # Will ignore user-1 in check iam_user_hardware_mfa_enabled
- "user-2" # Will ignore user-2 in check iam_user_hardware_mfa_enabled
"ec2_*":
Regions:
- "*"
Resources:
- "*" # Will ignore every EC2 check in every account and region
"*":
Regions:
- "*"
Resources:
- "test" # Will ignore every resource containing the string "test" and the tags 'test=test' and 'project=test' in account 123456789012 and every region
- "test"
Tags:
- "test=test" # Will ignore every resource containing the string "test" and the tags 'test=test' and 'project=test' in account 123456789012 and every region
- "project=test"
- "test=test" # Will ignore every resource containing the string "test" and the tags 'test=test' and
- "project=test|project=stage" # either of ('project=test' OR project=stage) in account 123456789012 and every region

"*":
Checks:

@@ -10,9 +10,13 @@ from prowler.lib.logger import logger

timestamp = datetime.today()
timestamp_utc = datetime.now(timezone.utc).replace(tzinfo=timezone.utc)
prowler_version = "3.4.0"
prowler_version = "3.5.2"
html_logo_url = "https://github.com/prowler-cloud/prowler/"
html_logo_img = "https://user-images.githubusercontent.com/3985464/113734260-7ba06900-96fb-11eb-82bc-d4f68a1e2710.png"
square_logo_img = "https://user-images.githubusercontent.com/38561120/235905862-9ece5bd7-9aa3-4e48-807a-3a9035eb8bfb.png"
aws_logo = "https://user-images.githubusercontent.com/38561120/235953920-3e3fba08-0795-41dc-b480-9bea57db9f2e.png"
azure_logo = "https://user-images.githubusercontent.com/38561120/235927375-b23e2e0f-8932-49ec-b59c-d89f61c8041d.png"
gcp_logo = "https://user-images.githubusercontent.com/38561120/235928332-eb4accdc-c226-4391-8e97-6ca86a91cf50.png"

orange_color = "\033[38;5;208m"
banner_color = "\033[1;92m"

@@ -154,6 +154,11 @@ Detailed documentation at https://docs.prowler.cloud
        common_outputs_parser.add_argument(
            "-b", "--no-banner", action="store_true", help="Hide Prowler banner"
        )
        common_outputs_parser.add_argument(
            "--slack",
            action="store_true",
            help="Send a summary of the execution with a Slack APP in your channel. Environment variables SLACK_API_TOKEN and SLACK_CHANNEL_ID are required (see more in https://docs.prowler.cloud/en/latest/tutorials/integrations/#slack).",
        )

    def __init_logging_parser__(self):
        # Logging Options
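The `--slack` switch and the environment-variable check in `prowler()` together decide whether a summary is posted or the run exits with status 1. A minimal sketch of that gating logic, assuming a standalone parser; `build_parser` and `slack_credentials` are hypothetical helpers, not Prowler functions:

```python
import argparse
import os
from typing import Mapping, Optional, Tuple


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical parser with only the boolean --slack switch.
    parser = argparse.ArgumentParser(prog="prowler-sketch")
    parser.add_argument("--slack", action="store_true")
    return parser


def slack_credentials(
    args: argparse.Namespace, environ: Mapping[str, str] = os.environ
) -> Optional[Tuple[str, str]]:
    """Return (token, channel) when --slack is set and both variables exist.

    Returns None when --slack is not set. Raises SystemExit(1) when the flag is
    set but a variable is missing, mirroring the sys.exit(1) branch above.
    """
    if not args.slack:
        return None
    if "SLACK_API_TOKEN" in environ and "SLACK_CHANNEL_ID" in environ:
        return environ["SLACK_API_TOKEN"], environ["SLACK_CHANNEL_ID"]
    raise SystemExit(1)
```

Passing `environ` explicitly keeps the check testable without touching the real process environment.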

@@ -42,8 +42,6 @@ def generate_provider_output_csv(
set_provider_output_options configures automatically the outputs based on the selected provider and returns the Provider_Output_Options object.
"""
try:
finding_output_model = f"{provider.capitalize()}_Check_Output_{mode.upper()}"
output_model = getattr(importlib.import_module(__name__), finding_output_model)
# Dynamically load the Provider_Output_Options class
finding_output_model = f"{provider.capitalize()}_Check_Output_{mode.upper()}"
output_model = getattr(importlib.import_module(__name__), finding_output_model)

135
prowler/lib/outputs/slack.py
Normal file
@@ -0,0 +1,135 @@
import sys

from slack_sdk import WebClient

from prowler.config.config import aws_logo, azure_logo, gcp_logo, square_logo_img
from prowler.lib.logger import logger


def send_slack_message(token, channel, stats, provider, audit_info):
    try:
        client = WebClient(token=token)
        identity, logo = create_message_identity(provider, audit_info)
        response = client.chat_postMessage(
            username="Prowler",
            icon_url=square_logo_img,
            channel="#" + channel,
            blocks=create_message_blocks(identity, logo, stats),
        )
        return response
    except Exception as error:
        logger.error(
            f"{error.__class__.__name__}[{error.__traceback__.tb_lineno}]: {error}"
        )


def create_message_identity(provider, audit_info):
    try:
        identity = ""
        logo = aws_logo
        if provider == "aws":
            identity = f"AWS Account *{audit_info.audited_account}*"
        elif provider == "gcp":
            identity = f"GCP Project *{audit_info.project_id}*"
            logo = gcp_logo
        elif provider == "azure":
            printed_subscriptions = []
            for key, value in audit_info.identity.subscriptions.items():
                intermediate = "- *" + key + ": " + value + "*\n"
                printed_subscriptions.append(intermediate)
            identity = f"Azure Subscriptions:\n{''.join(printed_subscriptions)}"
            logo = azure_logo
        return identity, logo
    except Exception as error:
        logger.error(
            f"{error.__class__.__name__}[{error.__traceback__.tb_lineno}]: {error}"
        )


def create_message_blocks(identity, logo, stats):
    try:
        blocks = [
            {
                "type": "section",
                "text": {
                    "type": "mrkdwn",
                    "text": f"Hey there 👋 \n I'm *Prowler*, _the handy cloud security tool_ :cloud::key:\n\n I have just finished the security assessment on your {identity} with a total of *{stats['findings_count']}* findings.",
                },
                "accessory": {
                    "type": "image",
                    "image_url": logo,
                    "alt_text": "Provider Logo",
                },
            },
            {"type": "divider"},
            {
                "type": "section",
                "text": {
                    "type": "mrkdwn",
                    "text": f"\n:white_check_mark: *{stats['total_pass']} Passed findings* ({round(stats['total_pass']/stats['findings_count']*100,2)}%)\n",
                },
            },
            {
                "type": "section",
                "text": {
                    "type": "mrkdwn",
                    "text": f"\n:x: *{stats['total_fail']} Failed findings* ({round(stats['total_fail']/stats['findings_count']*100,2)}%)\n ",
                },
            },
            {
                "type": "section",
                "text": {
                    "type": "mrkdwn",
                    "text": f"\n:bar_chart: *{stats['resources_count']} Scanned Resources*\n",
                },
            },
            {"type": "divider"},
            {
                "type": "context",
                "elements": [
                    {
                        "type": "mrkdwn",
                        "text": f"Used parameters: `prowler {' '.join(sys.argv[1:])} `",
                    }
                ],
            },
            {"type": "divider"},
            {
                "type": "section",
                "text": {"type": "mrkdwn", "text": "Join our Slack Community!"},
                "accessory": {
                    "type": "button",
                    "text": {"type": "plain_text", "text": "Prowler :slack:"},
                    "url": "https://join.slack.com/t/prowler-workspace/shared_invite/zt-1hix76xsl-2uq222JIXrC7Q8It~9ZNog",
                },
            },
            {
                "type": "section",
                "text": {
                    "type": "mrkdwn",
                    "text": "Feel free to contact us in our repo",
                },
                "accessory": {
                    "type": "button",
                    "text": {"type": "plain_text", "text": "Prowler :github:"},
                    "url": "https://github.com/prowler-cloud/prowler",
                },
            },
            {
                "type": "section",
                "text": {
                    "type": "mrkdwn",
                    "text": "See all the things you can do with ProwlerPro",
                },
                "accessory": {
                    "type": "button",
                    "text": {"type": "plain_text", "text": "Prowler Pro"},
                    "url": "https://prowler.pro",
                },
            },
        ]
        return blocks
    except Exception as error:
        logger.error(
            f"{error.__class__.__name__}[{error.__traceback__.tb_lineno}]: {error}"
        )
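In `create_message_blocks`, the pass and fail percentages are computed as `round(count / stats['findings_count'] * 100, 2)`. If a run produces zero findings, that division raises `ZeroDivisionError`, the `except` branch logs it, and the function returns `None` instead of a list of blocks. A hedged sketch of a guarded variant; `percentage` and `summary_section` are illustrative names, and returning 0.0 for an empty run is an assumed choice rather than Prowler's behaviour:

```python
def percentage(part: int, total: int) -> float:
    """Share of `part` in `total` as a percentage rounded to two decimals.

    Same formula as the Slack blocks above, but returns 0.0 for an empty run
    instead of raising ZeroDivisionError.
    """
    if total == 0:
        return 0.0
    return round(part / total * 100, 2)


def summary_section(emoji: str, label: str, count: int, total: int) -> dict:
    """Build one Block Kit "section" block shaped like the pass/fail blocks above."""
    return {
        "type": "section",
        "text": {
            "type": "mrkdwn",
            "text": f"\n{emoji} *{count} {label}* ({percentage(count, total)}%)\n",
        },
    }


if __name__ == "__main__":
    stats = {"findings_count": 0, "total_pass": 0, "total_fail": 0}
    # With zero findings this prints a 0.0% section instead of failing.
    print(summary_section(":x:", "Failed findings", stats["total_fail"], stats["findings_count"]))
```

Keeping the arithmetic in one helper means both the passed and failed sections share the same guard.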

@@ -36,7 +36,7 @@ class AWS_Provider:
secret_key=audit_info.credentials.aws_secret_access_key,
token=audit_info.credentials.aws_session_token,
expiry_time=audit_info.credentials.expiration,
refresh_using=self.refresh,
refresh_using=self.refresh_credentials,
method="sts-assume-role",
)
# Here we need the botocore session since it needs to use refreshable credentials

@@ -60,7 +60,7 @@ class AWS_Provider:
# Refresh credentials method using assume role
# This method is called "adding ()" to the name, so it cannot accept arguments
# https://github.com/boto/botocore/blob/098cc255f81a25b852e1ecdeb7adebd94c7b1b73/botocore/credentials.py#L570
def refresh(self):
def refresh_credentials(self):
logger.info("Refreshing assumed credentials...")

response = assume_role(self.aws_session, self.role_info)
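botocore's `RefreshableCredentials` stores the `refresh_using` callable and later invokes it with no arguments, which is why the comment above says the refresh method cannot accept arguments. The toy class below is a simplified stand-in written only for illustration, not botocore's implementation: it refreshes once the expiry time has passed, whereas botocore refreshes ahead of expiry. `SimpleRefreshableCredentials` and `FakeProvider` are hypothetical, and `FakeProvider.refresh_credentials` returns dummy values instead of calling STS:

```python
from datetime import datetime, timedelta, timezone
from typing import Callable, Dict


class SimpleRefreshableCredentials:
    """Illustrative stand-in for botocore's RefreshableCredentials (not the real class)."""

    def __init__(self, metadata: Dict[str, str], refresh_using: Callable[[], Dict[str, str]]):
        # The callback is stored as-is and later called with no arguments,
        # so a bound method such as provider.refresh_credentials fits this slot.
        self._refresh_using = refresh_using
        self._apply(metadata)

    def _apply(self, metadata: Dict[str, str]) -> None:
        # Key names mirror the keyword arguments visible in the diff above.
        self.access_key = metadata["access_key"]
        self.secret_key = metadata["secret_key"]
        self.token = metadata["token"]
        self.expiry_time = datetime.fromisoformat(metadata["expiry_time"])

    def get_frozen(self):
        # Simplification: refresh only after expiry (botocore refreshes earlier).
        if datetime.now(timezone.utc) >= self.expiry_time:
            self._apply(self._refresh_using())
        return self.access_key, self.secret_key, self.token


class FakeProvider:
    """Hypothetical provider; a real one would call STS AssumeRole here."""

    def __init__(self) -> None:
        self.calls = 0

    def refresh_credentials(self) -> Dict[str, str]:
        self.calls += 1
        expiry = datetime.now(timezone.utc) + timedelta(hours=1)
        return {
            "access_key": f"AKIA-FAKE-{self.calls}",
            "secret_key": "fake-secret",
            "token": "fake-token",
            "expiry_time": expiry.isoformat(),
        }
```

Renaming the method from `refresh` to `refresh_credentials` does not change this contract; only the zero-argument signature matters to the caller.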

@@ -736,12 +736,16 @@
"regions": {
"aws": [
"ap-northeast-1",
"ap-northeast-2",
"ap-south-1",
"ap-southeast-1",
"ap-southeast-2",
"eu-central-1",
"eu-north-1",
"eu-west-1",
"eu-west-2",
"eu-west-3",
"sa-east-1",
"us-east-1",
"us-east-2",
"us-west-2"

@@ -778,6 +782,7 @@
"sa-east-1",
"us-east-1",
"us-east-2",
"us-west-1",
"us-west-2"
],
"aws-cn": [],

@@ -980,6 +985,47 @@
]
}
},
"awshealthdashboard": {
"regions": {
"aws": [
"af-south-1",
"ap-east-1",
"ap-northeast-1",
"ap-northeast-2",
"ap-northeast-3",
"ap-south-1",
"ap-south-2",
"ap-southeast-1",
"ap-southeast-2",
"ap-southeast-3",
"ap-southeast-4",
"ca-central-1",
"eu-central-1",
"eu-central-2",
"eu-north-1",
"eu-south-1",
"eu-south-2",
"eu-west-1",
"eu-west-2",
"eu-west-3",
"me-central-1",
"me-south-1",
"sa-east-1",
"us-east-1",
"us-east-2",
"us-west-1",
"us-west-2"
],
"aws-cn": [
"cn-north-1",
"cn-northwest-1"
],
"aws-us-gov": [
"us-gov-east-1",
"us-gov-west-1"
]
}
},
"backup": {
"regions": {
"aws": [

@@ -1937,6 +1983,7 @@
"cn-northwest-1"
],
"aws-us-gov": [
"us-gov-east-1",
"us-gov-west-1"
]
}

@@ -3421,6 +3468,7 @@
"emr-serverless": {
"regions": {
"aws": [
"ap-east-1",
"ap-northeast-1",
"ap-northeast-2",
"ap-south-1",

@@ -3432,13 +3480,17 @@
"eu-west-1",
"eu-west-2",
"eu-west-3",
"me-south-1",
"sa-east-1",
"us-east-1",
"us-east-2",
"us-west-1",
"us-west-2"
],
"aws-cn": [],
"aws-cn": [
"cn-north-1",
"cn-northwest-1"
],
"aws-us-gov": []
}
},

@@ -3949,6 +4001,7 @@
"ap-south-2",
"ap-southeast-1",
"ap-southeast-2",
"ap-southeast-3",
"ca-central-1",
"eu-central-1",
"eu-central-2",

@@ -3963,6 +4016,7 @@
"sa-east-1",
"us-east-1",
"us-east-2",
"us-west-1",
"us-west-2"
],
"aws-cn": [],

@@ -4925,6 +4979,21 @@
"aws-us-gov": []
}
},
"ivs-realtime": {
"regions": {
"aws": [
"ap-northeast-1",
"ap-northeast-2",
"ap-south-1",
"eu-central-1",
"eu-west-1",
"us-east-1",
"us-west-2"
],
"aws-cn": [],
"aws-us-gov": []
}
},
"ivschat": {
"regions": {
"aws": [

@@ -5094,6 +5163,7 @@
"ap-southeast-1",
"ap-southeast-2",
"ap-southeast-3",
"ap-southeast-4",
"ca-central-1",
"eu-central-1",
"eu-central-2",

@@ -5279,6 +5349,7 @@
"ap-south-1",
"ap-southeast-1",
"ap-southeast-2",
"ap-southeast-3",
"ca-central-1",
"eu-central-1",
"eu-north-1",

@@ -5293,7 +5364,10 @@
"us-west-1",
"us-west-2"
],
"aws-cn": [],
"aws-cn": [
"cn-north-1",
"cn-northwest-1"
],
"aws-us-gov": [
"us-gov-east-1",
"us-gov-west-1"

@@ -5423,7 +5497,10 @@
"us-west-1",
"us-west-2"
],
"aws-cn": [],
"aws-cn": [
"cn-north-1",
"cn-northwest-1"
],
"aws-us-gov": []
}
},

@@ -6195,13 +6272,17 @@
"ap-northeast-2",
"ap-northeast-3",
"ap-south-1",
"ap-south-2",
"ap-southeast-1",
"ap-southeast-2",
"ap-southeast-3",
"ap-southeast-4",
"ca-central-1",
"eu-central-1",
"eu-central-2",
"eu-north-1",
"eu-south-1",
"eu-south-2",
"eu-west-1",
"eu-west-2",
"eu-west-3",

@@ -6251,7 +6332,10 @@
"us-east-2",
"us-west-2"
],
"aws-cn": [],
"aws-cn": [
"cn-north-1",
"cn-northwest-1"
],
"aws-us-gov": []
}
},

@@ -6592,6 +6676,24 @@
]
}
},
"osis": {
"regions": {
"aws": [
"ap-northeast-1",
"ap-southeast-1",
"ap-southeast-2",
"eu-central-1",
"eu-west-1",
"eu-west-2",
"us-east-1",
"us-east-2",
"us-west-1",
"us-west-2"
],
"aws-cn": [],
"aws-us-gov": []
}
},
"outposts": {
"regions": {
"aws": [

@@ -6660,47 +6762,6 @@
"aws-us-gov": []
}
},
"phd": {
"regions": {
"aws": [
"af-south-1",
"ap-east-1",
"ap-northeast-1",
"ap-northeast-2",
"ap-northeast-3",
"ap-south-1",
"ap-south-2",
"ap-southeast-1",
"ap-southeast-2",
"ap-southeast-3",
"ap-southeast-4",
"ca-central-1",
"eu-central-1",
"eu-central-2",
"eu-north-1",
"eu-south-1",
"eu-south-2",
"eu-west-1",
"eu-west-2",
"eu-west-3",
"me-central-1",
"me-south-1",
"sa-east-1",
"us-east-1",
"us-east-2",
"us-west-1",
"us-west-2"
],
"aws-cn": [
"cn-north-1",
"cn-northwest-1"
],
"aws-us-gov": [
"us-gov-east-1",
"us-gov-west-1"
]
}
},
"pi": {
"regions": {
"aws": [

@@ -7562,6 +7623,7 @@
"ap-southeast-1",
"ap-southeast-2",
"ap-southeast-3",
"ap-southeast-4",
"ca-central-1",
"eu-central-1",
"eu-central-2",

@@ -7802,6 +7864,7 @@
"ap-northeast-2",
"ap-northeast-3",
"ap-south-1",
"ap-south-2",
"ap-southeast-1",
"ap-southeast-2",
"ap-southeast-3",

@@ -7881,14 +7944,21 @@
"ap-northeast-2",
"ap-northeast-3",
"ap-south-1",
"ap-south-2",
"ap-southeast-1",
"ap-southeast-2",
"ap-southeast-3",
"ap-southeast-4",
"ca-central-1",
"eu-central-1",
"eu-central-2",
"eu-north-1",
"eu-south-1",
"eu-south-2",
"eu-west-1",
"eu-west-2",
"eu-west-3",
"me-central-1",
"me-south-1",
"sa-east-1",
"us-east-1",

@@ -7897,7 +7967,10 @@
"us-west-2"
],
"aws-cn": [],
"aws-us-gov": []
"aws-us-gov": [
"us-gov-east-1",
"us-gov-west-1"
]
}
},
"scheduler": {

@@ -8876,30 +8949,6 @@
]
}
},
"sumerian": {
"regions": {
"aws": [
"ap-northeast-1",
"ap-northeast-2",
"ap-south-1",
"ap-southeast-1",
"ap-southeast-2",
"ca-central-1",
"eu-central-1",
"eu-north-1",
"eu-west-1",
"eu-west-2",
"eu-west-3",
"sa-east-1",
"us-east-1",
"us-east-2",
"us-west-1",
"us-west-2"
],
"aws-cn": [],
"aws-us-gov": []
}
},
"support": {
"regions": {
"aws": [

@@ -9269,6 +9318,24 @@
]
}
},
"verified-access": {
"regions": {
"aws": [
"ap-southeast-2",
"ca-central-1",
"eu-central-1",
"eu-west-1",
"eu-west-2",
"sa-east-1",
"us-east-1",
"us-east-2",
"us-west-1",
"us-west-2"
],
"aws-cn": [],
"aws-us-gov": []
}
},
"vmwarecloudonaws": {
"regions": {
"aws": [

@@ -9358,6 +9425,21 @@
]
}
},
"vpc-lattice": {
"regions": {
"aws": [
"ap-northeast-1",
"ap-southeast-1",
"ap-southeast-2",
"eu-west-1",
"us-east-1",
"us-east-2",
"us-west-2"
],
"aws-cn": [],
"aws-us-gov": []
}
},
"vpn": {
"regions": {
"aws": [

@@ -9643,6 +9725,7 @@
"cn-northwest-1"
],
"aws-us-gov": [
"us-gov-east-1",
"us-gov-west-1"
]
}
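Each entry above maps a service to a `regions` object keyed by partition (`aws`, `aws-cn`, `aws-us-gov`), and an empty list means no regions are listed for that partition. A small lookup sketch, assuming `services` is the already-loaded object that holds these service entries (the enclosing key and the file-loading code are outside this excerpt); `regions_for` and `SAMPLE_SERVICES` are illustrative names, and the sample reproduces only part of the `vpc-lattice` entry:

```python
import json
from typing import Any, Dict, List

# Partial excerpt in the shape shown in the diff: service -> "regions" -> partition -> list.
SAMPLE_SERVICES: Dict[str, Any] = json.loads(
    """
    {
      "vpc-lattice": {
        "regions": {
          "aws": ["ap-northeast-1", "eu-west-1", "us-east-1"],
          "aws-cn": [],
          "aws-us-gov": []
        }
      }
    }
    """
)


def regions_for(services: Dict[str, Any], service: str, partition: str = "aws") -> List[str]:
    """Return the regions listed for `service` in `partition`, or [] if either is absent."""
    return services.get(service, {}).get("regions", {}).get(partition, [])


if __name__ == "__main__":
    print(regions_for(SAMPLE_SERVICES, "vpc-lattice"))
    print(regions_for(SAMPLE_SERVICES, "vpc-lattice", "aws-cn"))
```

Returning `[]` for a missing service or partition treats "not listed" the same as "listed as empty", so a service dropped from the file simply yields no regions.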

@@ -130,19 +130,33 @@ def is_allowlisted(allowlist, audited_account, check, region, resource, tags):

def is_allowlisted_in_check(allowlist, audited_account, check, region, resource, tags):
try:
# If there is a *, it affects to all checks
if "*" in allowlist["Accounts"][audited_account]["Checks"]:
check = "*"
if is_allowlisted_in_region(
allowlist, audited_account, check, region, resource, tags
):
return True
# Check if there is the specific check
if check in allowlist["Accounts"][audited_account]["Checks"]:
if is_allowlisted_in_region(
allowlist, audited_account, check, region, resource, tags
):
return True
for allowlisted_check in allowlist["Accounts"][audited_account][
"Checks"
].keys():
# If there is a *, it affects to all checks
if "*" == allowlisted_check:
check = "*"
if is_allowlisted_in_region(
allowlist, audited_account, check, region, resource, tags
):
return True
# Check if there is the specific check
elif check == allowlisted_check:
if is_allowlisted_in_region(
allowlist, audited_account, check, region, resource, tags
):
return True
# Check if check is a regex
elif re.search(allowlisted_check, check):
if is_allowlisted_in_region(
allowlist,
audited_account,
allowlisted_check,
region,
resource,
tags,
):
return True
return False
except Exception as error:
logger.critical(

@@ -192,13 +206,21 @@ def is_allowlisted_in_tags(check_allowlist, elem, resource, tags):
# Check if there are allowlisted tags
if "Tags" in check_allowlist:
# Check if there are resource tags
if tags:
tags_in_resource_tags = True
for tag in check_allowlist["Tags"]:
if tag not in tags:
tags_in_resource_tags = False
if tags_in_resource_tags and re.search(elem, resource):
return True
if not tags or not re.search(elem, resource):
return False

all_allowed_tags_in_resource_tags = True
for allowed_tag in check_allowlist["Tags"]:
found_allowed_tag = False
for resource_tag in tags:
if re.search(allowed_tag, resource_tag):
found_allowed_tag = True
break

if not found_allowed_tag:
all_allowed_tags_in_resource_tags = False

return all_allowed_tags_in_resource_tags
else:
if re.search(elem, resource):
return True
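The new allowlist logic matches a check by wildcard, exact name, or regex, and then requires the resource pattern plus every entry under `Tags` to match (AND across entries), while a regex alternation inside one entry gives OR. A condensed sketch of those rules, assuming resource tags arrive as a list of `"key=value"` strings; `check_matches` and `resource_allowlisted` are hypothetical names, and treating an empty tag list like a missing `Tags` key is a simplification:

```python
import re
from typing import Iterable, Sequence


def check_matches(allowlisted_check: str, check: str) -> bool:
    # Same order as the branches above: "*" wildcard, exact name, then regex search.
    if allowlisted_check == "*" or allowlisted_check == check:
        return True
    return re.search(allowlisted_check, check) is not None


def resource_allowlisted(
    resource_pattern: str,
    resource: str,
    allowed_tags: Iterable[str],
    resource_tags: Sequence[str],
) -> bool:
    allowed_tags = list(allowed_tags)
    if not allowed_tags:
        # No tag constraints: only the resource pattern has to match.
        return re.search(resource_pattern, resource) is not None
    if not resource_tags or not re.search(resource_pattern, resource):
        return False
    # AND across entries: each pattern must match at least one "key=value" tag.
    # OR within an entry comes from alternation, e.g. "project=test|project=stage".
    return all(
        any(re.search(pattern, tag) for tag in resource_tags)
        for pattern in allowed_tags
    )
```

Because every pattern goes through `re.search`, `"test"` also matches `"latest-build"`, and `"ec2_*"` is read as a regex (`ec2` followed by zero or more underscores), so it matches any check ID containing `ec2`. Anchor a pattern with `^...$` when an exact match is intended.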

@@ -1,50 +1,48 @@
import re

from arnparse import arnparse

from prowler.providers.aws.lib.arn.error import (
    RoleArnParsingEmptyResource,
    RoleArnParsingFailedMissingFields,
    RoleArnParsingIAMRegionNotEmpty,
    RoleArnParsingInvalidAccountID,
    RoleArnParsingInvalidResourceType,
    RoleArnParsingPartitionEmpty,
    RoleArnParsingServiceNotIAM,
    RoleArnParsingServiceNotIAMnorSTS,
)
from prowler.providers.aws.lib.arn.models import ARN


def arn_parsing(arn):
    # check for number of fields, must be six
    if len(arn.split(":")) != 6:
        raise RoleArnParsingFailedMissingFields
def parse_iam_credentials_arn(arn: str) -> ARN:
    arn_parsed = ARN(arn)
    # First check if region is empty (in IAM ARN's region is always empty)
    if arn_parsed.region: