Compare commits

1 Commits

| Author | SHA1 | Date |
|---|---|---|
| | bffe2a2c63 | |

2

.github/CODEOWNERS

vendored

@@ -1 +1 @@

* @prowler-cloud/prowler-oss
* @prowler-cloud/prowler-team

52

.github/ISSUE_TEMPLATE/bug_report.md

vendored

Normal file

@@ -0,0 +1,52 @@

---
name: Bug report
about: Create a report to help us improve
title: "[Bug]: "
labels: bug, status/needs-triage
assignees: ''

---

<!--
Please use this template to create your bug report. By providing as much info as possible you help us understand the issue, reproduce it and resolve it for you quicker. Therefore, take a couple of extra minutes to make sure you have provided all info needed.

PROTIP: record your screen and attach it as a gif to showcase the issue.

- How to record and attach gif: https://bit.ly/2Mi8T6K
-->

**What happened?**
A clear and concise description of what the bug is or what is not working as expected


**How to reproduce it**
Steps to reproduce the behavior:
1. What command are you running?
2. Cloud provider you are launching
3. Environment you have like single account, multi-account, organizations, multi or single subsctiption, etc.
4. See error


**Expected behavior**
A clear and concise description of what you expected to happen.


**Screenshots or Logs**
If applicable, add screenshots to help explain your problem.
Also, you can add logs (anonymize them first!). Here a command that may help to share a log
`prowler <your arguments> --log-level DEBUG --log-file $(date +%F)_debug.log` then attach here the log file.


**From where are you running Prowler?**
Please, complete the following information:
- Resource: (e.g. EC2 instance, Fargate task, Docker container manually, EKS, Cloud9, CodeBuild, workstation, etc.)
- OS: [e.g. Amazon Linux 2, Mac, Alpine, Windows, etc. ]
- Prowler Version [`prowler --version`]:
- Python version [`python --version`]:
- Pip version [`pip --version`]:
- Installation method (Are you running it from pip package or cloning the github repo?):
- Others:


**Additional context**
Add any other context about the problem here.
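The log command in the template above relies on the shell expanding `$(date +%F)` into an ISO date. As a minimal sketch, assuming the same flags shown in the template, the equivalent command line could be assembled in Python like this. The helper name `debug_log_command` and the example date are hypothetical and are not part of Prowler.

```python
from datetime import date


def debug_log_command(arguments, today=None):
    """Build the debug invocation from the template as an argv list.

    `date +%F` prints YYYY-MM-DD, which matches date.isoformat().
    """
    today = today or date.today()
    log_file = f"{today.isoformat()}_debug.log"
    return ["prowler", *arguments, "--log-level", "DEBUG", "--log-file", log_file]


if __name__ == "__main__":
    # "aws" stands in for <your arguments>; the date is fixed only for the demo.
    print(" ".join(debug_log_command(["aws"], today=date(2023, 3, 1))))
```

Passing the date explicitly keeps the helper deterministic, which is convenient when the resulting file name has to be referenced later in the same report.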

97

.github/ISSUE_TEMPLATE/bug_report.yml

vendored

@@ -1,97 +0,0 @@

name: 🐞 Bug Report
description: Create a report to help us improve
title: "[Bug]: "
labels: ["bug", "status/needs-triage"]

body:
- type: textarea
id: reproduce
attributes:
label: Steps to Reproduce
description: Steps to reproduce the behavior
placeholder: |-
1. What command are you running?
2. Cloud provider you are launching
3. Environment you have, like single account, multi-account, organizations, multi or single subscription, etc.
4. See error
validations:
required: true
- type: textarea
id: expected
attributes:
label: Expected behavior
description: A clear and concise description of what you expected to happen.
validations:
required: true
- type: textarea
id: actual
attributes:
label: Actual Result with Screenshots or Logs
description: If applicable, add screenshots to help explain your problem. Also, you can add logs (anonymize them first!). Here a command that may help to share a log `prowler <your arguments> --log-level DEBUG --log-file $(date +%F)_debug.log` then attach here the log file.
validations:
required: true
- type: dropdown
id: type
attributes:
label: How did you install Prowler?
options:
- Cloning the repository from github.com (git clone)
- From pip package (pip install prowler)
- From brew (brew install prowler)
- Docker (docker pull toniblyx/prowler)
validations:
required: true
- type: textarea
id: environment
attributes:
label: Environment Resource
description: From where are you running Prowler?
placeholder: |-
1. EC2 instance
2. Fargate task
3. Docker container locally
4. EKS
5. Cloud9
6. CodeBuild
7. Workstation
8. Other(please specify)
validations:
required: true
- type: textarea
id: os
attributes:
label: OS used
description: Which OS are you using?
placeholder: |-
1. Amazon Linux 2
2. MacOS
3. Alpine Linux
4. Windows
5. Other(please specify)
validations:
required: true
- type: input
id: prowler-version
attributes:
label: Prowler version
description: Which Prowler version are you using?
placeholder: |-
prowler --version
validations:
required: true
- type: input
id: pip-version
attributes:
label: Pip version
description: Which pip version are you using?
placeholder: |-
pip --version
validations:
required: true
- type: textarea
id: additional
attributes:
description: Additional context
label: Context
validations:
required: false

36

.github/ISSUE_TEMPLATE/feature-request.yml

vendored

@@ -1,36 +0,0 @@

name: 💡 Feature Request
description: Suggest an idea for this project
labels: ["enhancement", "status/needs-triage"]


body:
- type: textarea
id: Problem
attributes:
label: New feature motivation
description: Is your feature request related to a problem? Please describe
placeholder: |-
1. A clear and concise description of what the problem is. Ex. I'm always frustrated when
validations:
required: true
- type: textarea
id: Solution
attributes:
label: Solution Proposed
description: A clear and concise description of what you want to happen.
validations:
required: true
- type: textarea
id: Alternatives
attributes:
label: Describe alternatives you've considered
description: A clear and concise description of any alternative solutions or features you've considered.
validations:
required: true
- type: textarea
id: Context
attributes:
label: Additional context
description: Add any other context or screenshots about the feature request here.
validations:
required: false

20

.github/ISSUE_TEMPLATE/feature_request.md

vendored

Normal file

@@ -0,0 +1,20 @@

---
name: Feature request
about: Suggest an idea for this project
title: ''
labels: enhancement, status/needs-triage
assignees: ''

---

**Is your feature request related to a problem? Please describe.**
A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

**Describe the solution you'd like**
A clear and concise description of what you want to happen.

**Describe alternatives you've considered**
A clear and concise description of any alternative solutions or features you've considered.

**Additional context**
Add any other context or screenshots about the feature request here.

2

.github/dependabot.yml

vendored

@@ -8,7 +8,7 @@ updates:

- package-ecosystem: "pip" # See documentation for possible values
directory: "/" # Location of package manifests
schedule:
interval: "weekly"
interval: "daily"
target-branch: master
labels:
- "dependencies"

178

.github/workflows/build-lint-push-containers.yml

vendored

@@ -15,17 +15,36 @@ on:

env:
AWS_REGION_STG: eu-west-1
AWS_REGION_PLATFORM: eu-west-1
AWS_REGION: us-east-1
AWS_REGION_PRO: us-east-1
IMAGE_NAME: prowler
LATEST_TAG: latest
STABLE_TAG: stable
TEMPORARY_TAG: temporary
DOCKERFILE_PATH: ./Dockerfile
PYTHON_VERSION: 3.9

jobs:
# Lint Dockerfile using Hadolint
# dockerfile-linter:
# runs-on: ubuntu-latest
# steps:
# -
# name: Checkout
# uses: actions/checkout@v3
# -
# name: Install Hadolint
# run: |
# VERSION=$(curl --silent "https://api.github.com/repos/hadolint/hadolint/releases/latest" | \
# grep '"tag_name":' | \
# sed -E 's/.*"v([^"]+)".*/\1/' \
# ) && curl -L -o /tmp/hadolint https://github.com/hadolint/hadolint/releases/download/v${VERSION}/hadolint-Linux-x86_64 \
# && chmod +x /tmp/hadolint
# -
# name: Run Hadolint
# run: |
# /tmp/hadolint util/Dockerfile

# Build Prowler OSS container
container-build-push:
container-build:
# needs: dockerfile-linter
runs-on: ubuntu-latest
env:

@@ -33,30 +52,87 @@ jobs:

steps:
- name: Checkout
uses: actions/checkout@v3

- name: Setup python (release)
- name: setup python (release)
if: github.event_name == 'release'
uses: actions/setup-python@v2
with:
python-version: ${{ env.PYTHON_VERSION }}

python-version: 3.9 #install the python needed
- name: Install dependencies (release)
if: github.event_name == 'release'
run: |
pipx install poetry
pipx inject poetry poetry-bumpversion

- name: Update Prowler version (release)
if: github.event_name == 'release'
run: |
poetry version ${{ github.event.release.tag_name }}
- name: Set up Docker Buildx
uses: docker/setup-buildx-action@v2
- name: Build
uses: docker/build-push-action@v2
with:
# Without pushing to registries
push: false
tags: ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }}
file: ${{ env.DOCKERFILE_PATH }}
outputs: type=docker,dest=/tmp/${{ env.IMAGE_NAME }}.tar
- name: Share image between jobs
uses: actions/upload-artifact@v2
with:
name: ${{ env.IMAGE_NAME }}.tar
path: /tmp/${{ env.IMAGE_NAME }}.tar

# Lint Prowler OSS container using Dockle
# container-linter:
# needs: container-build
# runs-on: ubuntu-latest
# steps:
# -
# name: Get container image from shared
# uses: actions/download-artifact@v2
# with:
# name: ${{ env.IMAGE_NAME }}.tar
# path: /tmp
# -
# name: Load Docker image
# run: |
# docker load --input /tmp/${{ env.IMAGE_NAME }}.tar
# docker image ls -a
# -
# name: Install Dockle
# run: |
# VERSION=$(curl --silent "https://api.github.com/repos/goodwithtech/dockle/releases/latest" | \
# grep '"tag_name":' | \
# sed -E 's/.*"v([^"]+)".*/\1/' \
# ) && curl -L -o dockle.deb https://github.com/goodwithtech/dockle/releases/download/v${VERSION}/dockle_${VERSION}_Linux-64bit.deb \
# && sudo dpkg -i dockle.deb && rm dockle.deb
# -
# name: Run Dockle
# run: dockle ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }}

# Push Prowler OSS container to registries
container-push:
# needs: container-linter
needs: container-build
runs-on: ubuntu-latest
permissions:
id-token: write
contents: read # This is required for actions/checkout
steps:
- name: Get container image from shared
uses: actions/download-artifact@v2
with:
name: ${{ env.IMAGE_NAME }}.tar
path: /tmp
- name: Load Docker image
run: |
docker load --input /tmp/${{ env.IMAGE_NAME }}.tar
docker image ls -a
- name: Login to DockerHub
uses: docker/login-action@v2
with:
username: ${{ secrets.DOCKERHUB_USERNAME }}
password: ${{ secrets.DOCKERHUB_TOKEN }}

- name: Login to Public ECR
uses: docker/login-action@v2
with:

@@ -64,53 +140,55 @@ jobs:

username: ${{ secrets.PUBLIC_ECR_AWS_ACCESS_KEY_ID }}
password: ${{ secrets.PUBLIC_ECR_AWS_SECRET_ACCESS_KEY }}
env:
AWS_REGION: ${{ env.AWS_REGION }}
AWS_REGION: ${{ env.AWS_REGION_PRO }}

- name: Set up Docker Buildx
uses: docker/setup-buildx-action@v2

- name: Build and push container image (latest)
if: github.event_name == 'push'
uses: docker/build-push-action@v2
with:
push: true
tags: |
${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.LATEST_TAG }}
${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.LATEST_TAG }}
file: ${{ env.DOCKERFILE_PATH }}
cache-from: type=gha
cache-to: type=gha,mode=max

- name: Build and push container image (release)
if: github.event_name == 'release'
uses: docker/build-push-action@v2
with:
# Use local context to get changes
# https://github.com/docker/build-push-action#path-context
context: .
push: true
tags: |
${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ github.event.release.tag_name }}
${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.STABLE_TAG }}
${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ github.event.release.tag_name }}
${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.STABLE_TAG }}
file: ${{ env.DOCKERFILE_PATH }}
cache-from: type=gha
cache-to: type=gha,mode=max

dispatch-action:
needs: container-build-push
runs-on: ubuntu-latest
steps:
- name: Get latest commit info
- name: Tag (latest)
if: github.event_name == 'push'
run: |
LATEST_COMMIT_HASH=$(echo ${{ github.event.after }} | cut -b -7)
echo "LATEST_COMMIT_HASH=${LATEST_COMMIT_HASH}" >> $GITHUB_ENV
docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.LATEST_TAG }}
docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.LATEST_TAG }}

- # Push to master branch - push "latest" tag
name: Push (latest)
if: github.event_name == 'push'
run: |
docker push ${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.LATEST_TAG }}
docker push ${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.LATEST_TAG }}

- # Tag the new release (stable and release tag)
name: Tag (release)
if: github.event_name == 'release'
run: |
docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ github.event.release.tag_name }}
docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ github.event.release.tag_name }}

docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.STABLE_TAG }}
docker tag ${{ env.IMAGE_NAME }}:${{ env.TEMPORARY_TAG }} ${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.STABLE_TAG }}

- # Push the new release (stable and release tag)
name: Push (release)
if: github.event_name == 'release'
run: |
docker push ${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ github.event.release.tag_name }}
docker push ${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ github.event.release.tag_name }}

docker push ${{ secrets.DOCKER_HUB_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.STABLE_TAG }}
docker push ${{ secrets.PUBLIC_ECR_REPOSITORY }}/${{ env.IMAGE_NAME }}:${{ env.STABLE_TAG }}

- name: Delete artifacts
if: always()
uses: geekyeggo/delete-artifact@v1
with:
name: ${{ env.IMAGE_NAME }}.tar
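The tag and push steps above repeat one naming scheme, `<repository>/<image>:<tag>`, across two registries: a `push` event yields the `latest` tag, and a `release` event yields the release tag plus `stable`. The sketch below enumerates those references under that reading of the hunk. The function name, the repository strings and the `3.3.0` tag are hypothetical placeholders, since the real repository values live in secrets that the diff does not show.

```python
def image_references(repositories, image_name, event_name, release_tag=None):
    """Return the image references the tag/push steps would act on.

    Mirrors the workflow's conditions: 'push' -> latest,
    'release' -> <release tag> and stable. Any other event yields nothing.
    """
    if event_name == "push":
        tags = ["latest"]
    elif event_name == "release":
        if not release_tag:
            raise ValueError("a release event needs a tag name")
        tags = [release_tag, "stable"]
    else:
        tags = []
    # Same order as the workflow: every repository for one tag, then the next tag.
    return [f"{repo}/{image_name}:{tag}" for tag in tags for repo in repositories]


if __name__ == "__main__":
    repos = ["hub.example/org", "ecr.example/org"]  # placeholders, not real registries
    for ref in image_references(repos, "prowler", "release", "3.3.0"):
        print(ref)
```

Generating the list in one place makes it harder for the tag step and the push step to drift apart, which is the main risk of the hand-written `docker tag` / `docker push` pairs.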

dispatch-action:
needs: container-push
runs-on: ubuntu-latest
steps:
- name: Dispatch event for latest
if: github.event_name == 'push'
run: |
curl https://api.github.com/repos/${{ secrets.DISPATCH_OWNER }}/${{ secrets.DISPATCH_REPO }}/dispatches -H "Accept: application/vnd.github+json" -H "Authorization: Bearer ${{ secrets.ACCESS_TOKEN }}" -H "X-GitHub-Api-Version: 2022-11-28" --data '{"event_type":"dispatch","client_payload":{"version":"latest", "tag": "${{ env.LATEST_COMMIT_HASH }}"}}'
curl https://api.github.com/repos/${{ secrets.DISPATCH_OWNER }}/${{ secrets.DISPATCH_REPO }}/dispatches -H "Accept: application/vnd.github+json" -H "Authorization: Bearer ${{ secrets.ACCESS_TOKEN }}" -H "X-GitHub-Api-Version: 2022-11-28" --data '{"event_type":"dispatch","client_payload":{"version":"latest"}'
- name: Dispatch event for release
if: github.event_name == 'release'
run: |
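Both `curl` variants above pass the dispatch body as a hand-written JSON string, and the variant without the `tag` field appears to close one brace fewer than it opens. A minimal sketch of building the same body with a JSON serializer follows, which rules out that class of mistake. The function name and the sample SHA are hypothetical. The seven-character truncation mirrors `cut -b -7` from the workflow.

```python
import json


def dispatch_payload(version, commit_sha=None):
    """Serialize the repository-dispatch body used by the curl calls above."""
    client_payload = {"version": version}
    if commit_sha:
        # For an ASCII hex SHA, the first 7 characters equal `cut -b -7`.
        client_payload["tag"] = commit_sha[:7]
    return json.dumps({"event_type": "dispatch", "client_payload": client_payload})


if __name__ == "__main__":
    sample_sha = "0123456789abcdef0123456789abcdef01234567"  # made-up commit id
    print(dispatch_payload("latest", sample_sha))
    print(dispatch_payload("latest"))
```

Because the serializer always emits balanced braces and escaped strings, the payload stays valid even if more fields are added to `client_payload` later.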

10

.github/workflows/pull-request.yml

vendored

@@ -17,17 +17,14 @@ jobs:

steps:
- uses: actions/checkout@v3
- name: Install poetry
run: |
python -m pip install --upgrade pip
pipx install poetry
- name: Set up Python ${{ matrix.python-version }}
uses: actions/setup-python@v4
with:
python-version: ${{ matrix.python-version }}
cache: 'poetry'
- name: Install dependencies
run: |
python -m pip install --upgrade pip
pip install poetry
poetry install
poetry run pip list
VERSION=$(curl --silent "https://api.github.com/repos/hadolint/hadolint/releases/latest" | \

@@ -35,9 +32,6 @@ jobs:

sed -E 's/.*"v([^"]+)".*/\1/' \
) && curl -L -o /tmp/hadolint "https://github.com/hadolint/hadolint/releases/download/v${VERSION}/hadolint-Linux-x86_64" \
&& chmod +x /tmp/hadolint
- name: Poetry check
run: |
poetry lock --check
- name: Lint with flake8
run: |
poetry run flake8 . --ignore=E266,W503,E203,E501,W605,E128 --exclude contrib

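The Hadolint install step above derives the version by piping the GitHub "latest release" response through `grep` and `sed`, keeping whatever follows the leading `v` of `tag_name`. As a sketch of the same extraction done on parsed JSON, assuming only that the response carries a `tag_name` field, the logic could look like this. The function names and the sample payload are hypothetical, and no network request is made.

```python
import json


def release_version(release_json):
    """Return the release version without its leading 'v'.

    Unlike the sed expression, a tag without a 'v' prefix is returned unchanged.
    """
    tag = json.loads(release_json)["tag_name"]
    return tag[1:] if tag.startswith("v") else tag


def hadolint_download_url(version):
    """Build the download URL in the same shape the workflow uses."""
    return (
        "https://github.com/hadolint/hadolint/releases/download/"
        f"v{version}/hadolint-Linux-x86_64"
    )


if __name__ == "__main__":
    sample = '{"tag_name": "v1.2.3", "name": "sample release"}'  # made-up payload
    version = release_version(sample)
    print(version)
    print(hadolint_download_url(version))
```

Parsing the JSON avoids depending on how the API happens to lay out its lines, which is what the `grep '"tag_name":'` filter implicitly relies on.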

44

.github/workflows/pypi-release.yml

vendored

@@ -6,6 +6,7 @@ on:

env:
RELEASE_TAG: ${{ github.event.release.tag_name }}
GITHUB_BRANCH: master

jobs:
release-prowler-job:

@@ -16,16 +17,16 @@ jobs:

steps:
# Checks-out your repository under $GITHUB_WORKSPACE, so your job can access it
- uses: actions/checkout@v3

with:
ref: ${{ env.GITHUB_BRANCH }}
- name: setup python
uses: actions/setup-python@v2
with:
python-version: 3.9 #install the python needed
- name: Install dependencies
run: |
pipx install poetry
pipx inject poetry poetry-bumpversion
- name: setup python
uses: actions/setup-python@v4
with:
python-version: 3.9
cache: 'poetry'
- name: Change version and Build package
run: |
poetry version ${{ env.RELEASE_TAG }}

@@ -35,19 +36,26 @@ jobs:

git commit -m "chore(release): ${{ env.RELEASE_TAG }}" --no-verify
git tag -fa ${{ env.RELEASE_TAG }} -m "chore(release): ${{ env.RELEASE_TAG }}"
git push -f origin ${{ env.RELEASE_TAG }}
git checkout -B release-${{ env.RELEASE_TAG }}
poetry build
- name: Publish prowler package to PyPI
run: |
poetry config pypi-token.pypi ${{ secrets.PYPI_API_TOKEN }}
poetry publish
- name: Replicate PyPi Package
run: |
rm -rf ./dist && rm -rf ./build && rm -rf prowler.egg-info
python util/replicate_pypi_package.py
poetry build
- name: Publish prowler-cloud package to PyPI
run: |
poetry config pypi-token.pypi ${{ secrets.PYPI_API_TOKEN }}
poetry publish
# Create pull request with new version
- name: Create Pull Request
uses: peter-evans/create-pull-request@v4
with:
token: ${{ secrets.PROWLER_ACCESS_TOKEN }}
token: ${{ secrets.GITHUB_TOKEN }}
commit-message: "chore(release): update Prowler Version to ${{ env.RELEASE_TAG }}."
base: master
branch: release-${{ env.RELEASE_TAG }}
labels: "status/waiting-for-revision, severity/low"
title: "chore(release): update Prowler Version to ${{ env.RELEASE_TAG }}"

@@ -59,21 +67,3 @@ jobs:

### License

By submitting this pull request, I confirm that my contribution is made under the terms of the Apache 2.0 license.
- name: Replicate PyPi Package
run: |
rm -rf ./dist && rm -rf ./build && rm -rf prowler.egg-info
pip install toml
python util/replicate_pypi_package.py
poetry build
- name: Publish prowler-cloud package to PyPI
run: |
poetry config pypi-token.pypi ${{ secrets.PYPI_API_TOKEN }}
poetry publish
# Create pull request to github.com/Homebrew/homebrew-core to update prowler formula
- name: Bump Homebrew formula
uses: mislav/bump-homebrew-formula-action@v2
with:
formula-name: prowler
base-branch: release-${{ env.RELEASE_TAG }}
env:
COMMITTER_TOKEN: ${{ secrets.PROWLER_ACCESS_TOKEN }}


@@ -19,7 +19,6 @@ repos:

hooks:
- id: pretty-format-toml
args: [--autofix]
files: pyproject.toml

## BASH
- repo: https://github.com/koalaman/shellcheck-precommit

@@ -56,12 +55,10 @@ repos:

exclude: contrib
args: ["--ignore=E266,W503,E203,E501,W605"]

- repo: https://github.com/python-poetry/poetry
rev: 1.4.0 # add version here
- repo: https://github.com/haizaar/check-pipfile-lock
rev: v0.0.5
hooks:
- id: poetry-check
- id: poetry-lock
args: ["--no-update"]
- id: check-pipfile-lock

- repo: https://github.com/hadolint/hadolint
rev: v2.12.1-beta

@@ -76,15 +73,6 @@ repos:

entry: bash -c 'pylint --disable=W,C,R,E -j 0 -rn -sn prowler/'
language: system

- id: trufflehog
name: TruffleHog
description: Detect secrets in your data.
# entry: bash -c 'trufflehog git file://. --only-verified --fail'
# For running trufflehog in docker, use the following entry instead:
entry: bash -c 'docker run -v "$(pwd):/workdir" -i --rm trufflesecurity/trufflehog:latest git file:///workdir --only-verified --fail'
language: system
stages: ["commit", "push"]

- id: pytest-check
name: pytest-check
entry: bash -c 'pytest tests -n auto'


55

README.md

@@ -11,14 +11,14 @@

</p>
<p align="center">
<a href="https://join.slack.com/t/prowler-workspace/shared_invite/zt-1hix76xsl-2uq222JIXrC7Q8It~9ZNog"><img alt="Slack Shield" src="https://img.shields.io/badge/slack-prowler-brightgreen.svg?logo=slack"></a>
<a href="https://pypi.org/project/prowler/"><img alt="Python Version" src="https://img.shields.io/pypi/v/prowler.svg"></a>
<a href="https://pypi.python.org/pypi/prowler/"><img alt="Python Version" src="https://img.shields.io/pypi/pyversions/prowler.svg"></a>
<a href="https://pypistats.org/packages/prowler"><img alt="PyPI Prowler Downloads" src="https://img.shields.io/pypi/dw/prowler.svg?label=prowler%20downloads"></a>
<a href="https://pypistats.org/packages/prowler-cloud"><img alt="PyPI Prowler-Cloud Downloads" src="https://img.shields.io/pypi/dw/prowler-cloud.svg?label=prowler-cloud%20downloads"></a>
<a href="https://pypi.org/project/prowler-cloud/"><img alt="Python Version" src="https://img.shields.io/pypi/v/prowler.svg"></a>
<a href="https://pypi.python.org/pypi/prowler-cloud/"><img alt="Python Version" src="https://img.shields.io/pypi/pyversions/prowler.svg"></a>
<a href="https://pypistats.org/packages/prowler"><img alt="PyPI Prowler Downloads" src="https://img.shields.io/pypi/dw/prowler.svg"></a>
<a href="https://pypistats.org/packages/prowler-cloud"><img alt="PyPI Prowler-Cloud Downloads" src="https://img.shields.io/pypi/dw/prowler-cloud.svg"></a>
<a href="https://hub.docker.com/r/toniblyx/prowler"><img alt="Docker Pulls" src="https://img.shields.io/docker/pulls/toniblyx/prowler"></a>
<a href="https://hub.docker.com/r/toniblyx/prowler"><img alt="Docker" src="https://img.shields.io/docker/cloud/build/toniblyx/prowler"></a>
<a href="https://hub.docker.com/r/toniblyx/prowler"><img alt="Docker" src="https://img.shields.io/docker/image-size/toniblyx/prowler"></a>
<a href="https://gallery.ecr.aws/prowler-cloud/prowler"><img width="120" height=19" alt="AWS ECR Gallery" src="https://user-images.githubusercontent.com/3985464/151531396-b6535a68-c907-44eb-95a1-a09508178616.png"></a>
<a href="https://gallery.ecr.aws/o4g1s5r6/prowler"><img width="120" height=19" alt="AWS ECR Gallery" src="https://user-images.githubusercontent.com/3985464/151531396-b6535a68-c907-44eb-95a1-a09508178616.png"></a>
</p>
<p align="center">
<a href="https://github.com/prowler-cloud/prowler"><img alt="Repo size" src="https://img.shields.io/github/repo-size/prowler-cloud/prowler"></a>

@@ -33,17 +33,12 @@

# Description

`Prowler` is an Open Source security tool to perform AWS, GCP and Azure security best practices assessments, audits, incident response, continuous monitoring, hardening and forensics readiness.
`Prowler` is an Open Source security tool to perform AWS and Azure security best practices assessments, audits, incident response, continuous monitoring, hardening and forensics readiness.

It contains hundreds of controls covering CIS, PCI-DSS, ISO27001, GDPR, HIPAA, FFIEC, SOC2, AWS FTR, ENS and custom security frameworks.

# 📖 Documentation

The full documentation can now be found at [https://docs.prowler.cloud](https://docs.prowler.cloud)

## Looking for Prowler v2 documentation?
For Prowler v2 Documentation, please go to https://github.com/prowler-cloud/prowler/tree/2.12.1.

# ⚙️ Install

## Pip package

@@ -53,7 +48,6 @@ Prowler is available as a project in [PyPI](https://pypi.org/project/prowler-clo

pip install prowler
prowler -v
```
More details at https://docs.prowler.cloud

## Containers


@@ -66,7 +60,7 @@ The available versions of Prowler are the following:

The container images are available here:

- [DockerHub](https://hub.docker.com/r/toniblyx/prowler/tags)
- [AWS Public ECR](https://gallery.ecr.aws/prowler-cloud/prowler)
- [AWS Public ECR](https://gallery.ecr.aws/o4g1s5r6/prowler)

## From Github


@@ -80,11 +74,16 @@ poetry install

|

||||

python prowler.py -v

|

||||

```

|

||||

|

||||

# 📖 Documentation

|

||||

|

||||

The full documentation can now be found at [https://docs.prowler.cloud](https://docs.prowler.cloud)

|

||||

|

||||

|

||||

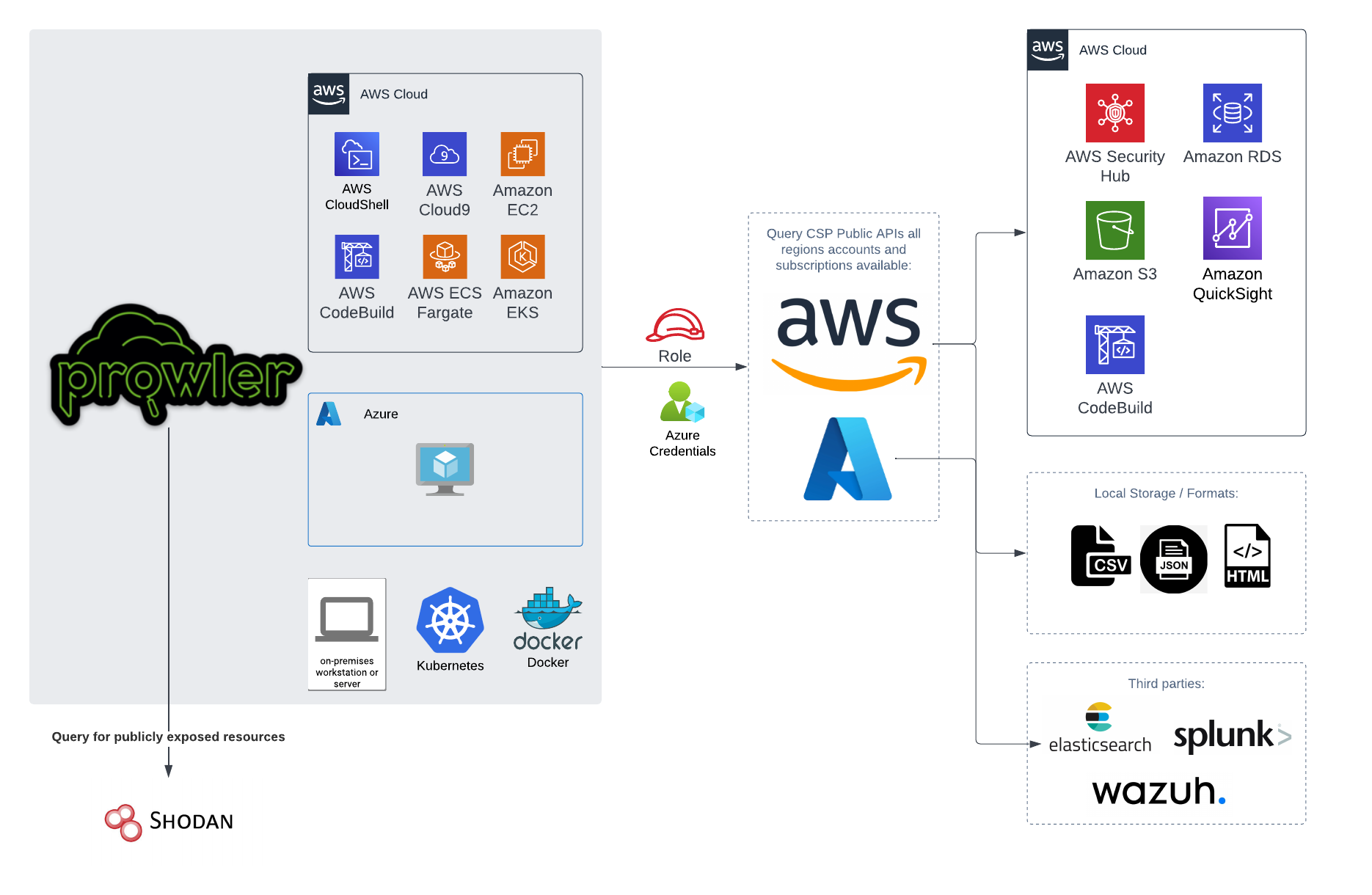

# 📐✏️ High level architecture

|

||||

|

||||

You can run Prowler from your workstation, an EC2 instance, Fargate or any other container, Codebuild, CloudShell and Cloud9.

|

||||

|

||||

|

||||

|

||||

|

||||

# 📝 Requirements

|

||||

|

||||

@@ -163,22 +162,6 @@ Regarding the subscription scope, Prowler by default scans all the subscriptions

- `Reader`


## Google Cloud Platform

Prowler will follow the same credentials search as [Google authentication libraries](https://cloud.google.com/docs/authentication/application-default-credentials#search_order):

1. [GOOGLE_APPLICATION_CREDENTIALS environment variable](https://cloud.google.com/docs/authentication/application-default-credentials#GAC)
2. [User credentials set up by using the Google Cloud CLI](https://cloud.google.com/docs/authentication/application-default-credentials#personal)
3. [The attached service account, returned by the metadata server](https://cloud.google.com/docs/authentication/application-default-credentials#attached-sa)

Those credentials must be associated to a user or service account with proper permissions to do all checks. To make sure, add the following roles to the member associated with the credentials:

- Viewer
- Security Reviewer
- Stackdriver Account Viewer

> `prowler` will scan the project associated with the credentials.

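The three-step credential search described above can be pictured as an ordered list of candidates in which the first usable one wins. The sketch below is an illustration of that ordering only, not Prowler's code and not the Google library's implementation. The function name is hypothetical, and the `~/.config/gcloud/application_default_credentials.json` location is an assumption about where the Google Cloud CLI stores user credentials on Linux and macOS, which the diff itself does not state.

```python
from pathlib import PurePosixPath


def adc_candidates(environ, home):
    """List credential sources in the documented search order.

    `environ` is a mapping of environment variables and `home` is the
    user's home directory. Nothing is read from disk or the network.
    """
    candidates = []
    explicit = environ.get("GOOGLE_APPLICATION_CREDENTIALS")
    if explicit:
        # 1. Explicit key file named by the environment variable.
        candidates.append(("environment variable", explicit))
    # 2. User credentials created by the Google Cloud CLI (assumed location).
    well_known = PurePosixPath(home) / ".config" / "gcloud" / "application_default_credentials.json"
    candidates.append(("gcloud user credentials", str(well_known)))
    # 3. Service account attached to the resource, served by the metadata server.
    candidates.append(("attached service account", "metadata server"))
    return candidates


if __name__ == "__main__":
    env = {"GOOGLE_APPLICATION_CREDENTIALS": "/tmp/key.json"}  # made-up path
    for source, location in adc_candidates(env, "/home/user"):
        print(f"{source}: {location}")
```

Whichever source ends up being used, the roles listed above still have to be granted to the identity behind it.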

# 💻 Basic Usage

To run prowler, you will need to specify the provider (e.g aws or azure):

@@ -253,14 +236,12 @@ prowler azure [--sp-env-auth, --az-cli-auth, --browser-auth, --managed-identity-

|

||||

```

|

||||

> By default, `prowler` will scan all Azure subscriptions.

|

||||

|

||||

## Google Cloud Platform

|

||||

|

||||

Optionally, you can provide the location of an application credential JSON file with the following argument:

|

||||

|

||||

```console

|

||||

prowler gcp --credentials-file path

|

||||

```

|

||||

# 🎉 New Features

|

||||

|

||||

- Python: we got rid of all bash and it is now all in Python.

|

||||

- Faster: huge performance improvements (same account from 2.5 hours to 4 minutes).

|

||||

- Developers and community: we have made it easier to contribute with new checks and new compliance frameworks. We also included unit tests.

|

||||

- Multi-cloud: in addition to AWS, we have added Azure, and we plan to include GCP and OCI soon. Let us know if you want to contribute!

|

||||

|

||||

# 📃 License

|

||||

|

||||

|

||||

@@ -79,21 +79,3 @@ Regarding the subscription scope, Prowler by default scans all the subscriptions

|

||||

|

||||

- `Security Reader`

|

||||

- `Reader`

|

||||

|

||||

## Google Cloud

|

||||

|

||||

### GCP Authentication

|

||||

|

||||

Prowler will follow the same credentials search as [Google authentication libraries](https://cloud.google.com/docs/authentication/application-default-credentials#search_order):

|

||||

|

||||

1. [GOOGLE_APPLICATION_CREDENTIALS environment variable](https://cloud.google.com/docs/authentication/application-default-credentials#GAC)

|

||||

2. [User credentials set up by using the Google Cloud CLI](https://cloud.google.com/docs/authentication/application-default-credentials#personal)

|

||||

3. [The attached service account, returned by the metadata server](https://cloud.google.com/docs/authentication/application-default-credentials#attached-sa)

|

||||

|

||||

Those credentials must be associated with a user or service account that has the permissions needed to run all checks. To make sure, add the following roles to the member associated with the credentials:

|

||||

|

||||

- Viewer

|

||||

- Security Reviewer

|

||||

- Stackdriver Account Viewer

|

||||

|

||||

> `prowler` will scan the project associated with the credentials.

|

||||

|

||||

|

Before Width: | Height: | Size: 283 KiB After Width: | Height: | Size: 258 KiB |

|

Before Width: | Height: | Size: 631 KiB |

|

Before Width: | Height: | Size: 320 KiB |

BIN

docs/img/quick-inventory.png

Normal file

|

After Width: | Height: | Size: 220 KiB |

@@ -16,7 +16,7 @@ For **Prowler v2 Documentation**, please go [here](https://github.com/prowler-cl

|

||||

|

||||

## About Prowler

|

||||

|

||||

**Prowler** is an Open Source security tool to perform AWS, Azure and Google Cloud security best practices assessments, audits, incident response, continuous monitoring, hardening and forensics readiness.

|

||||

**Prowler** is an Open Source security tool to perform AWS and Azure security best practices assessments, audits, incident response, continuous monitoring, hardening and forensics readiness.

|

||||

|

||||

It contains hundreds of controls covering CIS, PCI-DSS, ISO27001, GDPR, HIPAA, FFIEC, SOC2, AWS FTR, ENS and custom security frameworks.

|

||||

|

||||

@@ -40,7 +40,7 @@ Prowler is available as a project in [PyPI](https://pypi.org/project/prowler-clo

|

||||

|

||||

* `Python >= 3.9`

|

||||

* `Python pip >= 3.9`

|

||||

* AWS, GCP and/or Azure credentials

|

||||

* AWS and/or Azure credentials

|

||||

|

||||

_Commands_:

|

||||

|

||||

@@ -54,7 +54,7 @@ Prowler is available as a project in [PyPI](https://pypi.org/project/prowler-clo

|

||||

_Requirements_:

|

||||

|

||||

* Have `docker` installed: https://docs.docker.com/get-docker/.

|

||||

* AWS, GCP and/or Azure credentials

|

||||

* AWS and/or Azure credentials

|

||||

* In the command below, change `-v` to your local directory path in order to access the reports.

|

||||

|

||||

_Commands_:

|

||||

@@ -71,7 +71,7 @@ Prowler is available as a project in [PyPI](https://pypi.org/project/prowler-clo

|

||||

|

||||

_Requirements for Ubuntu 20.04.3 LTS_:

|

||||

|

||||

* AWS, GCP and/or Azure credentials

|

||||

* AWS and/or Azure credentials

|

||||

* Install python 3.9 with: `sudo apt-get install python3.9`

|

||||

* Remove python 3.8 to avoid conflicts if you can: `sudo apt-get remove python3.8`

|

||||

* Make sure you have the python3 distutils package installed: `sudo apt-get install python3-distutils`

|

||||

@@ -87,28 +87,11 @@ Prowler is available as a project in [PyPI](https://pypi.org/project/prowler-clo

|

||||

prowler -v

|

||||

```

|

||||

|

||||

=== "GitHub"

|

||||

|

||||

_Requirements for Developers_:

|

||||

|

||||

* AWS, GCP and/or Azure credentials

|

||||

* `git`, `Python >= 3.9`, `pip` and `poetry` installed (`pip install poetry`)

|

||||

|

||||

_Commands_:

|

||||

|

||||

```

|

||||

git clone https://github.com/prowler-cloud/prowler

|

||||

cd prowler

|

||||

poetry shell

|

||||

poetry install

|

||||

python prowler.py -v

|

||||

```

|

||||

|

||||

=== "Amazon Linux 2"

|

||||

|

||||

_Requirements_:

|

||||

|

||||

* AWS, GCP and/or Azure credentials

|

||||

* AWS and/or Azure credentials

|

||||

* The latest Amazon Linux 2 should come with Python 3.9 already installed; however, it may need pip. Install Python pip 3.9 with: `sudo dnf install -y python3-pip`.

|

||||

* Make sure setuptools for python is already installed with: `pip3 install setuptools`

|

||||

|

||||

@@ -120,20 +103,6 @@ Prowler is available as a project in [PyPI](https://pypi.org/project/prowler-clo

|

||||

prowler -v

|

||||

```

|

||||

|

||||

=== "Brew"

|

||||

|

||||

_Requirements_:

|

||||

|

||||

* `Brew` installed in your Mac or Linux

|

||||

* AWS, GCP and/or Azure credentials

|

||||

|

||||

_Commands_:

|

||||

|

||||

``` bash

|

||||

brew install prowler

|

||||

prowler -v

|

||||

```

|

||||

|

||||

=== "AWS CloudShell"

|

||||

|

||||

Prowler can be easily executed in AWS CloudShell, but it has some prerequisites. AWS CloudShell is a container running `Amazon Linux release 2 (Karoo)` that comes with Python 3.7; since Prowler requires Python >= 3.9, a newer version of Python must be installed first. Follow the steps below to successfully execute Prowler v3 in AWS CloudShell:

|

||||

@@ -185,7 +154,7 @@ The available versions of Prowler are the following:

|

||||

The container images are available here:

|

||||

|

||||

- [DockerHub](https://hub.docker.com/r/toniblyx/prowler/tags)

|

||||

- [AWS Public ECR](https://gallery.ecr.aws/prowler-cloud/prowler)

|

||||

- [AWS Public ECR](https://gallery.ecr.aws/o4g1s5r6/prowler)

|

||||

|

||||

## High level architecture

|

||||

|

||||

@@ -194,7 +163,7 @@ You can run Prowler from your workstation, an EC2 instance, Fargate or any other

|

||||

|

||||

## Basic Usage

|

||||

|

||||

To run Prowler, you will need to specify the provider (e.g aws, gcp or azure):

|

||||

To run Prowler, you will need to specify the provider (e.g aws or azure):

|

||||

> If no provider specified, AWS will be used for backward compatibility with most of v2 options.

|

||||

|

||||

```console

|

||||

@@ -226,7 +195,6 @@ For executing specific checks or services you can use options `-c`/`checks` or `

|

||||

```console

|

||||

prowler azure --checks storage_blob_public_access_level_is_disabled

|

||||

prowler aws --services s3 ec2

|

||||

prowler gcp --services iam compute

|

||||

```

|

||||

|

||||

Also, checks and services can be excluded with options `-e`/`--excluded-checks` or `--excluded-services`:

|

||||

@@ -234,7 +202,6 @@ Also, checks and services can be excluded with options `-e`/`--excluded-checks`

|

||||

```console

|

||||

prowler aws --excluded-checks s3_bucket_public_access

|

||||

prowler azure --excluded-services defender iam

|

||||

prowler gcp --excluded-services kms

|

||||

```

|

||||

|

||||

More options and execution methods that will save you time are described in [Miscellaneous](tutorials/misc.md).

|

||||

@@ -254,8 +221,6 @@ prowler aws --profile custom-profile -f us-east-1 eu-south-2

|

||||

```

|

||||

> By default, `prowler` will scan all AWS regions.

|

||||

|

||||

See more details about AWS Authentication in [Requirements](getting-started/requirements.md)

|

||||

|

||||

### Azure

|

||||

|

||||

With Azure you need to specify which auth method is going to be used:

|

||||

@@ -274,28 +239,9 @@ prowler azure --browser-auth

|

||||

prowler azure --managed-identity-auth

|

||||

```

|

||||

|

||||

See more details about Azure Authentication in [Requirements](getting-started/requirements.md)

|

||||

More details in [Requirements](getting-started/requirements.md)

|

||||

|

||||

By default, Prowler scans all the subscriptions it is allowed to scan. If you want to scan a single subscription or several specific subscriptions, you can use the following flag (using az cli auth as an example):

|

||||

```console

|

||||

prowler azure --az-cli-auth --subscription-ids <subscription ID 1> <subscription ID 2> ... <subscription ID N>

|

||||

```

|

||||

|

||||

### Google Cloud

|

||||

|

||||

By default, Prowler uses your User Account credentials. You can configure them using:

|

||||

|

||||

- `gcloud init` to use a new account

|

||||

- `gcloud config set account <account>` to use an existing account

|

||||

|

||||

Then, obtain your access credentials using: `gcloud auth application-default login`

|

||||

|

||||

Otherwise, you can generate and download Service Account keys in JSON format (refer to https://cloud.google.com/iam/docs/creating-managing-service-account-keys) and provide the location of the file with the following argument:

|

||||

|

||||

```console

|

||||

prowler gcp --credentials-file path

|

||||

```

|

||||

|

||||

> `prowler` will scan the GCP project associated with the credentials.

|

||||

|

||||

See more details about GCP Authentication in [Requirements](getting-started/requirements.md)

|

||||

|

||||

@@ -7,52 +7,35 @@ You can use `-w`/`--allowlist-file` with the path of your allowlist yaml file, b

|

||||

|

||||

## Allowlist Yaml File Syntax

|

||||

|

||||

### Account, Check and/or Region can be * to apply for all the cases.

|

||||

### Resources and tags are lists that can have either Regex or Keywords.

|

||||

### Tags is an optional list that matches on tuples of 'key=value' and are "ANDed" together.

|

||||

### Use an alternation Regex to match one of multiple tags with "ORed" logic.

|

||||

### Account, Check and/or Region can be * to apply for all the cases

|

||||

### Resources is a list that can have either Regex or Keywords:

|

||||

########################### ALLOWLIST EXAMPLE ###########################

|

||||

Allowlist:

|

||||

Accounts:

|

||||

"123456789012":

|

||||

Checks:

|

||||

Checks:

|

||||

"iam_user_hardware_mfa_enabled":

|

||||

Regions:

|

||||

Regions:

|

||||

- "us-east-1"

|

||||

Resources:

|

||||

Resources:

|

||||

- "user-1" # Will ignore user-1 in check iam_user_hardware_mfa_enabled

|

||||

- "user-2" # Will ignore user-2 in check iam_user_hardware_mfa_enabled

|

||||

"ec2_*":

|

||||

Regions:

|

||||

- "*"

|

||||

Resources:

|

||||

- "*" # Will ignore every EC2 check in every account and region

|

||||

"*":

|

||||

Regions:

|

||||

Regions:

|

||||

- "*"

|

||||

Resources:

|

||||

- "test"

|

||||

Tags:

|

||||

- "test=test" # Will ignore every resource containing the string "test" and the tags 'test=test' and

|

||||

- "project=test|project=stage" # either of ('project=test' OR project=stage) in account 123456789012 and every region

|

||||

Resources:

|

||||

- "test" # Will ignore every resource containing the string "test" in every account and region

|

||||

|

||||

"*":

|

||||

Checks:

|

||||

Checks:

|

||||

"s3_bucket_object_versioning":

|

||||

Regions:

|

||||

Regions:

|

||||

- "eu-west-1"

|

||||

- "us-east-1"

|

||||

Resources:

|

||||

Resources:

|

||||

- "ci-logs" # Will ignore bucket "ci-logs" AND ALSO bucket "ci-logs-replica" in specified check and regions

|

||||

- "logs" # Will ignore EVERY BUCKET containing the string "logs" in specified check and regions

|

||||

- "[[:alnum:]]+-logs" # Will ignore all buckets containing the terms ci-logs, qa-logs, etc. in specified check and regions

|

||||

"*":

|

||||

Regions:

|

||||

- "*"

|

||||

Resources:

|

||||

- "*"

|

||||

Tags:

|

||||

- "environment=dev" # Will ignore every resource containing the tag 'environment=dev' in every account and region

|

||||

|

||||

|

||||

## Supported Allowlist Locations

|

||||

@@ -87,7 +70,6 @@ prowler aws -w arn:aws:dynamodb:<region_name>:<account_id>:table/<table_name>

|

||||

- Checks (String): This field can contain either a Prowler Check Name or an `*` (which applies to all the scanned checks).

|

||||

- Regions (List): This field contains a list of regions where this allowlist rule is applied (it can also contain an `*` to apply to all scanned regions).

|

||||

- Resources (List): This field contains a list of regular expressions that match the resources to be allowlisted.

|

||||

- Tags (List): -Optional- This field contains a list of tuples in the form of 'key=value' that applies to the resources tags that are wanted to be allowlisted.
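How these fields could combine for a single finding is sketched below in Python. This is an illustration under assumptions, not Prowler's actual matching code: the function name `is_allowlisted` and the flattened `key=value | key=value` tag string are hypothetical. Every entry in `Tags` must match (AND), while an alternation inside one entry gives OR behavior.

```python
import re
from typing import Dict, List


def is_allowlisted(rule: Dict[str, List[str]], region: str, resource: str, tags: str = "") -> bool:
    """Return True when a finding matches one allowlist rule (hypothetical helper)."""
    # Regions: either the wildcard or an exact region name must be listed.
    regions = rule.get("Regions", [])
    if "*" not in regions and region not in regions:
        return False
    # Resources: "*" matches everything, otherwise each entry is treated as a regex.
    resources = rule.get("Resources", [])
    if not any(pattern == "*" or re.search(pattern, resource) for pattern in resources):
        return False
    # Tags (optional): every entry must match somewhere in the tag string.
    return all(re.search(tag_pattern, tags) for tag_pattern in rule.get("Tags", []))


rule = {
    "Regions": ["*"],
    "Resources": ["test"],
    "Tags": ["test=test", "project=test|project=stage"],
}
print(is_allowlisted(rule, "eu-west-1", "my-test-bucket", "test=test | project=stage"))
print(is_allowlisted(rule, "eu-west-1", "my-test-bucket", "project=stage"))
```

The first call satisfies both tag entries; the second lacks `test=test`, so the rule does not apply to it.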

|

||||

|

||||

<img src="../img/allowlist-row.png"/>

|

||||

|

||||

@@ -119,7 +101,7 @@ generates an Allowlist:

|

||||

```

|

||||

def handler(event, context):

|

||||

checks = {}

|

||||

checks["vpc_flow_logs_enabled"] = { "Regions": [ "*" ], "Resources": [ "" ], Optional("Tags"): [ "key:value" ] }

|

||||

checks["vpc_flow_logs_enabled"] = { "Regions": [ "*" ], "Resources": [ "" ] }

|

||||

|

||||

al = { "Allowlist": { "Accounts": { "*": { "Checks": checks } } } }

|

||||

return al

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

# Scan Multiple AWS Accounts

|

||||

|

||||

Prowler can scan multiple accounts when it is executed from one account that can assume a role in those given accounts to scan using [Assume Role feature](role-assumption.md) and [AWS Organizations integration feature](organizations.md).

|

||||

Prowler can scan multiple accounts when it is ejecuted from one account that can assume a role in those given accounts to scan using [Assume Role feature](role-assumption.md) and [AWS Organizations integration feature](organizations.md).

|

||||

|

||||

|

||||

## Scan multiple specific accounts sequentially

|

||||

|

||||

@@ -1,81 +0,0 @@

|

||||

# AWS Regions and Partitions

|

||||

|

||||

By default Prowler is able to scan the following AWS partitions:

|

||||

|

||||

- Commercial: `aws`

|

||||

- China: `aws-cn`

|

||||

- GovCloud (US): `aws-us-gov`

|

||||

|

||||

> To check the available regions for each partition and service please refer to the following document [aws_regions_by_service.json](https://github.com/prowler-cloud/prowler/blob/master/prowler/providers/aws/aws_regions_by_service.json)

|

||||

|

||||

It is important to take into consideration that to scan the China (`aws-cn`) or GovCloud (`aws-us-gov`) partitions it is either required to have a valid region for that partition in your AWS credentials or to specify the regions you want to audit for that partition using the `-f/--region` flag.

|

||||

> Please, refer to https://boto3.amazonaws.com/v1/documentation/api/latest/guide/credentials.html#configuring-credentials for more information about the AWS credentials configuration.

|

||||

|

||||

You can get more information about the available partitions and regions in the following [Botocore](https://github.com/boto/botocore) [file](https://github.com/boto/botocore/blob/22a19ea7c4c2c4dd7df4ab8c32733cba0c7597a4/botocore/data/partitions.json).

|

||||

## AWS China

|

||||

|

||||

To scan your AWS account in the China partition (`aws-cn`):

|

||||

|

||||

- Using the `-f/--region` flag:

|

||||

```

|

||||

prowler aws --region cn-north-1 cn-northwest-1

|

||||

```

|

||||

- Using the region configured in your AWS profile at `~/.aws/credentials` or `~/.aws/config`:

|

||||

```

|

||||

[default]

|

||||

aws_access_key_id = XXXXXXXXXXXXXXXXXXX

|

||||

aws_secret_access_key = XXXXXXXXXXXXXXXXXXX

|

||||

region = cn-north-1

|

||||

```

|

||||

> With this option, all the regions in the partition will be scanned without needing to use the `-f/--region` flag

|

||||

|

||||

|

||||

## AWS GovCloud (US)

|

||||

|

||||

To scan your AWS account in the GovCloud (US) partition (`aws-us-gov`):

|

||||

|

||||

- Using the `-f/--region` flag:

|

||||

```

|

||||

prowler aws --region us-gov-east-1 us-gov-west-1

|

||||

```

|

||||

- Using the region configured in your AWS profile at `~/.aws/credentials` or `~/.aws/config`:

|

||||

```

|

||||

[default]

|

||||

aws_access_key_id = XXXXXXXXXXXXXXXXXXX

|

||||

aws_secret_access_key = XXXXXXXXXXXXXXXXXXX

|

||||

region = us-gov-east-1

|

||||

```

|

||||

> With this option, all the regions in the partition will be scanned without needing to use the `-f/--region` flag

|

||||

|

||||

|

||||

## AWS ISO (US & Europe)

|

||||

|

||||

For the AWS ISO partitions, which are known as "secret partitions" and are air-gapped from the Internet, there is no built-in way to scan them. If you want to audit an AWS account in one of the AWS ISO partitions, you should manually update the [aws_regions_by_service.json](https://github.com/prowler-cloud/prowler/blob/master/prowler/providers/aws/aws_regions_by_service.json) and include the partition, region and services, e.g.:

|

||||

```json

|

||||

"iam": {

|

||||

"regions": {

|

||||

"aws": [

|

||||

"eu-west-1",

|

||||

"us-east-1"

|

||||

],

|

||||

"aws-cn": [

|

||||

"cn-north-1",

|

||||

"cn-northwest-1"

|

||||

],

|

||||

"aws-us-gov": [

|

||||

"us-gov-east-1",

|

||||

"us-gov-west-1"

|

||||

],

|

||||

"aws-iso": [

|

||||

"aws-iso-global",

|

||||

"us-iso-east-1",

|

||||

"us-iso-west-1"

|

||||

],

|

||||

"aws-iso-b": [

|

||||

"aws-iso-b-global",

|

||||

"us-isob-east-1"

|

||||

],

|

||||

"aws-iso-e": []

|

||||

}

|

||||

},

|

||||

```
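To show how a structure like this can be queried, here is a small Python sketch. It assumes a dictionary shaped like the fragment above (service, then `regions`, then partition); the helper name `regions_for` and the inline data are illustrative only and do not reflect how Prowler itself loads the file.

```python
from typing import Any, Dict, List

# Inline data shaped like the fragment above (illustrative, not the full file).
regions_by_service: Dict[str, Any] = {
    "iam": {
        "regions": {
            "aws": ["eu-west-1", "us-east-1"],
            "aws-cn": ["cn-north-1", "cn-northwest-1"],
            "aws-us-gov": ["us-gov-east-1", "us-gov-west-1"],
            "aws-iso": ["aws-iso-global", "us-iso-east-1", "us-iso-west-1"],
        }
    }
}


def regions_for(service: str, partition: str, data: Dict[str, Any] = regions_by_service) -> List[str]:
    """Return the regions listed for a service in a partition, or an empty list."""
    return data.get(service, {}).get("regions", {}).get(partition, [])


print(regions_for("iam", "aws-iso"))
print(regions_for("iam", "aws-iso-e"))
```

A partition that has not been added to the file simply yields an empty list, which is why the manual update is needed before an ISO partition can be scanned.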

|

||||

@@ -13,55 +13,35 @@ Before sending findings to Prowler, you will need to perform next steps:

|

||||

- Using the AWS Management Console:

|

||||

|

||||

3. Allow Prowler to import its findings to AWS Security Hub by adding the policy below to the role or user running Prowler:

|

||||

- [prowler-security-hub.json](https://github.com/prowler-cloud/prowler/blob/master/permissions/prowler-security-hub.json)

|

||||

- [prowler-security-hub.json](https://github.com/prowler-cloud/prowler/blob/master/iam/prowler-security-hub.json)

|

||||

|

||||

Once it is enabled, it is as simple as running the command below (for all regions):

|

||||

|

||||

```sh

|

||||

prowler aws -S

|

||||

./prowler aws -S

|

||||

```

|

||||

|

||||

or for only one filtered region like eu-west-1:

|

||||

|

||||

```sh

|

||||

prowler -S -f eu-west-1

|

||||

./prowler -S -f eu-west-1

|

||||

```

|

||||

|

||||

> **Note 1**: It is recommended to send only failed findings to Security Hub, which is possible by adding `-q` to the command.

|

||||

|

||||

> **Note 2**: Since Prowler perform checks to all regions by default you may need to filter by region when runing Security Hub integration, as shown in the example above. Remember to enable Security Hub in the region or regions you need by calling `aws securityhub enable-security-hub --region <region>` and run Prowler with the option `-f <region>` (if no region is used it will try to push findings in all regions hubs). Prowler will send findings to the Security Hub on the region where the scanned resource is located.

|

||||

> **Note 2**: Since Prowler perform checks to all regions by defauls you may need to filter by region when runing Security Hub integration, as shown in the example above. Remember to enable Security Hub in the region or regions you need by calling `aws securityhub enable-security-hub --region <region>` and run Prowler with the option `-f <region>` (if no region is used it will try to push findings in all regions hubs).

|

||||

|

||||

> **Note 3**: To have updated findings in Security Hub you have to run Prowler periodically. Once a day or every certain amount of hours.

|

||||

> **Note 3** to have updated findings in Security Hub you have to run Prowler periodically. Once a day or every certain amount of hours.

|

||||

|

||||

Once you have sent findings for the first time, you will be able to see Prowler findings in the Findings section:

|

||||

|

||||

|

||||

|

||||

## Send findings to Security Hub assuming an IAM Role

|

||||

|

||||

When you are auditing a multi-account AWS environment, you can send findings to a Security Hub of another account by assuming an IAM role from that account using the `-R` flag in the Prowler command:

|

||||

|

||||

```sh

|

||||

prowler -S -R arn:aws:iam::123456789012:role/ProwlerExecRole

|

||||

```

|

||||

|

||||

> Remember that the used role needs to have permissions to send findings to Security Hub. To get more information about the permissions required, please refer to the following IAM policy [prowler-security-hub.json](https://github.com/prowler-cloud/prowler/blob/master/permissions/prowler-security-hub.json)

|

||||

|

||||

|

||||

## Send only failed findings to Security Hub

|

||||

|

||||

When using Security Hub it is recommended to send only the failed findings generated. To follow that recommendation you could add the `-q` flag to the Prowler command:

|

||||

|

||||

```sh

|

||||

prowler -S -q

|

||||

```

|

||||

|

||||

|

||||

## Skip sending updates of findings to Security Hub

|

||||

|

||||

By default, Prowler archives all its findings in Security Hub that have not appeared in the last scan.

|

||||

You can skip this logic by using the option `--skip-sh-update` so Prowler will not archive older findings:

|

||||

|

||||

```sh

|

||||

prowler -S --skip-sh-update

|

||||

./prowler -S --skip-sh-update

|

||||

```
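When several of these options are combined in automation, the command line can be assembled programmatically. The Python sketch below only uses the flags shown in this section (`-S`, `-q`, `-f`, `-R`, `--skip-sh-update`); the function name `security_hub_command` is hypothetical and the sketch builds the argument list without running Prowler.

```python
from typing import List, Optional


def security_hub_command(
    regions: Optional[List[str]] = None,
    only_failed: bool = False,
    role_arn: Optional[str] = None,
    skip_update: bool = False,
) -> List[str]:
    """Build a Prowler argument list for the Security Hub integration."""
    cmd = ["prowler", "aws", "-S"]
    if only_failed:
        cmd.append("-q")  # send only failed findings
    if regions:
        cmd += ["-f", *regions]  # filter by region
    if role_arn:
        cmd += ["-R", role_arn]  # assume an IAM role in another account
    if skip_update:
        cmd.append("--skip-sh-update")  # do not archive older findings
    return cmd


print(" ".join(security_hub_command(regions=["eu-west-1"], only_failed=True)))
```

The resulting list can be passed to `subprocess.run` as-is, which avoids shell quoting issues with role ARNs.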

|

||||

|

||||

@@ -31,7 +31,7 @@ checks_v3_to_v2_mapping = {

|

||||

"awslambda_function_url_cors_policy": "extra7180",

|

||||

"awslambda_function_url_public": "extra7179",

|

||||

"awslambda_function_using_supported_runtimes": "extra762",

|

||||

"cloudformation_stack_outputs_find_secrets": "extra742",

|

||||

"cloudformation_outputs_find_secrets": "extra742",

|

||||

"cloudformation_stacks_termination_protection_enabled": "extra7154",

|

||||

"cloudfront_distributions_field_level_encryption_enabled": "extra767",

|

||||

"cloudfront_distributions_geo_restrictions_enabled": "extra732",

|

||||

@@ -113,6 +113,7 @@ checks_v3_to_v2_mapping = {

|

||||

"ec2_securitygroup_allow_wide_open_public_ipv4": "extra778",

|

||||

"ec2_securitygroup_default_restrict_traffic": "check43",

|

||||

"ec2_securitygroup_from_launch_wizard": "extra7173",

|

||||

"ec2_securitygroup_in_use_without_ingress_filtering": "extra74",

|

||||

"ec2_securitygroup_not_used": "extra75",

|

||||

"ec2_securitygroup_with_many_ingress_egress_rules": "extra777",

|

||||

"ecr_repositories_lifecycle_policy_enabled": "extra7194",

|

||||

@@ -137,6 +138,7 @@ checks_v3_to_v2_mapping = {

|

||||

"elbv2_internet_facing": "extra79",

|

||||

"elbv2_listeners_underneath": "extra7158",

|

||||

"elbv2_logging_enabled": "extra717",

|

||||

"elbv2_request_smugling": "extra7142",

|

||||

"elbv2_ssl_listeners": "extra793",

|

||||

"elbv2_waf_acl_attached": "extra7129",

|

||||

"emr_cluster_account_public_block_enabled": "extra7178",

|

||||

|

||||

@@ -81,4 +81,36 @@ Standard results will be shown and additionally the framework information as the

|

||||

|

||||

## Create and contribute adding other Security Frameworks

|

||||

|

||||

This information is part of the Developer Guide and can be found here: https://docs.prowler.cloud/en/latest/tutorials/developer-guide/.

|

||||

If you want to create your own security frameworks, or add public ones to Prowler, first make sure the checks they need are available; if they are not, you have to create them. Then create one compliance file per provider, as in `prowler/compliance/aws/`, name it `<framework>_<version>_<provider>.json`, and use the format described below.

|

||||

|

||||

Each version of a framework has its own file with the following high-level structure, so that every framework can be identified in a generic way. A requirement may also be called a control, and one requirement can be linked to multiple Prowler checks:

|

||||

|

||||

- `Framework`: string. Distinguishing name of the framework, like CIS.

|

||||

- `Provider`: string. Provider where the framework applies, such as AWS, Azure, OCI,...

|

||||

- `Version`: string. Version of the framework itself, like 1.4 for CIS.

|

||||

- `Requirements`: array of objects. Include all requirements or controls with the mapping to Prowler.

|

||||

- `Requirements_Id`: string. Unique identifier per each requirement in the specific framework

|

||||

- `Requirements_Description`: string. Description as in the framework.

|

||||

- `Requirements_Attributes`: array of objects. Includes all needed attributes per each requirement, like levels, sections, etc. Whatever helps to create a dedicated report with the result of the findings. Attributes would be taken as closely as possible from the framework's own terminology directly.

|

||||

- `Requirements_Checks`: array. Prowler checks that are needed to prove this requirement. It can be one or multiple checks. In case of no automation possible this can be empty.

|

||||

|

||||

```

|

||||

{

|

||||

"Framework": "<framework>-<provider>",

|

||||

"Version": "<version>",

|

||||

"Requirements": [

|

||||

{

|

||||

"Id": "<unique-id>",

|

||||

"Description": "Requirement full description",

|

||||

"Checks": [

|

||||

"Here is the prowler check or checks that is going to be executed"

|

||||

],

|

||||

"Attributes": [

|

||||

{

|

||||

<Add here your custom attributes.>

|

||||

}

|

||||

]

|

||||

}
]
}

|

||||

```
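Before adding a new compliance file, its shape can be checked with a few lines of Python. The sketch below is an assumption-based illustration, not part of Prowler: it checks only the keys used in the JSON template above (`Framework`, `Version`, `Requirements`, and per requirement `Id`, `Description`, `Checks`, `Attributes`), and the function name and sample values are hypothetical.

```python
import json
from typing import Any, Dict, List

TOP_LEVEL_KEYS = ("Framework", "Version", "Requirements")
REQUIREMENT_KEYS = ("Id", "Description", "Checks", "Attributes")


def find_structure_problems(framework: Dict[str, Any]) -> List[str]:
    """Return human-readable problems; an empty list means the shape looks right."""
    problems = [f"missing top-level key: {key}" for key in TOP_LEVEL_KEYS if key not in framework]
    for index, requirement in enumerate(framework.get("Requirements", [])):
        for key in REQUIREMENT_KEYS:
            if key not in requirement:
                problems.append(f"requirement {index}: missing key {key}")
        # Checks may be empty when no automation is possible, but it must be a list.
        if not isinstance(requirement.get("Checks", []), list):
            problems.append(f"requirement {index}: Checks must be a list")
    return problems


# Sample values are placeholders for illustration.
sample = json.loads("""
{
  "Framework": "CIS-AWS",
  "Version": "1.4",
  "Requirements": [
    {
      "Id": "1.1",
      "Description": "Example requirement",
      "Checks": ["iam_user_hardware_mfa_enabled"],
      "Attributes": [{"Section": "1"}]
    }
  ]
}
""")
print(find_structure_problems(sample))
```

A check like this only covers the outline; the attributes themselves remain framework-specific.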

|

||||

|

||||

Finally, to have a proper output file for your reports, your framework data model has to be created in `prowler/lib/outputs/models.py` and also the CLI table output in `prowler/lib/outputs/compliance.py`.

|

||||

|

||||

@@ -1,281 +0,0 @@

|

||||

# Developer Guide

|

||||

|

||||

You can extend Prowler in many different ways. In most cases you will want to create your own checks and compliance security frameworks, and here you can learn how to get started. We also cover how to create custom outputs, integrations and more.

|

||||

|

||||

## Get the code and install all dependencies

|

||||

|

||||

First of all, you need Python 3.9 or higher and pip installed to be able to install all the required dependencies. Once that is satisfied, go ahead and clone the repo:

|

||||

|

||||

```

|

||||

git clone https://github.com/prowler-cloud/prowler

|

||||

cd prowler

|

||||

```

|

||||

To isolate the project and avoid conflicts with other environments, we recommend using `poetry`:

|

||||

```

|

||||

pip install poetry

|

||||

```

|

||||

Then install all dependencies including the ones for developers:

|

||||

```

|

||||

poetry install

|

||||

poetry shell

|

||||

```

|

||||

|

||||

## Contributing with your code or fixes to Prowler

|

||||

|

||||

This repo has git pre-commit hooks managed via the pre-commit tool. Install it however you like, then run the following in the root of this repo:

|

||||

```

|

||||

pre-commit install

|

||||

```

|

||||

You should get an output like the following:

|

||||

```

|

||||

pre-commit installed at .git/hooks/pre-commit

|

||||

```

|

||||

|